TL;DR: Key takeaways

A quick summary for creators who need fast answers. You'll learn how an AI anime voice generator creates character voices, manages voice cloning, and handles dubbing with subtitles.

- What you'll learn: step-by-step voice creation, cloning workflow, dubbing, subtitle export, pricing, and legal checklist.
- Verdict: AI voices speed production and keep character tones consistent, but expect manual tuning and rights checks for commercial use.
- Who should try it first: indie animators, visual novel devs, YouTubers, podcast producers, and localization teams testing small runs.
- Quick tech tips: start with short test clips, use style tags for emotion, record clean source audio for cloning, and export MP3/WAV plus SRT for subtitles.
- Immediate next step: make one demo voice, run a short commercial-rights checklist, and iterate on delivery and timing.

What is an AI anime/storytelling voice generator?

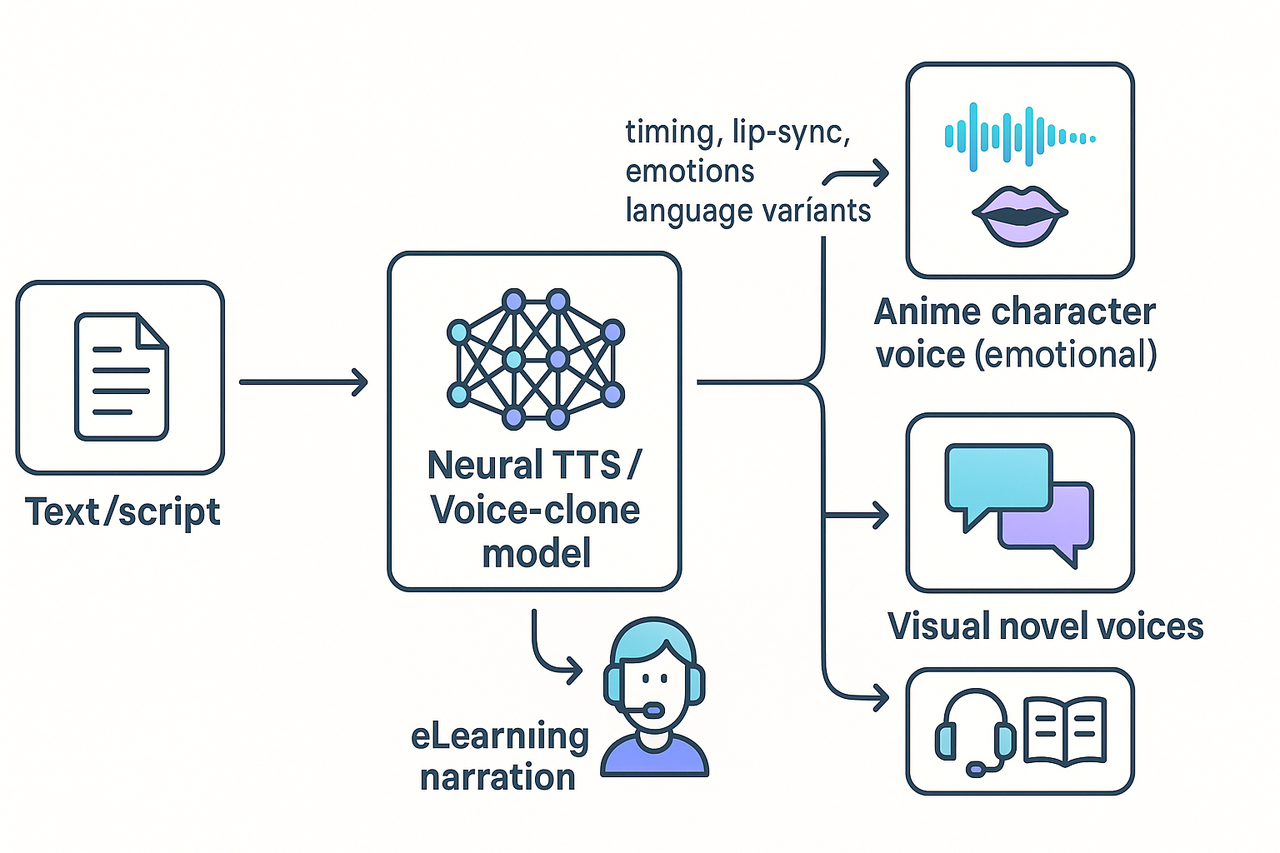

An AI anime voice generator turns written lines into stylized character speech. It uses neural text-to-speech and voice cloning to produce voices that sound like anime characters or bespoke narrators. This section explains the key tech and where creators use these tools.

Core tech: neural TTS and voice cloning

Neural text-to-speech, or neural TTS, converts text into natural-sounding audio using deep learning models. Voice cloning copies a target speaker’s vocal traits from short reference clips: according to Voice Cloning: Comprehensive Survey, a few seconds of reference audio can be enough to synthesize speech that closely mimics the target speaker's voice. Together, these systems let you make new lines, match timing, and add emotion.

How creators use it

Common use cases include:

- Anime dubbing: create character voices and lip-sync lines for scenes.
- Visual novels and games: supply multiple character voices without large casting budgets.
- eLearning narration: produce consistent, clear lesson voices in many languages.
- Podcast characters and serialized audio drama: build distinct personalities quickly.
- Localization and dubbing workflows: translate and dub video while keeping tone and timing.

These tools export standard audio formats like MP3 and WAV, and often integrate subtitle and timing data for tight lip-sync and dubbing. For creators, that means faster production, lower cost, and easier multilingual releases without losing character or clarity.

Why DupDub: Features that matter for animation and storytelling

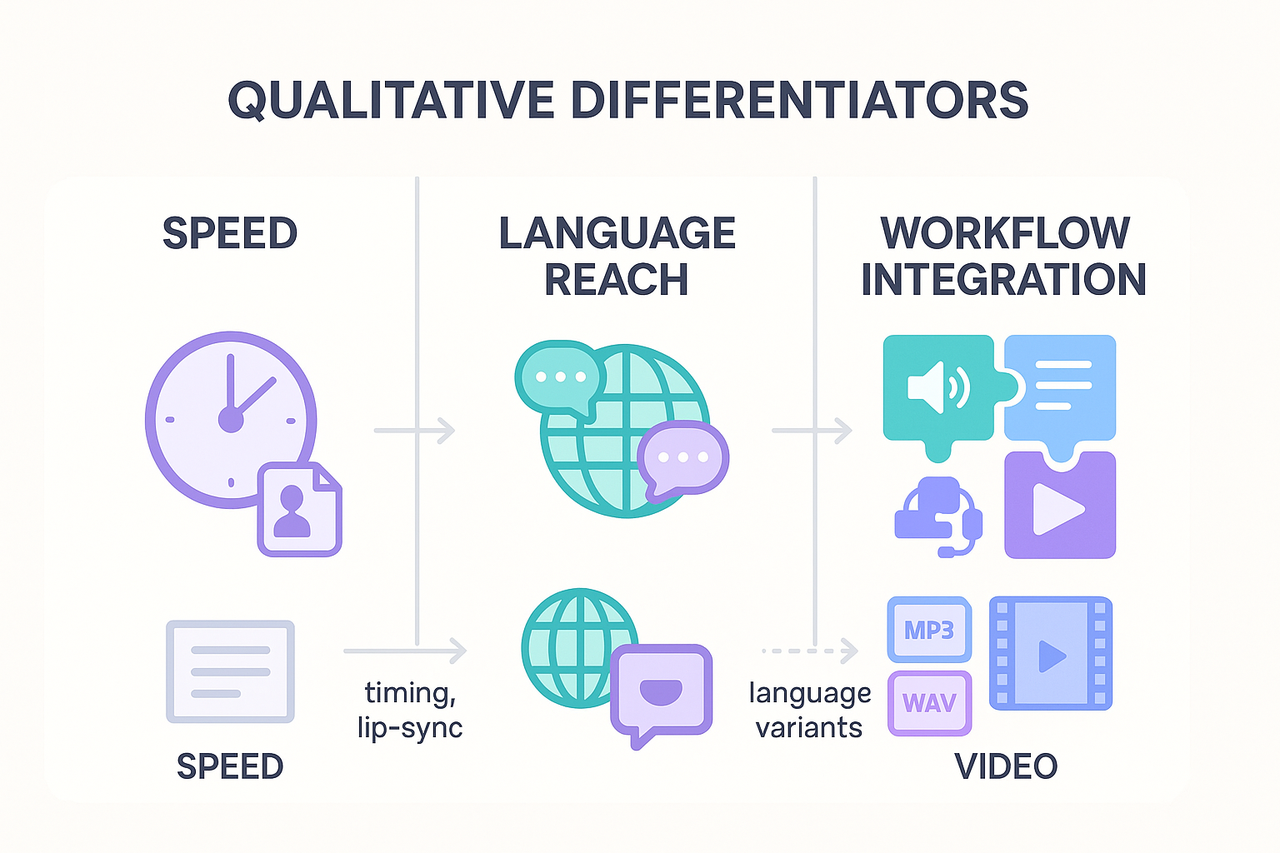

AI anime voice generator workflows need speed, variety, and consistency.

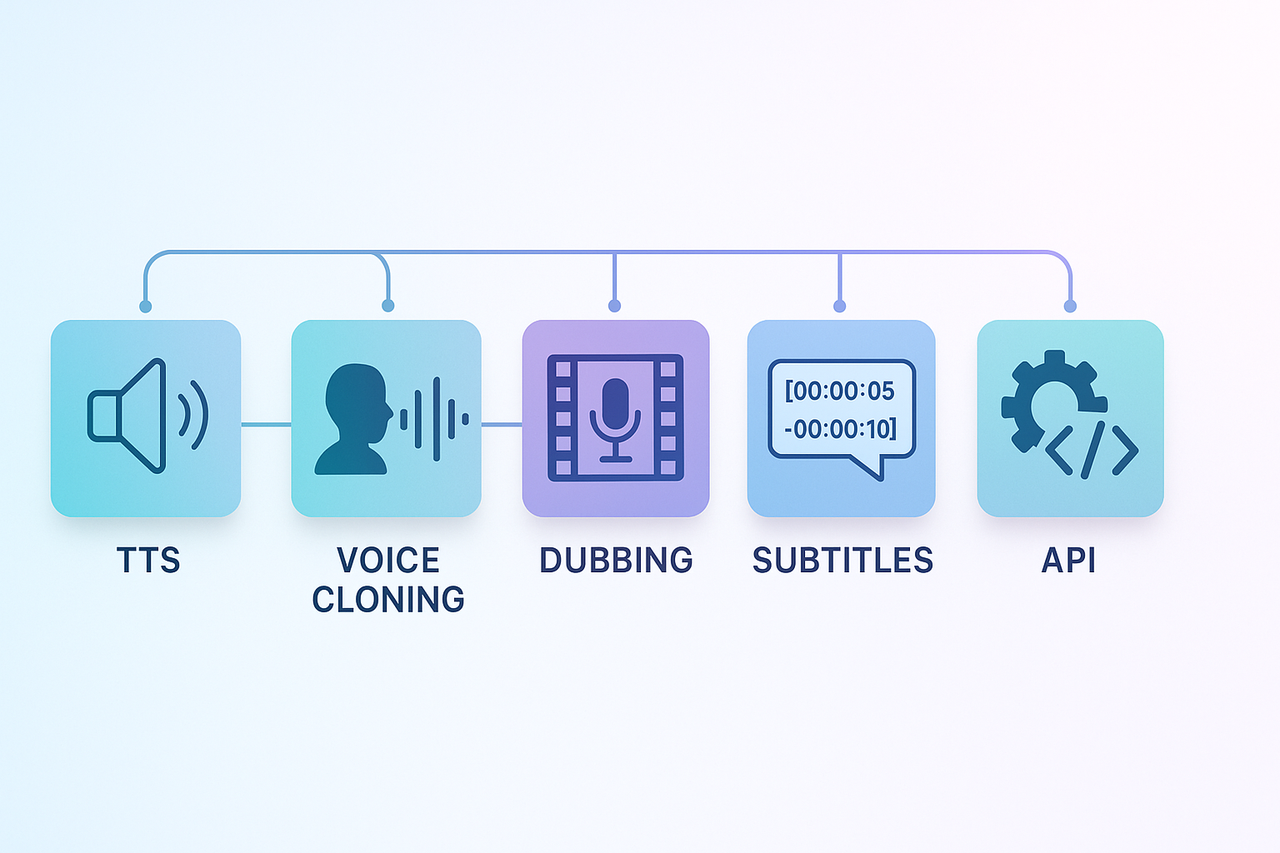

DupDub packs a huge voice and style library, cross-language cloning, and a one-stop dubbing and subtitle pipeline. That mix helps animators, visual novel devs, and storytellers move from script to synced audio fast.

Big voice library and fast cloning

The platform gives 700+ AI voices and 1,000+ styles, so you can pick anime tones, dramatic narrators, or quirky side characters. Voice cloning supports 47 languages, so you can recreate a cast voice or match a live actor for consistent characters. That saves casting time and keeps voice identity across episodes.

End-to-end dubbing, subtitles, and translation

Integrated tools handle TTS, speech-to-text, subtitle alignment, and video translation in one flow. Export SRT and MP4 with voice and captions in the same session, so lips, lines, and on-screen text all match. Key benefits:

- One file upload, multiple language outputs
- Aligned subtitles and dubbing, no separate editors
- Exports ready for use in video timelines

Real-time generation, API, and production scale

Low-latency generation lets you iterate quickly during direction. API access automates batch dubbing and content pipelines for series or courseware. The platform scales from single creators to studios, so you don’t need separate tools as projects grow.

Why a unified workflow saves time

Keeping TTS, cloning, dubbing, and SRT export under one roof cuts handoffs and rework. You maintain consistent tone and timing across episodes, languages, and marketing clips. In short, you spend less time stitching tools and more time directing performances and refining story delivery.

Step-by-step: Create an anime character or storytelling voice with DupDub

This hands-on walkthrough shows how to pick a base voice or upload a clone sample, tune style and timing, add lip-sync or a talking photo, and export audio and subtitle files. Follow these steps to make a believable anime character or a narrative voice for video, visual novels, podcasts, or eLearning.

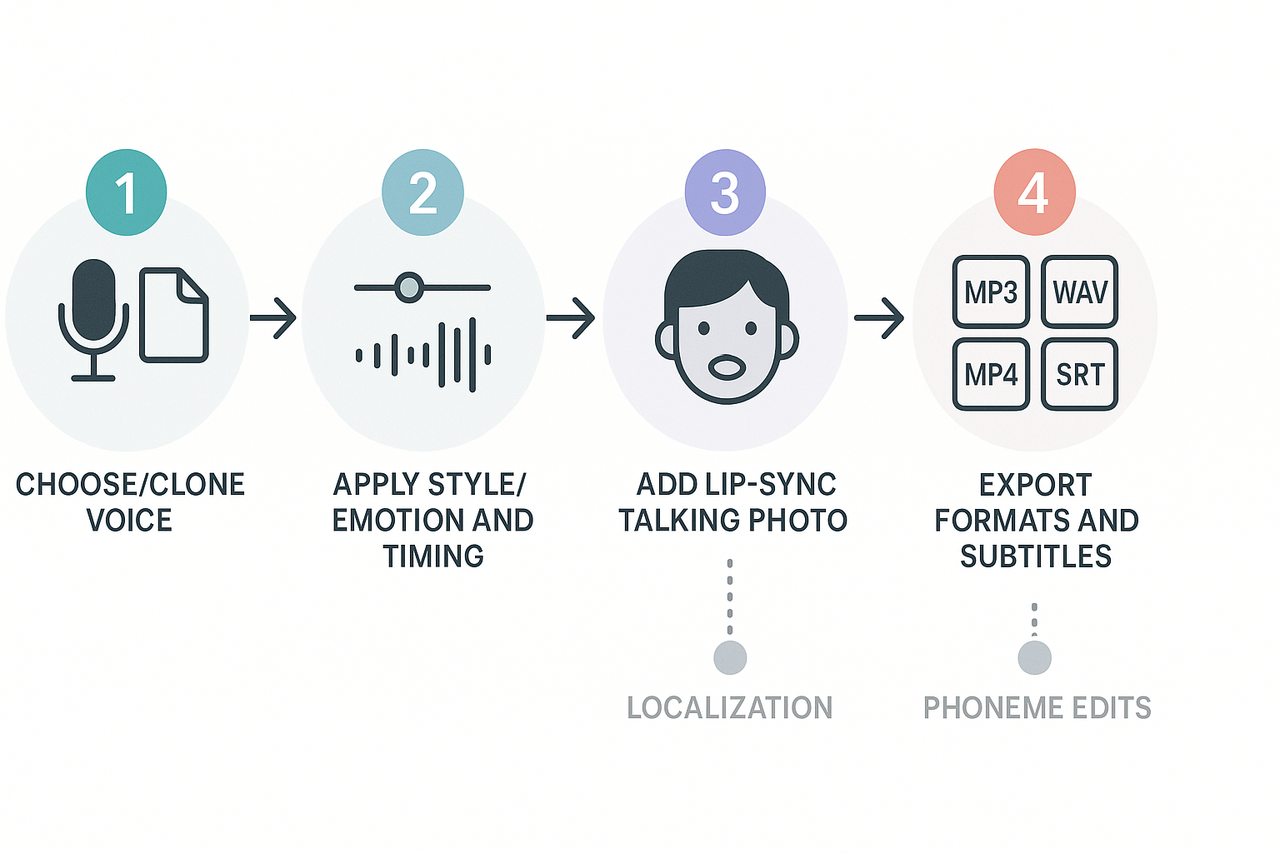

1) Choose a base voice or upload a clone

Open the platform and browse the voice library. Pick a base voice that matches the character's age, pitch, and energy. Alternatively, upload a clean 30- to 60-second sample to create a cloned voice. Shorter clips can work, but longer, consistent recordings give more natural results. Name the clone and save it to a project folder so you can reuse it across episodes.
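Before uploading, it can help to confirm that a sample actually falls in the 30 to 60 second range. The sketch below is a local pre-check using only Python's standard `wave` module; the function names and the three return labels are illustrative assumptions, not part of any DupDub tooling, and it only handles uncompressed PCM WAV files.

```python
import wave


def wav_duration_seconds(source) -> float:
    """Return the duration in seconds of a PCM WAV file (path or binary file object)."""
    with wave.open(source, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def check_clone_sample(source, min_s: float = 30.0, max_s: float = 60.0) -> str:
    """Classify a sample against the 30 to 60 second guideline (labels are hypothetical)."""
    duration = wav_duration_seconds(source)
    if duration < min_s:
        return "too short"
    if duration > max_s:
        return "longer than needed"
    return "ok"
```

Call `check_clone_sample("sample.wav")` with your own file path. A length check says nothing about noise or consistency, so still listen to the clip before uploading.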

2) Apply style, emotion and delivery

Enter the voice editor and pick a style preset like playful, stoic, or dramatic. Move emotion sliders to control intensity, and set tone for breathiness or grit. Use the preview button on 2 to 4 second phrases. Tweak until the character’s voice fits the scene, then save the style as a preset.

3) Adjust timing, breaths and phonetics

Open the phrase or timeline editor. Shift phrase timing to match scene beats. Add breaths or mouth clicks to sell realism. For names or made-up words, edit phonetic spellings so pronunciation is accurate. Small timing shifts often change how emotional a line feels.
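If a script repeats the same invented names, you can apply phonetic respellings to the text before pasting it into the editor. This is a minimal sketch in plain Python; the names and respellings are made-up examples, and the right spelling for any given voice has to be found by ear in the preview.

```python
import re

# Hypothetical respellings; tune each one by ear in the preview.
RESPELLINGS = {
    "Ryuuji": "ree-YOO-jee",
    "Aetheria": "ay-THEER-ee-uh",
}


def apply_respellings(line: str, respellings: dict[str, str]) -> str:
    """Replace whole-word matches of each key with its phonetic respelling."""
    if not respellings:
        return line
    # Longest keys first so a short name never shadows a longer one.
    words = sorted(respellings, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(re.escape(w) for w in words) + r")\b")
    return pattern.sub(lambda match: respellings[match.group(1)], line)


print(apply_respellings("Ryuuji guards the gates of Aetheria.", RESPELLINGS))
```

Keeping the respellings in one dictionary means every episode pronounces a name the same way.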

4) Add lip-sync or a talking photo

Upload character art or video. Use the talking photo tool to generate lip and facial movement. Drag the audio clip onto the visual track, then nudge frames to fix lip flaps. Export a low resolution MP4 proof to confirm sync before final render.

5) Export audio, video and subtitles

Export MP3 or WAV for standalone voiceovers. Export MP4 when you want a combined video file. Generate SRT for subtitles and use subtitle alignment to tighten timestamps. For localization, duplicate the timeline, swap voices, and regenerate SRT in the target language.
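For reference, an SRT file is plain text: a running index, a `start --> end` timestamp line in `HH:MM:SS,mmm` form, the subtitle text, and a blank line between cues. The sketch below builds that layout from a list of cues so you can sanity-check an export or hand-write a short test file; the function names and the millisecond-tuple input are assumptions made for illustration.

```python
def format_timestamp(ms: int) -> str:
    """Format milliseconds as an SRT timestamp, HH:MM:SS,mmm."""
    hours, rest = divmod(ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    seconds, millis = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d},{millis:03d}"


def build_srt(cues: list[tuple[int, int, str]]) -> str:
    """Build SRT text from (start_ms, end_ms, text) cues, numbered from 1."""
    blocks = []
    for index, (start_ms, end_ms, text) in enumerate(cues, start=1):
        timing = f"{format_timestamp(start_ms)} --> {format_timestamp(end_ms)}"
        blocks.append(f"{index}\n{timing}\n{text}\n")
    # Joining with a newline leaves one blank line between cues.
    return "\n".join(blocks)


print(build_srt([(0, 1500, "You came back."), (1800, 3200, "Why now?")]))
```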

Quick release checklist

- Confirm consent and commercial rights for any cloned voice.
- Normalize audio loudness for your target platform.
- Run a subtitle sweep for timing and typos.
- Test final files on headphones and mobile speakers.

Voice cloning, ethics & legal checklist

This section covers must-have rules for cloning voices for animation and story work. If you use an AI anime voice generator, get consent early and keep records. Follow the checklist below to reduce legal risk and stay ethically sound.

Get clear consent

Always get written consent from the speaker before cloning a voice. Verify identity, record the consent date, and state permitted uses, territories, and duration. Regulators are paying attention: in Copyright and Artificial Intelligence, the U.S. Copyright Office recommends the enactment of a federal digital replica right to protect individuals from unauthorized digital replicas of their likeness, including voice, for commercial purposes.

Copyable legal checklist

- Written release signed by the speaker, with date and ID method
- Explicit commercial license clause (ads, games, streaming)
- Limits on edits, endorsements, and sensitive uses
- Retain consent files and generation logs for 3+ years
- Verify minors or third-party likeness rights separately
- Use watermarks or metadata where possible to tag AI audio
- Have a takedown and dispute contact and response SLA

DupDub notes: only original speakers may be uploaded for cloning, and voice data is encrypted and not used for third-party training. Keep consent records linked to each cloned voice to lower takedown risk.
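One way to keep consent linked to each cloned voice is a small structured record stored next to the voice in your project files. The sketch below is an assumption about what such a record could hold, based on the checklist items (date, ID method, permitted uses, territories, duration); the class and field names are hypothetical and not a DupDub feature.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ConsentRecord:
    """Illustrative consent record for one cloned voice (all field names are hypothetical)."""
    speaker: str
    voice_id: str
    signed_on: date
    expires_on: date
    id_method: str
    permitted_uses: frozenset[str]
    territories: frozenset[str]

    def permits(self, use: str, territory: str, on: date) -> bool:
        """True if the use and territory are covered and the date is inside the consent window."""
        return (
            self.signed_on <= on <= self.expires_on
            and use in self.permitted_uses
            and territory in self.territories
        )


record = ConsentRecord(
    speaker="Example Speaker",
    voice_id="hero-voice-01",
    signed_on=date(2025, 1, 10),
    expires_on=date(2027, 1, 9),
    id_method="video call with photo ID",
    permitted_uses=frozenset({"games", "streaming"}),
    territories=frozenset({"US", "JP"}),
)
print(record.permits("streaming", "US", date(2025, 6, 1)))
```

Checking `permits(...)` before each release makes an out-of-scope use, such as an ad when only games and streaming were licensed, visible before it ships.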

Pricing & comparison: DupDub vs. competitors

This section compares pricing models, credit systems, and gated features so creators can pick the right plan for their volume and use case. It covers credit mechanics, which features are limited by tier, and when to choose DupDub versus AudioModify or another vendor.

If you’re choosing an AI anime voice generator for serial dubbing or episodic narration, pricing affects how fast you scale. DupDub uses a credit-based system with tiered subscriptions and a short free trial, while competitors often mix per-minute or flat-subscription billing.

Demand is rising: according to MarketsandMarkets, the global Text-to-Speech market was valued at USD 4.0 billion in 2024 and is anticipated to reach USD 7.6 billion in 2029, at a CAGR of 13.7% during the forecast period.
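The quoted figures are internally consistent, which is quick to verify: compounding USD 4.0 billion at 13.7% a year for the five years from 2024 to 2029 lands on roughly USD 7.6 billion. The helper name below is illustrative.

```python
def project_value(start: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate."""
    return start * (1 + cagr) ** years


# USD 4.0 billion in 2024, 13.7% CAGR, five years to 2029.
projected = project_value(4.0, 0.137, 5)
print(f"{projected:.1f}")  # 7.6
```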

How credit-based pricing works

- Credits buy minutes, clones, avatars, dubbing, and exports. One credit equals a small unit of processing, not a minute of audio. Expect to spend more credits on high-quality voices, cloning, and multi-language dubbing.
- Higher tiers unlock more clones, API access, faster processing, and priority support. Lower tiers cap cloning and slow batch jobs.
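Because a credit is a unit of processing rather than a minute of audio, it helps to budget from your own measured usage. The sketch below is a generic estimator, not DupDub's pricing formula: the function name, the idea of a "unit of work", and every rate are placeholders you would replace with numbers from the plan page or from a trial run.

```python
import math


def estimate_credits(units: int, credits_per_unit: float, languages: int = 1) -> int:
    """Rough credit budget: units of work x rate x number of language outputs.

    All inputs are assumptions supplied by the caller; no real rate is built in.
    """
    if units < 0 or credits_per_unit < 0 or languages < 1:
        raise ValueError("units and rate must be non-negative, languages at least 1")
    return math.ceil(units * credits_per_unit * languages)


# Hypothetical example: 12 scenes, 1.5 credits per scene, dubbed into 3 languages.
print(estimate_credits(units=12, credits_per_unit=1.5, languages=3))  # 54
```

Measure the real rate by generating a few representative clips during the trial and dividing credits spent by clips produced.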

Quick comparison table

| What matters | DupDub (credit-based) | AudioModify / others (typical) |
| --- | --- | --- |
| Free trial | Short, with starter credits | Varies, often limited samples |
| Cloning limits | Included by plan, more clones at higher tiers | Often pay-per-clone or enterprise-only |
| API access | Available on higher tiers | May require enterprise plan |
| Priority support | Top tier | Usually enterprise only |

Which option to choose:

- If you need integrated video, subtitles, and translation in one workflow, pick the credit-based option.
- If you want simple per-minute pricing for occasional work, consider minute-based competitors.
- If you need heavy cloning, API automation, or fast turnaround, prefer a higher-tier credit plan.

Real-world mini case studies & user testimonials

Three short, concrete examples show how creators use an AI anime voice generator for real projects. Read quick outcomes, measured time or cost savings, two creator quotes, and links to downloadable sample clips hosted with the article.

Indie animator: rapid character voicing

An indie animator used DupDub to voice seven characters for a five-minute pilot. They cut production time from three days to four hours by generating and iterating voices in one session. Outcome: saved roughly 80% of voiceover time and avoided hiring multiple actors.

Localization team: dubbing into 10 languages

A small localization squad translated and dubbed a 12-minute short into 10 languages. The tool produced synced audio and subtitles in under two days, versus an estimated two weeks with studio work. Outcome: faster global release and 60% lower per-language cost.

Educator: consistent course narration

An online course creator cloned a single narrator voice for a 40-lesson series. The clone kept tone and pacing consistent across 20 hours of content. Outcome: saved hiring fees and sped up production by half.

Creator quotes

Maya, indie animator: “It felt like having a full casting room in my browser.”

Luis, localization lead: “Delivering 10 languages in days changed our release plan.”

These mini case studies show practical wins: speed, language reach, and consistent voice branding for animation and storytelling projects.

Practical tips, troubleshooting & pro techniques

Quick, hands-on tips to make AI audio feel real and keep projects on budget. This section covers voice naturalness, using SSML and style presets, fixing lip-sync and subtitle drift, and when to add human passes for the best results. It’s aimed at animators and storytellers who want polished, production-ready audio.

Improve naturalness fast

- Record short emotional references and pick a matching style. Small changes in pacing and breath sound real.
- Add brief pauses and punctuation in text to guide phrasing. That helps character and flow.
- Layer subtle room tone under the AI audio to match your scene.

Use SSML and style presets

SSML (Speech Synthesis Markup Language) controls pitch, rate, and pauses. Use it to add emphasis and timing. Try style presets first, then tweak SSML for fine control.
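As a concrete illustration, the sketch below builds a small SSML snippet with Python's standard XML library, using the standard `prosody`, `break`, and `emphasis` elements. Which SSML tags and attribute values a given platform accepts varies, so treat the tag support, the `build_ssml` helper, and the default values as assumptions to verify against the tool you use.

```python
import xml.etree.ElementTree as ET


def build_ssml(before: str, emphasized: str, after: str,
               rate: str = "95%", pitch: str = "+2st", pause_ms: int = 300) -> str:
    """Return an SSML string: slowed, raised speech with a pause and one stressed word."""
    speak = ET.Element("speak", version="1.1")
    prosody = ET.SubElement(speak, "prosody", rate=rate, pitch=pitch)
    prosody.text = before
    # A timed pause between the two sentences.
    ET.SubElement(prosody, "break", time=f"{pause_ms}ms")
    emphasis = ET.SubElement(prosody, "emphasis", level="strong")
    emphasis.text = emphasized
    emphasis.tail = after  # text that follows the emphasized word
    return ET.tostring(speak, encoding="unicode")


print(build_ssml("You came back.", "Why", " now?"))
```

Building the markup with an XML library rather than string concatenation keeps it well-formed and escapes characters such as `&` in dialogue automatically.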

Fix sync and subtitle issues

- Export SRT and compare timestamps to your video track.
- Nudge audio by 50–200 ms to fix lip-sync drift.
- If translation changes line length, tighten phrasing or split lines so subtitles stay readable and in sync with the picture.
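When the audio is already locked, the mirror-image fix is to shift the subtitle timestamps by the same 50–200 ms instead. Below is a minimal sketch that offsets every timing line in an SRT file; the function name is an assumption, and it applies one constant offset, so it will not correct drift that grows over the length of a clip.

```python
import re

TIMESTAMP = re.compile(r"(\d{2,}):(\d{2}):(\d{2}),(\d{3})")


def shift_srt(srt_text: str, offset_ms: int) -> str:
    """Shift all SRT cue timestamps by offset_ms (negative = earlier), clamped at zero."""
    def shift(match: re.Match) -> str:
        h, m, s, ms = (int(group) for group in match.groups())
        total = max(0, ((h * 60 + m) * 60 + s) * 1000 + ms + offset_ms)
        h, rest = divmod(total, 3_600_000)
        m, rest = divmod(rest, 60_000)
        s, ms = divmod(rest, 1000)
        return f"{h:02d}:{m:02d}:{s:02d},{ms:03d}"

    shifted = []
    for line in srt_text.split("\n"):
        # Only touch timing lines, never timestamps quoted inside subtitle text.
        shifted.append(TIMESTAMP.sub(shift, line) if "-->" in line else line)
    return "\n".join(shifted)


sample = "1\n00:00:01,000 --> 00:00:02,500\nHello\n"
print(shift_srt(sample, 150))
```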

Manage credits and costs

Batch similar lines to reuse styles and reduce per-clip overhead. Use low-quality drafts for reviews, then render final files at high quality.
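Batching can be as simple as grouping script lines by voice and style before you generate anything. The sketch below assumes each script line is a `(voice, style, text)` tuple; that shape and the function name are illustrative choices, not a platform format.

```python
from collections import defaultdict


def batch_by_style(lines: list[tuple[str, str, str]]) -> dict[tuple[str, str], list[str]]:
    """Group (voice, style, text) script lines so each voice/style pair is generated together."""
    batches: dict[tuple[str, str], list[str]] = defaultdict(list)
    for voice, style, text in lines:
        batches[(voice, style)].append(text)
    return dict(batches)


script = [
    ("Hero", "dramatic", "I won't run."),
    ("Sidekick", "playful", "Speak for yourself!"),
    ("Hero", "dramatic", "Stay behind me."),
]
for (voice, style), texts in batch_by_style(script).items():
    print(voice, style, len(texts))
```

Each group can then be generated in one pass with a single saved preset, which keeps style settings consistent across lines.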

Human-in-the-loop and blending

Use AI for the first pass, then have an actor re-record tricky lines. Blend AI and human takes for consistent character timbre and fast-turn localization. Small human edits lift emotion, and recording with a consenting actor can reduce legal risk when you use cloned voices.

FAQ — common questions

- How accurate is voice cloning with an AI anime voice generator?

  It can be very close for tone and timing, but accuracy depends on sample quality and length. Provide 30 to 60 seconds of clean, emotion-rich audio for the best match. Expect small differences in prosody with extreme or highly stylized character voices.

- Can I use cloned voices for commercial storytelling voice projects?

  Yes, but only with the proper rights: either you recorded the source speaker or you have written permission. Read the platform terms for commercial use and keep consent records for licensing checks.

- What file formats and integrations support anime and storytelling voice-over workflows?

  Exports typically include MP3 and WAV for audio, MP4 for video, and SRT for subtitles. Common integrations are an API, Canva, and a YouTube plugin.

- How do I start a free trial and test a storytelling voice AI?

  Sign up with email and verify your account, then use trial credits to generate voices and clones. For example, DupDub provides a 3-day free trial with 10 credits to test cloning and dubbing.

- What privacy, support, and data retention rules should I check?

  Look for voice data encryption, a clear no-third-party-training policy, and controls to delete uploaded voices. Use support chat or email if you need help or a data removal request.

- How do I get the best quality anime-style or storytelling voice?

  Write short, directed lines, note emotion and pacing, and record multiple takes. Add light post-processing like de-noise, EQ, and breath edits to make the voice feel natural.