TL;DR — Key takeaways on celebrity voice rights in the AI era

- Which laws and claims matter for voice use, and how they differ by jurisdiction.
- Practical contract clauses and consent workflows that reduce litigation risk.
- A takedown and response playbook for suspected misuse.
- How technical controls map to legal remedies (proof, limitation, and takedown).

- Require written consent before cloning or commercializing any identifiable voice, and archive the signed file with its metadata. (Proof matters.)
- Add narrow license terms: allowed uses, duration, revocation, and indemnity. Include a one-sentence summary of each term so talent can review and sign quickly.
- Turn on immutable audit logs and voice-locking in your production tools so you can demonstrate provenance and limit downstream use.

Voice as likeness: statutory and common-law protection

How voice claims differ from other legal tools

- Copyright: protects original recordings and scripts, not a person’s vocal traits. A use can infringe copyright in a recording without violating a voice right, and vice versa.
- Trademark: guards brand identifiers, like a name or logo. A voice can function as a brand only in narrow cases where consumers associate it with a source.
- Privacy torts: focus on intrusion, public disclosure, or false light. Those can overlap, but they address private harms rather than commercial misappropriation.

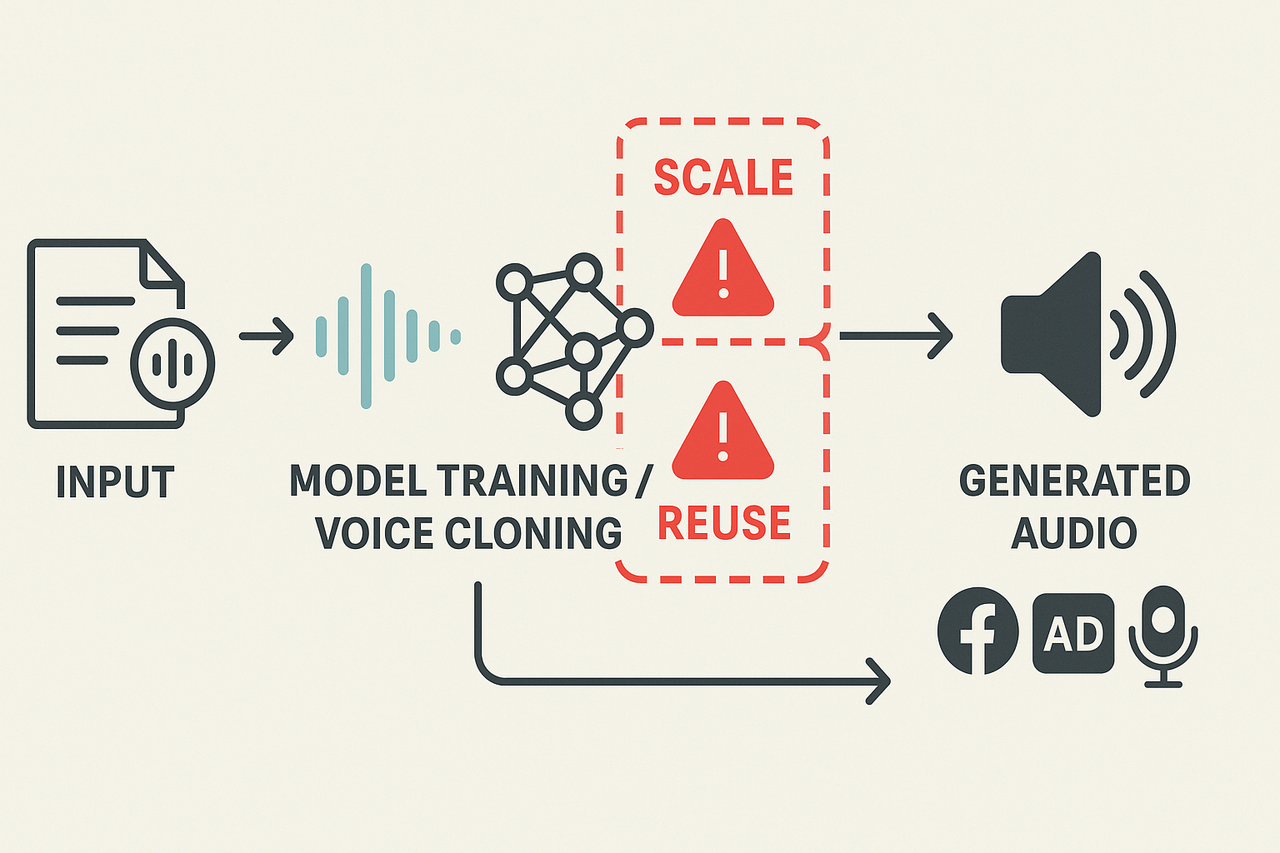

Why AI voice cloning changes the legal and practical landscape

How modern voice synthesis works

- Advertising that implies an endorsement without consent.
- Impersonation for fraud or social engineering.
- Political deepfakes that spread disinformation.

Why traditional clearance and takedown fall short

Legal frameworks: U.S. state law and a global comparison

U.S. snapshot: patchwork of statutes and common law

- Who holds the right: some states let estates enforce post-mortem rights. Durations vary by state.
- What counts: image and name are nearly always protected; voice and mannerisms may be named explicitly in a statute or left to litigation.
- Remedies: courts grant injunctions, disgorgement of profits, statutory damages under some statutes, and punitive awards in rare cases.

Global comparison: no single model

- United Kingdom: No single statutory right of publicity. Claims usually use passing off, privacy, or copyright in specific works. Courts have been cautious about a broad publicity right.
- European Union: No harmonized right of publicity. Individual member states mix personality, image, and data protection rules. Watch the EU AI Act for tech-specific rules.
- Canada: Personality protection depends on the province and common law torts. Commercial use claims succeed inconsistently.
- Australia: No broad statutory publicity right. Claims often use misleading conduct or privacy-based causes of action.
- India: There is no unified publicity statute. Rights arise from a mix of personality, copyright, and unfair competition claims.

What to monitor

- State bills that add voice to publicity statutes, or that limit AI cloning without consent.
- EU-level AI rules and national implementations that affect biometric or voice processing.
- High-court decisions that clarify whether voice equals identity in common-law states.

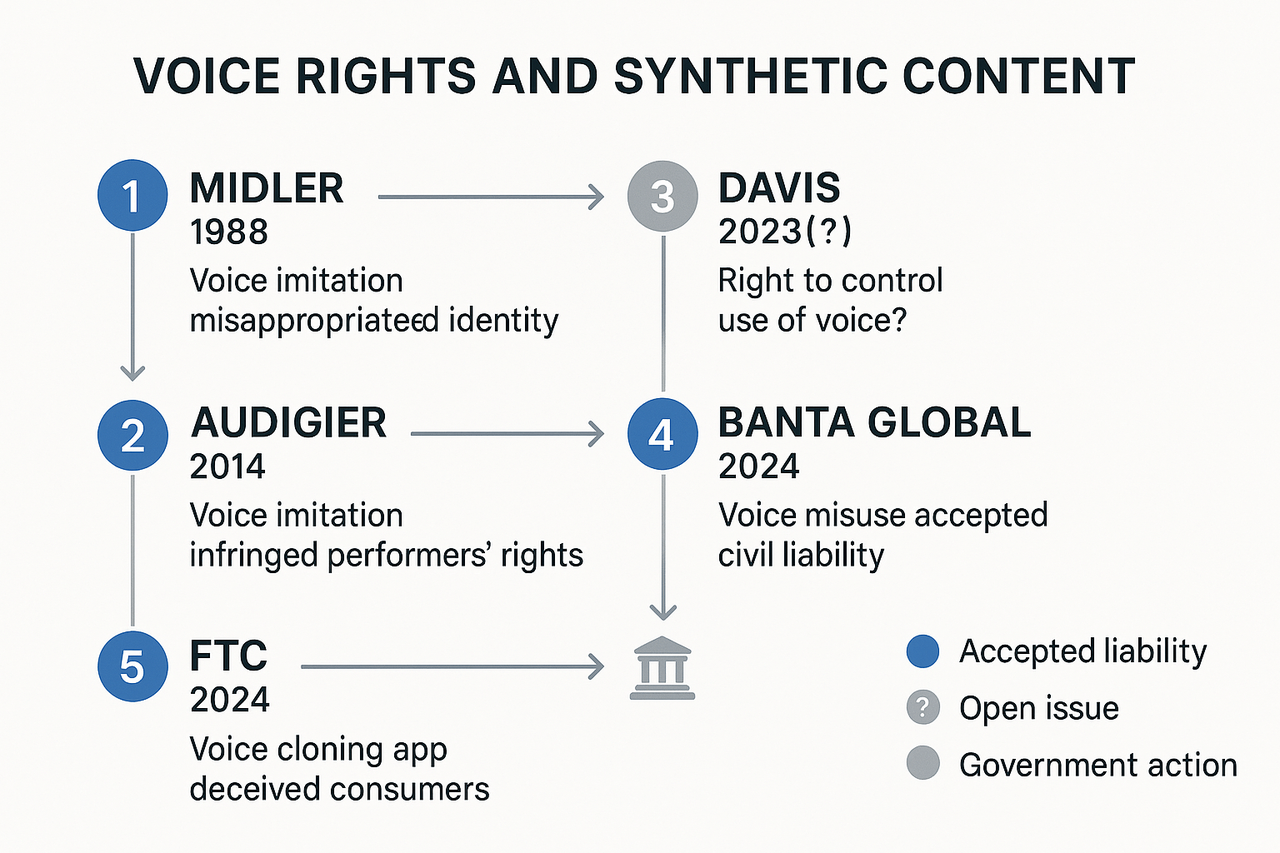

High-profile cases & precedents (what courts have said so far)

Key U.S. decisions

Notable international rulings and government actions

Practical takeaways for creators and platforms

- Assume voice imitation can trigger publicity claims in many U.S. states.
- Get written licenses for any identifiable voice, and log consent.
- Use moderation and detection tools, because courts may consider platform practices when assessing liability.

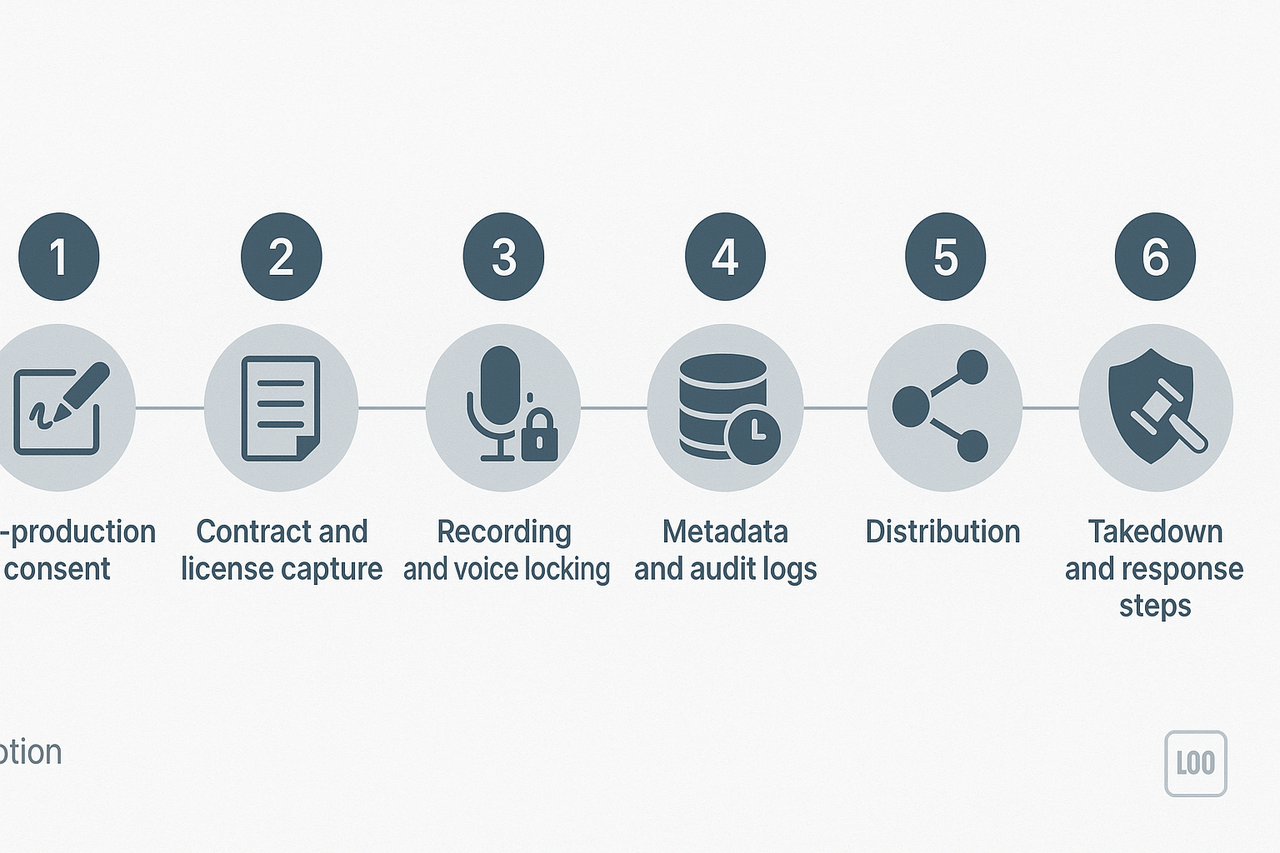

Practical compliance checklist for creators, producers, and platforms

Pre-production: get clear consent and releases

- Obtain written, time-stamped consent that names permitted uses and languages. Store the originals securely.
- Use a model release plus an AI-specific license clause (scope, territory, duration, exclusivity). Spell out dubbing, cloning, and translation rights.
- Record baseline voice samples and a video of the recording session for proof of identity and voluntariness.
- Require proof of authority for estates or agents when talent is represented.
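The scope fields named above (permitted uses, languages, territory, duration) can also be stored as structured data so tooling can check a request against the signed terms. The following is a minimal sketch under assumptions: the `VoiceConsent` class, its field names, and the `permits` method are hypothetical and do not come from any particular product or statute.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class VoiceConsent:
    """Hypothetical consent record; every field name here is illustrative."""
    talent: str
    permitted_uses: frozenset[str]   # e.g. {"dubbing", "translation"}
    languages: frozenset[str]
    territories: frozenset[str]
    valid_from: date
    valid_until: date
    revoked: bool = False

    def permits(self, use: str, language: str, territory: str, on: date) -> bool:
        """Return True only if every scope dimension covers the request."""
        if self.revoked:
            return False
        return (
            use in self.permitted_uses
            and language in self.languages
            and territory in self.territories
            and self.valid_from <= on <= self.valid_until
        )


consent = VoiceConsent(
    talent="Example Talent",
    permitted_uses=frozenset({"dubbing", "translation"}),
    languages=frozenset({"en", "es"}),
    territories=frozenset({"US"}),
    valid_from=date(2025, 1, 1),
    valid_until=date(2025, 12, 31),
)

# A Spanish dub inside the term is in scope; an advertising use is not.
print(consent.permits("dubbing", "es", "US", date(2025, 6, 1)))
print(consent.permits("advertising", "es", "US", date(2025, 6, 1)))
```

A structured record like this complements the signed document and does not replace it; the signed release remains the legal artifact.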

Contracts, licensing models, and negotiation tips

- Common models: per-use license, time-limited term, revenue share, and exclusive buyouts. Pick what matches your risk profile.
- Fee norms: day rates, per-minute royalties, or one-time buyouts. Anchor offers to the intended use, scale, and exclusivity.
- Negotiation tips: cap downstream sublicenses, add reversion on breach, require approval rights for voice alteration, and include indemnity for unauthorized uses.

Recordkeeping and audit trails

- Log consent in human-readable and tamper-evident formats (timestamped PDF, blockchain hash, or secure audit log).
- Save raw recordings, project metadata, and export hashes. Maintain a retention policy and access controls.
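One way to make such a log tamper-evident is to chain entries by hash, so that editing any earlier entry invalidates everything after it. The sketch below uses only the Python standard library; the entry fields, the event names, and the `append_entry` and `verify_chain` helpers are assumptions made for illustration, not a prescribed format.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def append_entry(log: list[dict], event: str, artifact: bytes, timestamp: str) -> dict:
    """Append an entry whose hash also covers the previous entry's hash."""
    prev_hash = log[-1]["entry_hash"] if log else "0" * 64
    body = {
        "event": event,
        "timestamp": timestamp,
        "artifact_sha256": sha256_hex(artifact),  # hash of the file, not the file itself
        "prev_hash": prev_hash,
    }
    # Sorted keys give a stable serialization, so the same body always hashes the same.
    body["entry_hash"] = sha256_hex(json.dumps(body, sort_keys=True).encode("utf-8"))
    log.append(body)
    return body


def verify_chain(log: list[dict]) -> bool:
    """Recompute every hash and check that each entry links to the one before it."""
    prev_hash = "0" * 64
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        expected = sha256_hex(json.dumps(body, sort_keys=True).encode("utf-8"))
        if entry["prev_hash"] != prev_hash or entry["entry_hash"] != expected:
            return False
        prev_hash = entry["entry_hash"]
    return True


log: list[dict] = []
append_entry(log, "consent_signed", b"signed consent PDF bytes", "2025-01-15T10:00:00Z")
append_entry(log, "raw_recording_saved", b"raw audio bytes", "2025-01-15T10:30:00Z")
print(verify_chain(log))  # intact chain

# Simulate an after-the-fact edit on a copy of the log.
tampered = [dict(entry) for entry in log]
tampered[0]["timestamp"] = "2024-01-01T00:00:00Z"
print(verify_chain(tampered))  # edit is detected
```

A hash chain only shows that the log is internally consistent. Anchoring the latest hash somewhere outside your own control (for example with a third-party timestamping service) is what makes backdating harder to dispute.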

Post-release: takedown and rapid response workflow

- Preserve evidence and capture URLs and timestamps.
- Notify internal legal and compliance.
- Issue a cease-and-desist to the host, with proof of infringement.
- Submit platform takedown or DMCA notice where applicable.
- Offer mitigation (credit, correction, or paid license) if appropriate.
- Escalate to litigation counsel if takedown fails.

Technical defenses, detection, and best practices mapped to legal remedies

Watermarking and provenance metadata: prove origin and timing

Voice-locking and consent verification: prevent reuse

Detection signals and limits: what courts will and will not accept

Engineering to meet legal and evidentiary needs

- Log immutable hashes, timestamps, and user IDs for every generation.
- Store signed consent artifacts alongside cloned-model metadata.
- Embed robust watermarks that survive common transforms.
- Export tamper-evident forensic packages for legal teams.
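As a rough illustration of the last item, a forensic package can carry a manifest of file hashes plus a keyed signature over that manifest. This is a sketch under assumptions: the manifest layout, the `build_manifest` and `verify_manifest` helpers, and the sample key are hypothetical, and a real deployment would load the key from a key-management system. HMAC only proves integrity to parties who hold the key; an asymmetric signature would be needed for verification by outside parties.

```python
import hashlib
import hmac
import json


def build_manifest(files: dict[str, bytes], generation: dict, key: bytes) -> dict:
    """Hash every file, then sign the manifest with HMAC-SHA256."""
    manifest = {
        "generation": generation,  # e.g. user ID and timestamp of the generation
        "files": {name: hashlib.sha256(data).hexdigest() for name, data in files.items()},
    }
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"manifest": manifest, "hmac_sha256": signature}


def verify_manifest(package: dict, files: dict[str, bytes], key: bytes) -> bool:
    """Check the signature first, then check each file against its recorded hash."""
    payload = json.dumps(package["manifest"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, package["hmac_sha256"]):
        return False
    recorded = package["manifest"]["files"]
    if set(recorded) != set(files):
        return False
    return all(hashlib.sha256(data).hexdigest() == recorded[name] for name, data in files.items())


key = b"demo-key-load-from-a-secret-store"  # placeholder value for the example only
files = {"output.wav": b"generated audio bytes", "consent.pdf": b"signed consent bytes"}
generation = {"user_id": "user-123", "timestamp": "2025-01-15T11:00:00Z"}

package = build_manifest(files, generation, key)
print(verify_manifest(package, files, key))  # untouched package

altered = dict(files, **{"output.wav": b"re-edited audio bytes"})
print(verify_manifest(package, altered, key))  # altered file is detected
```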

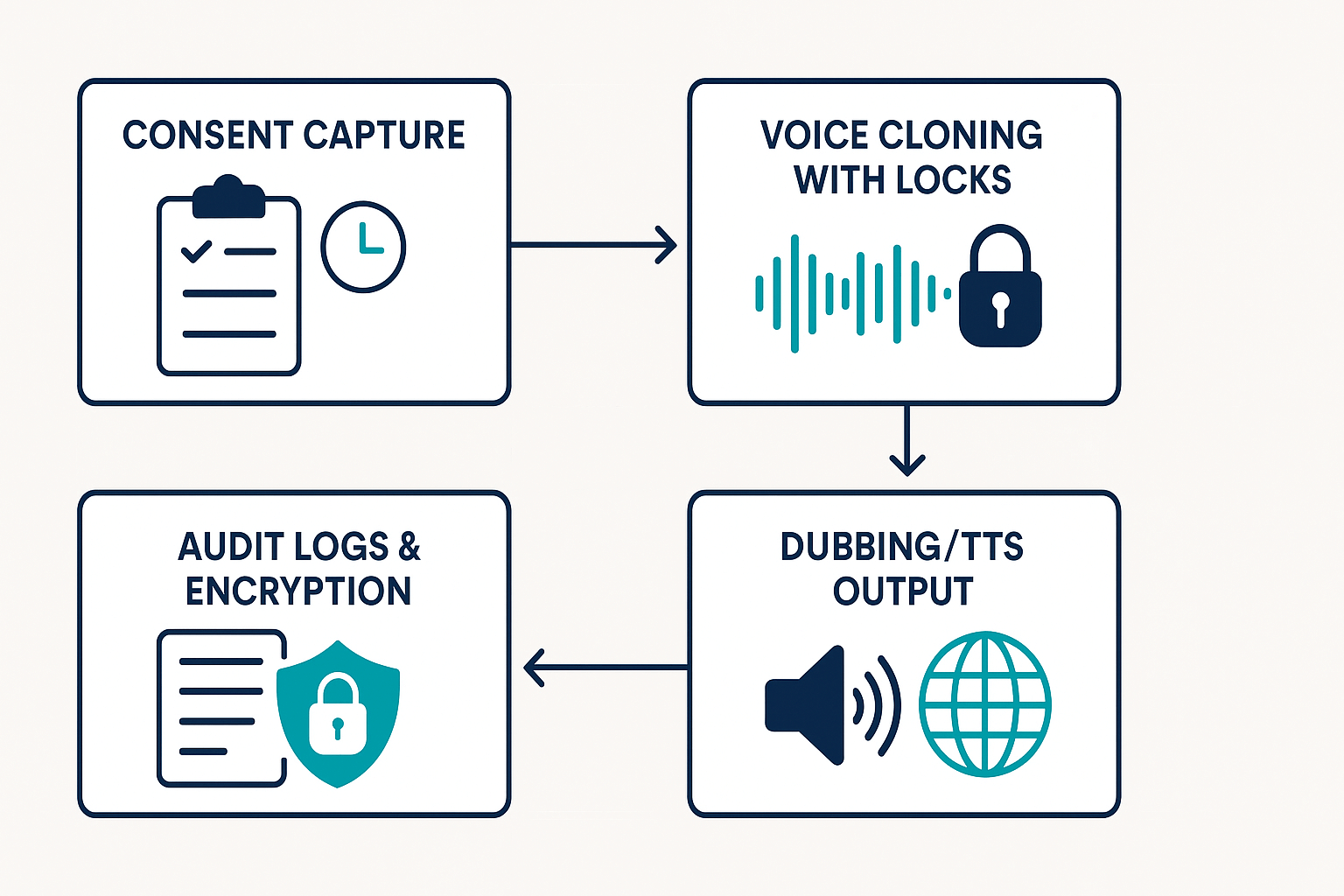

How DupDub supports compliant voice use (product-policy alignment)

Map product features to legal requirements

- Consent capture: Time-stamped consent records, scope fields (use case, territory, duration), and downloadable consent artifacts to prove authorization. This supports rights-of-publicity and privacy notice requirements.
- Locked voice clones: Cloned voices can be locked (export disabled) and tied to a speaker ID, limiting re-use without renewed permission. That helps enforce the contracted scope and reduce misuse risk.
- Encrypted processing and storage: Encryption in transit and at rest helps meet data protection obligations and reduces risk of unauthorized access to voice biometrics.
- Audit logs and provenance: Immutable logs record creation, edits, access, and export events to support takedown responses and litigation-ready evidence.

Enterprise configuration examples

- Consent capture: Hosted consent form or API-driven consent token. Store signer name, IP, timestamp, consent scope, and signed file (PDF/JSON).
- Locked cloned voices: Set clone to "locked" on creation, disable download and external export, and require explicit admin approval to unlock.
- Access controls: SAML-based SSO, role-based access control (RBAC), and least-privilege roles for cloning and export.
- Retention and legal hold: Configurable retention windows for raw audio and consent records; legal hold toggle to preserve data beyond normal retention.
- Audit exports: Exportable CSV/JSON logs for compliance review and eDiscovery.
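The interaction between locking, roles, and legal hold can be sketched in a few lines. Everything below is hypothetical and written only to illustrate the policy logic: the role names, the `VoiceClone` class, and the helper functions are assumptions and do not represent DupDub's API or configuration format.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical least-privilege role table: only admins may export or unlock.
ROLE_PERMISSIONS = {
    "admin": {"clone", "export", "unlock"},
    "producer": {"clone"},
    "reviewer": set(),
}


@dataclass
class VoiceClone:
    speaker_id: str
    created: date
    locked: bool = True       # clones start locked on creation
    legal_hold: bool = False


def allowed(role: str, action: str) -> bool:
    return action in ROLE_PERMISSIONS.get(role, set())


def unlock(clone: VoiceClone, approver_role: str) -> None:
    """Unlocking requires explicit approval from a role with the 'unlock' permission."""
    if not allowed(approver_role, "unlock"):
        raise PermissionError(f"role {approver_role!r} may not unlock clones")
    clone.locked = False


def can_export(clone: VoiceClone, role: str) -> bool:
    """Export needs both an export-capable role and an unlocked clone."""
    return allowed(role, "export") and not clone.locked


def may_purge(clone: VoiceClone, today: date, retention_days: int) -> bool:
    """Data past its retention window may be purged unless a legal hold is set."""
    if clone.legal_hold:
        return False
    return today >= clone.created + timedelta(days=retention_days)


clone = VoiceClone(speaker_id="spk-001", created=date(2025, 1, 1))
print(can_export(clone, "admin"))      # still locked
unlock(clone, approver_role="admin")
print(can_export(clone, "admin"))      # unlocked by an admin
print(can_export(clone, "producer"))   # role lacks the export permission

clone.legal_hold = True
print(may_purge(clone, date(2026, 1, 1), retention_days=90))  # hold overrides retention
```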

Quick compliance alignment checklist

- Capture explicit, auditable consent.
- Limit clones with technical locks.
- Log all actions for provenance.
- Enforce access, retention, and legal hold policies.

FAQ — common questions about celebrity voice rights and AI voice tools

- Can I legally clone a celebrity voice for a project?

  Short answer: usually no, not without clear, written consent. Celebrity voice rights are part of rights of publicity, and many states bar commercial use of a recognizable voice. Next steps: if you have already made content, stop using it, consult the Practical compliance checklist, and get a signed license from the talent.

- What if my voice was cloned without consent? What can I do?

  You can pursue a platform takedown, a DMCA notice where applicable, and state-law claims for misappropriation. Start by preserving evidence, asking the host for removal, and following the takedown steps in the checklist. If the platform stalls, contact counsel and notify the platform's compliance team; see the How DupDub supports compliant voice use section.

- How do platform policies interact with the law on celebrity voice use?

  Platforms add rules on top of the law, meaning content can be removed even if it is not illegal. Always read host rules and keep records of permissions. Use platform policy checks in your pre-publish review.

- What immediate steps help stop harm from a cloned voice?

  Quick checklist: preserve files, request removal from the platform, issue a DMCA or state-law notice, and notify your provider. See the step-by-step takedown workflow in the Practical compliance checklist for creators.

- When should I involve counsel for voice cloning compliance?

  Call counsel before commercial use, when a dispute starts, or if a celebrity claim appears likely. Counsel helps with licensing, takedown responses, and settlement strategy.