TL;DR — What this guide gives you

This guide is a compact playbook for teams that need fast, reliable cut-scene localization. It lays out the core checkpoints, the main technical risks, and where to add AI-assisted tools to speed up workflows. It’s written for developers, localization managers, and producers working on game dubbing.

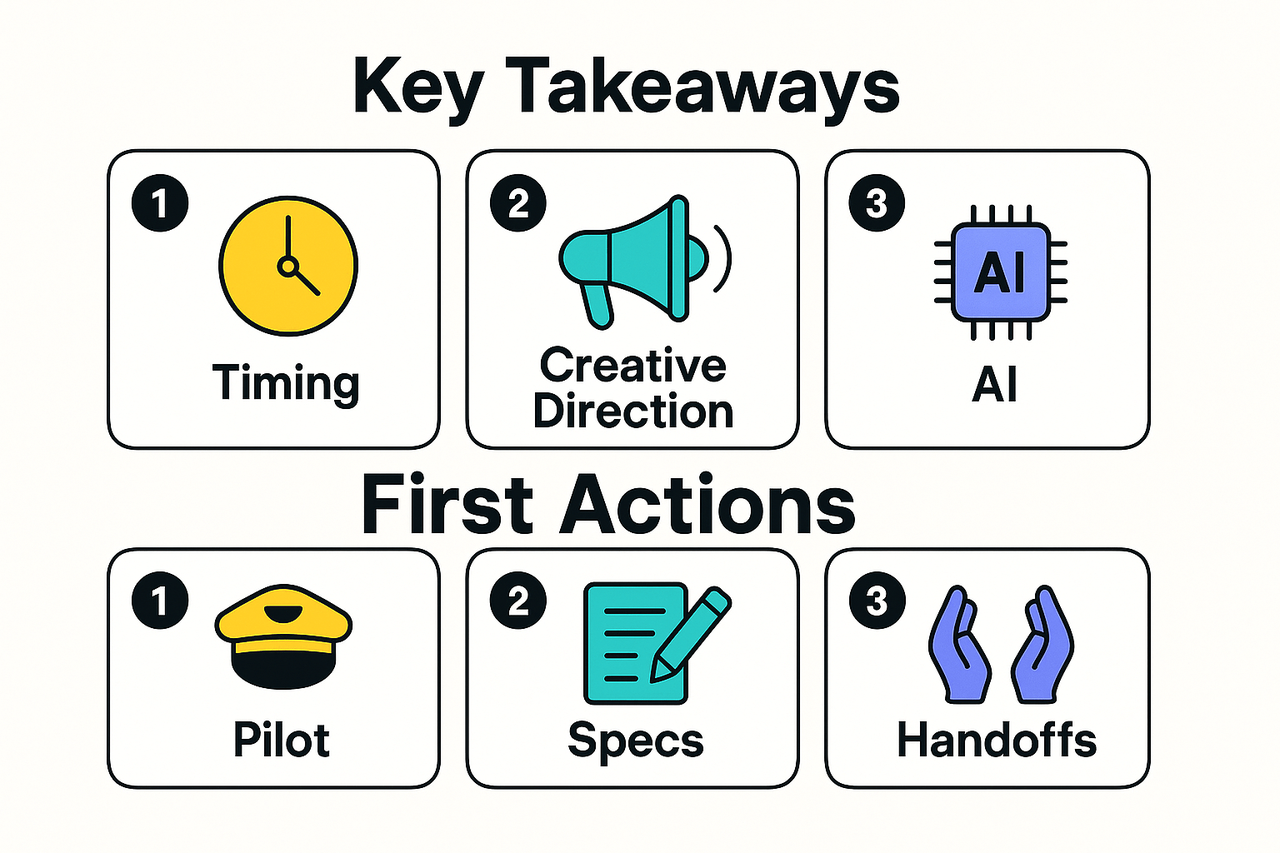

Key takeaways:

- Plan early for lip-sync (phoneme timing), file formats, and engine integration to avoid rework.
- Balance creative direction with technical constraints using clear voice specs and QA gates.
- Use AI tools to cut time on translation, rough dubs, and alignment, but keep human review for performance and cultural nuance.

First actions to take:

- Run a one-scene pilot: localize one high-value cut-scene to test timing, SFX, and subtitles.
- Create a short voice spec sheet and QA checklist for actors and engineers.
- Map handoffs: who delivers scripts, who aligns audio, and who signs off on final builds.

Why cut-scene dubbing matters for player experience and global reach

Good cut-scene dubbing shapes how players feel and follow a story. Game dubbing must match tone, timing, and character, or it snaps players out of a scene. Players often prefer native-language audio for immersion, but only when the performance and sync are convincing.

How dubbing affects players

Dubbing can lift immersion or break it. When voices match mouth movement and acting, players stay focused on plot and emotion. Bad timing, flat delivery, or inconsistent character voices pull attention to the audio, not the story.

Key player impacts:

- Emotional fidelity: natural delivery keeps scenes powerful and believable.
- Narrative continuity: accurate timing and lip-sync preserve pacing.
- Accessibility: localized audio reaches non-readers and casual players.

Why localization quality drives commercial reach

Poor dubbing hurts retention and conversions. Players often abandon games after a weak first hour, and shallow or mismatched dubbing speeds that loss. For publishers, that means lower engagement, weaker reviews, and smaller regional revenue.

Practical takeaway: prioritize casting, timing, and QA. Test dubbed cut-scenes with native speakers early, and measure drop-off in localized builds. Small fixes in voice matching and sync can yield outsized gains in player trust and lifetime engagement.
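The sketch below shows one way to measure drop-off. It is minimal Python that compares cut-scene completion rates across locales. It assumes your telemetry logs one event per cut-scene view, with a locale and a completed/skipped flag. The `SceneEvent` shape, the function names, and the 10-point threshold are illustrative assumptions, not a standard.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class SceneEvent:
    locale: str      # e.g. "en-US", "de-DE"
    scene_id: str    # hypothetical cut-scene identifier
    completed: bool  # False if the player skipped or quit mid-scene


def completion_by_locale(events):
    """Return {locale: share of cut-scene views watched to the end}."""
    views = defaultdict(int)
    finished = defaultdict(int)
    for e in events:
        views[e.locale] += 1
        finished[e.locale] += int(e.completed)
    return {loc: finished[loc] / views[loc] for loc in views}


def flag_locales(rates, baseline_locale, max_drop=0.10):
    """Locales whose completion rate trails the baseline by more than max_drop (absolute)."""
    base = rates[baseline_locale]
    return sorted(
        loc for loc, rate in rates.items()
        if loc != baseline_locale and base - rate > max_drop
    )
```

Suppose a locale trails the source language by a wide margin on the same scenes. That locale is a good candidate for a native-speaker listening pass before you assume the story itself is the problem.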

A brief evolution: from traditional dubbing to AI-assisted game dubbing — and when to choose which

Game dubbing has moved fast. It started with actors in booths, then moved to careful manual alignment, and now it often uses text-to-speech and voice cloning. This short history shows how quality, speed, and creative control trade off, so teams can pick the right approach for each project phase.

Studio ADR and manual alignment: precision and human nuance

Traditional dubbing used ADR (automated dialogue replacement) in a studio. Actors watched footage and re-recorded lines to match lip movement and emotion. Engineers then hand-aligned audio to frames and tweaked timing, which gave high fidelity and natural performance. The downside was cost and time: booking talent, studio hours, and post work add up.

AI tools: TTS, voice cloning, and automated sync

Recent tools generate voices from text, clone actors' voices from short samples, and align audio automatically. These tools can cut turnaround from weeks to hours for many cut-scenes. They handle batch localization in dozens of languages and scale without booking dozens of studio days. But synthetic voices can still miss subtle breaths, micro-timing, or local acting choices.

Quick comparison: quality, speed, and control

| Workflow | Quality | Speed | Creative control | Typical cost |
|---|---|---|---|---|
| Traditional ADR | Very high (natural actor) | Slow (weeks) | Full control (direction) | High |
| AI-only dubbing | Good to very good | Fast (hours to days) | Medium (editing tools) | Low to medium |
| Hybrid (AI + human) | High | Medium | High (human polish) | Medium |

Each row shows the common tradeoffs. Traditional gives the best acting and nuance. AI gives scale and low cost. Hybrid blends both, letting teams hit the sweet spot.

When to pick traditional, AI, or hybrid

- Use traditional ADR when a scene needs star performances, subtle emotion, or sync with live-action faces. Big-budget cinematic scenes fit here.
- Use AI-only for rapid prototyping, early build localization, or large catalogs of short cinematics where costs must stay low. It's ideal for playtests and global QA.
- Use hybrid when you want fast localization with human polish: generate a pass with AI, then hire actors or voice directors to re-record key lines or tweak timing. This saves time and keeps quality.

Choose by phase: prototype and QA lean AI, final cinematic passes favor traditional or hybrid. Also weigh language mix: low-demand languages often fit AI-first workflows, while major markets may justify studio work.

AI platforms are production-ready now, and some, like DupDub, offer voice cloning, automated alignment, and multi-language exports to speed localization while preserving control. The practical choice depends on your budget, timeline, and how critical those cut-scenes are to player experience.

Common challenges & pitfalls in cut-scene dubbing (and how to avoid them)

Cut-scene dubbing can make or break a scene. Good game dubbing keeps players in the moment, but small problems quickly break immersion. This section lists recurring technical, creative, and operational pitfalls and gives clear fixes you can apply today.

Top pitfalls and practical fixes

- Lip-sync drift (phoneme timing slippage): characters' mouths move out of sync with the audio. Fix it by baking phoneme-aligned timing into your pipeline and using frame-accurate markers for each line. Validate alignment in-engine early, not at final QA.
- Cultural mistranslation: jokes, idioms, or references fall flat. Avoid this by using localizers, not only translators, and by running cultural checks with a native reviewer. Keep fallback notes for lines that must remain literal.
- Loss of performance nuance: AI or poor direction flattens emotion. Preserve intent by supplying reference takes, emotion tags, and short direction notes (tempo, emphasis, subtext). Use voice cloning only after voice-match tests with real actors.
- Technical inconsistency across platforms: sample rates, codecs, or lip-sync tools differ. Standardize file specs (WAV, 48 kHz, 24-bit) and enforce a single middleware export format. Automate conversions in CI to avoid manual re-saves.
- QA bottlenecks and rework loops: late discovery of issues kills deadlines. Build a staged QA checklist that includes in-engine spot checks, subtitle sync, and audio spot checks. Tag fixes by severity and assign someone to triage daily.
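One way to automate the file-spec check in CI is a small script. It walks the deliverables folder and rejects anything that is not 48 kHz, 24-bit. This sketch uses only Python's standard `wave` module, and the folder layout is assumed. Note that `wave` reads plain PCM WAV, and Python versions before 3.12 reject WAVE_FORMAT_EXTENSIBLE headers. Such files are reported as unreadable rather than silently passed.

```python
import wave
from pathlib import Path

EXPECTED_RATE_HZ = 48_000  # house spec from the text: 48 kHz
EXPECTED_SAMPWIDTH = 3     # 24-bit PCM is 3 bytes per sample


def check_wav(path):
    """Return a list of spec problems for one WAV file (empty list = pass)."""
    try:
        with wave.open(str(path), "rb") as w:
            rate, width = w.getframerate(), w.getsampwidth()
    except (wave.Error, EOFError) as exc:
        return [f"{path}: unreadable as PCM WAV ({exc})"]
    problems = []
    if rate != EXPECTED_RATE_HZ:
        problems.append(f"{path}: {rate} Hz, expected {EXPECTED_RATE_HZ} Hz")
    if width != EXPECTED_SAMPWIDTH:
        problems.append(f"{path}: {width * 8}-bit, expected {EXPECTED_SAMPWIDTH * 8}-bit")
    return problems


def check_tree(root):
    """Check every .wav file under root (recursively) and collect all problems."""
    problems = []
    for path in sorted(Path(root).rglob("*.wav")):
        problems.extend(check_wav(path))
    return problems
```

In a CI job, you could fail the build whenever `check_tree("deliverables/")` returns a non-empty list. Print the problems so the sound team knows which files to re-export.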

Legal and ethical guardrails

Governance matters: follow regional rules for AI voice use, licensing, and consent. For example, the EU's regulation on artificial intelligence (the AI Act) entered into force on 1 August 2024, creating a risk-based legal framework for AI systems. Lock voice-clone consent, log approvals, and store consent records with each asset.
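Storing consent with each asset is easier to enforce when a build step checks it. The sketch below assumes a hypothetical manifest. In it, every cloned line references a voice model ID, and every model ID has a consent record listing the locales the talent approved. The record fields are illustrative, not a legal template.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ConsentRecord:
    voice_model_id: str   # hypothetical ID of the cloned voice model
    talent_name: str
    signed_on: date
    locales: frozenset    # locales the talent agreed to be cloned in
    revoked: bool = False


@dataclass(frozen=True)
class ClonedAsset:
    asset_id: str         # e.g. a line ID such as "cs01_l012"
    voice_model_id: str
    locale: str


def unconsented_assets(assets, records):
    """Return asset IDs whose voice clone lacks an active consent record for that locale."""
    by_model = {r.voice_model_id: r for r in records}
    missing = []
    for a in assets:
        rec = by_model.get(a.voice_model_id)
        if rec is None or rec.revoked or a.locale not in rec.locales:
            missing.append(a.asset_id)
    return missing
```

Running this before each build keeps consent checks in the same loop as other asset checks. Revoked or out-of-scope clones then block the build instead of surfacing after release.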

Short checklist: run early sync tests, pick localizers over literal translators, keep human performers in the loop, and automate file standard checks. These steps cut rework and keep cut-scenes feeling native and cinematic.

Pitfall fixes in more detail

The fixes below expand on the pitfalls above with action-first steps. Use them to cut rework and keep cinematic moments believable.

Lock lip-sync timing early

Lip-sync drift happens when audio timing slips from the animation. Lock phoneme-level timing (matching sound units to mouth shapes) during SGS (source-graphics-sync) passes. Use viseme mapping, apply short time-stretches only when needed, and test in the engine at target frame rates.
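Testing at target frame rates is easier with a number to track. This sketch assumes you already have paired onset times, in seconds. Each pair is a key mouth shape in the animation and the matching phoneme in the dubbed audio, for example from a forced aligner. The sketch converts the gaps to frames. The 2-frame tolerance is an assumed default, not an industry rule.

```python
from statistics import mean


def drift_report(anim_onsets_s, audio_onsets_s, fps=30.0, tolerance_frames=2.0):
    """Summarize lip-sync drift between paired onsets, measured in frames.

    anim_onsets_s: when each key mouth shape starts in the animation (seconds)
    audio_onsets_s: when the matching phoneme starts in the dubbed audio (seconds)
    Positive offsets mean the audio lands after the mouth shape.
    """
    if not anim_onsets_s or len(anim_onsets_s) != len(audio_onsets_s):
        raise ValueError("need non-empty onset lists paired one-to-one")
    offsets = [(audio - anim) * fps for anim, audio in zip(anim_onsets_s, audio_onsets_s)]
    worst = max(offsets, key=abs)
    return {
        "mean_abs_frames": mean(abs(o) for o in offsets),
        "worst_frames": worst,
        "passes": abs(worst) <= tolerance_frames,
    }
```

The same time offset counts as more frames at higher frame rates, so run the report at each platform's target rate.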

Avoid cultural mistranslation with local reviewers

Literal translation can break character or humor. Build glossaries, tone guides, and a cultural notes doc for each locale. Run final lines past native reviewers and do a transcreation pass for jokes, idioms, and lore.
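Glossary checks are easy to automate as a first filter before native review. This minimal sketch flags lines where a locked source term appears but the approved translation is missing. The glossary entries are hypothetical, and plain substring matching will miss inflected forms. Treat hits as prompts for a reviewer rather than as errors.

```python
def glossary_violations(lines, glossary):
    """Flag lines where a locked source term appears but its approved translation does not.

    lines: list of (line_id, source_text, target_text)
    glossary: {source_term: approved_target_term} for one locale
    Matching is case-insensitive substring search, so inflected forms
    (common in languages such as German or Finnish) still need manual review.
    """
    issues = []
    for line_id, src, tgt in lines:
        for term, approved in glossary.items():
            if term.lower() in src.lower() and approved.lower() not in tgt.lower():
                issues.append((line_id, term, approved))
    return issues
```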

Preserve performance nuance, don’t replace it blindly

Automated voices can flatten emotion if used without care. For key characters, record reference performances and consider hybrid dubbing: synthetic for bulk, local actors for critical scenes. Use voice direction notes and A/B tests to verify emotional match.

Remove QA bottlenecks with staged checks

QA delays often come from late fixes and unclear responsibilities. Create staged checkpoints: script QA, pre-ADR dry runs, in-engine sync review, and final playthrough QA. Use a short checklist per pass so teams know what to sign off on.

Prevent pipeline chaos with strict file and version rules

Missing or misnamed files stall build engineers and sound teams. Standardize formats (48 kHz/24-bit WAV for game audio), naming, and timecode. Automate exports and keep alternate takes in a labeled folder structure for fast rollback.
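Naming rules only help if they are enforced. The sketch below assumes a hypothetical `<scene>_<line>_<locale>_<take>.wav` convention, for example `cs01_l012_de-DE_t03.wav`. It uses a regular expression to list files that break that convention. Adapt the pattern to your studio's actual scheme.

```python
import re

# Hypothetical house convention: <scene>_<line>_<locale>_<take>.wav
# e.g. cs01_l012_de-DE_t03.wav
NAME_PATTERN = re.compile(
    r"^(?P<scene>cs\d{2})_(?P<line>l\d{3})_(?P<locale>[a-z]{2}-[A-Z]{2})_(?P<take>t\d{2})\.wav$"
)


def parse_name(filename):
    """Return the name's fields as a dict, or None if it breaks the convention."""
    m = NAME_PATTERN.match(filename)
    return m.groupdict() if m else None


def misnamed(filenames):
    """List files that do not follow the convention."""
    return [f for f in filenames if parse_name(f) is None]
```

The take number is part of the name, so alternate takes sort next to each other. Rolling back to an earlier take then means picking a different file.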

Apply these controls together: they cut rework, protect performance, and speed localization. Follow legal and consent best practices for voice rights and AI use in your contracts to lower risk.

Best practices for dubbing cinematic cut-scenes (step-by-step)

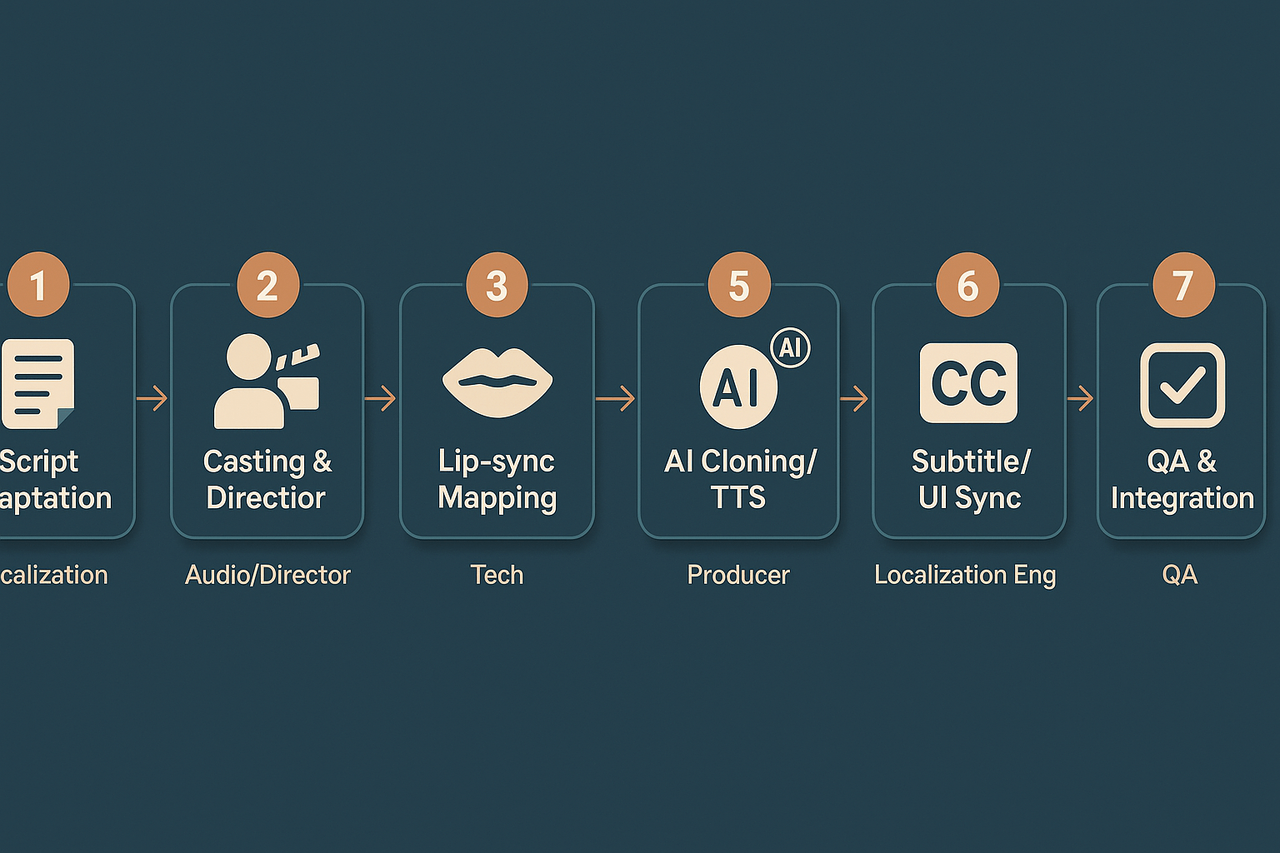

This step-by-step playbook gives teams a clear path from translated script to final in-engine assets. It shows who owns each task, what deliverables to expect, and where to use AI tools like DupDub to speed iteration while keeping creative control. Use it as a checklist while you plan your cut-scene localization for game dubbing.

Step 1: Adapt the script for timing and performance

Owner: Localization lead and narrative writer. What to deliver: Time-stamped adaptation, line-level notes, and SRT draft. Why it matters: A literal translation rarely fits lip-sync or UI space. Localizers must shorten or expand lines to match timing and context. Start with a time-stamped script so audio teams know exact cue points. Use DupDub to create quick translated drafts and auto-align lines to the original timing for review.
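Generating the SRT draft from a time-stamped script is mostly timestamp formatting. Here is a minimal sketch. It assumes each adapted line is stored as a `(start_seconds, end_seconds, text)` tuple, and the function names are illustrative.

```python
def srt_timestamp(seconds):
    """Format seconds as an SRT timestamp HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(cues):
    """cues: list of (start_s, end_s, text) in playback order -> SRT string."""
    blocks = []
    for i, (start, end, text) in enumerate(cues, start=1):
        if end <= start:
            raise ValueError(f"cue {i} ends before it starts")
        # SRT block: index, timing line, text, then a blank line between blocks
        blocks.append(f"{i}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n")
    return "\n".join(blocks)
```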

Step 2: Prepare casting and direction briefs

Owner: Audio producer and voice director. What to deliver: Casting slate, direction brief, reference voice clips, paid talent contract templates. Why it matters: Clear direction saves re-records. Specify tone, intensity, age, and pacing per line. Use AI voice demos to audition choices quickly. DupDub can generate demo voices or clones from a short sample, letting directors audition in target languages before hiring.

Step 3: Map phonemes and plan lip-sync

Owner: Technical audio lead and animation engineer. What to deliver: Phoneme-to-viseme map, timing CSV, optional FACS (Facial Action Coding System) notes. Why it matters: Lip-sync needs phoneme timing, not just subtitles. Create a mapping file that maps each target language's phonemes to the rig's visemes (mouth shapes). Export phoneme timings from TTS or STT tools for accurate in-engine viseme triggers. DupDub can provide phoneme-aligned output for faster mapping and iteration.
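You can prototype the phoneme-to-viseme step in a few lines. This sketch assumes a timing CSV with `line_id`, `phoneme`, `start_s`, and `end_s` columns. It uses a tiny, hypothetical ARPAbet-style table; a production table would cover every phoneme in each target language and match your rig's viseme set. Unmapped phonemes fall back to a rest pose, and back-to-back identical visemes merge into one key.

```python
import csv
import io

# Hypothetical, heavily reduced phoneme-to-viseme table (ARPAbet-style symbols).
# A real rig defines its own viseme set, and each target language needs its own table.
PHONEME_TO_VISEME = {
    "P": "MBP", "B": "MBP", "M": "MBP",
    "F": "FV", "V": "FV",
    "AA": "AA", "AE": "AA", "AH": "AA",
    "IY": "EE", "IH": "EE",
    "UW": "OO", "OW": "OO",
    "SIL": "REST",
}


def visemes_from_csv(csv_text, default="REST"):
    """Turn phoneme timing rows into viseme keys, merging back-to-back duplicates.

    Expects columns: line_id, phoneme, start_s, end_s (an assumed layout).
    Returns a list of (line_id, viseme, start_s, end_s).
    """
    keys = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        vis = PHONEME_TO_VISEME.get(row["phoneme"].upper(), default)
        start, end = float(row["start_s"]), float(row["end_s"])
        if keys and keys[-1][0] == row["line_id"] and keys[-1][1] == vis:
            line_id, v, s, _ = keys[-1]
            keys[-1] = (line_id, v, s, end)  # extend the previous key instead of adding one
        else:
            keys.append((row["line_id"], vis, start, end))
    return keys
```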

Step 4: Use AI-assisted cloning and TTS for iterations

Owner: Localization producer and temp casting team. What to deliver: Voice-clone proofing files, multiple emotion takes, rough stems. Why it matters: AI lets you test performance and timing fast. Use synthetic demos to validate timing, emotion, and lip-sync before booking expensive studio time. Keep humans in the final loop: use DupDub for prototypes and client approvals, then replace or polish with human takes where needed.

Step 5: Tune emotion and pacing per take

Owner: Voice director and audio editor. What to deliver: Emotion map, preferred takes, mastered clips. Why it matters: Emotion drives player immersion. Annotate lines with intensity and pacing. Create short reference clips showing ideal deliveries. AI systems can generate variants to A/B test which emotions read best in context. Use those variants to guide voice actors and to speed approval.
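When A/B tests use small listener panels, check whether a preference is more than noise. This sketch runs an exact two-sided sign test, a binomial test against a 50/50 split, using only the standard library. It assumes each listener picks one variant and that ties are discarded first.

```python
from math import comb


def sign_test_p(prefer_a, prefer_b):
    """Exact two-sided binomial (sign) test against a 50/50 null; drop ties beforehand."""
    n = prefer_a + prefer_b
    k = min(prefer_a, prefer_b)
    # Probability of a split at least this lopsided in one direction, doubled for two sides
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)
```

For example, if 9 of 10 listeners prefer variant A, the test gives p ≈ 0.021. A 6 to 4 split gives p ≈ 0.75, which is no real evidence either way.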

Step 6: Sync subtitles, UI, and accessibility

Owner: Localization engineer and UX lead. What to deliver: Final SRT, in-game subtitle files, reading-speed notes, UI overflow tests. Why it matters: Subtitles must match spoken lines and on-screen UI space. Check reading speed and overflow on different resolutions. DupDub’s subtitle auto-generation and alignment tools can export SRTs that match final audio timings, cutting manual sync time.
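Reading-speed and overflow checks are simple arithmetic per cue. This sketch assumes cues are available as `(cue_id, start_s, end_s, text)` tuples. The limits of 17 characters per second and 42 characters per line are assumed defaults. Subtitle style guides vary by language, platform, and audience.

```python
def reading_speed_issues(cues, max_cps=17.0, max_line_chars=42):
    """Flag subtitle cues that read too fast or overflow a line.

    cues: list of (cue_id, start_s, end_s, text); text may contain '\n' line breaks.
    max_cps and max_line_chars are assumed defaults; set them from your style guide.
    """
    issues = []
    for cue_id, start, end, text in cues:
        duration = end - start
        chars = len(text.replace("\n", ""))
        cps = chars / duration if duration > 0 else float("inf")
        if cps > max_cps:
            issues.append((cue_id, f"{cps:.1f} chars/s"))
        longest = max(len(line) for line in text.split("\n"))
        if longest > max_line_chars:
            issues.append((cue_id, f"line of {longest} chars"))
    return issues
```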

Step 7: Run QA, iterate, and package deliverables

Owner: Localization QA lead and audio masterer. What to deliver: Final WAV stems, MP4 comps, phoneme timing CSV, SRT, and a completed Localization QA checklist. Why it matters: QA prevents costly rework. Test in-engine, watch lips, confirm line context, and validate UI fits. Use batch exports to produce comps for stakeholders. Drive line-level checks and signoff from a single Localization QA checklist.

Quick summary: who delivers what

- Localization lead: adapted, time-stamped script, SRT draft.
- Audio producer: casting slate, direction brief, temp voice files.
- Tech audio/animation: phoneme-viseme map and timing CSV.
- Voice director/editor: emotion map, selected takes, mastered stems.
- QA lead: in-engine checks, bug list, final asset package.

Keep creative control by using AI as a drafting tool. Iterate fast with DupDub, but lock final performance with human direction or studio captures. A clear owner and a single QA checklist are the keys to shipping polished cinematic cut-scenes on time.

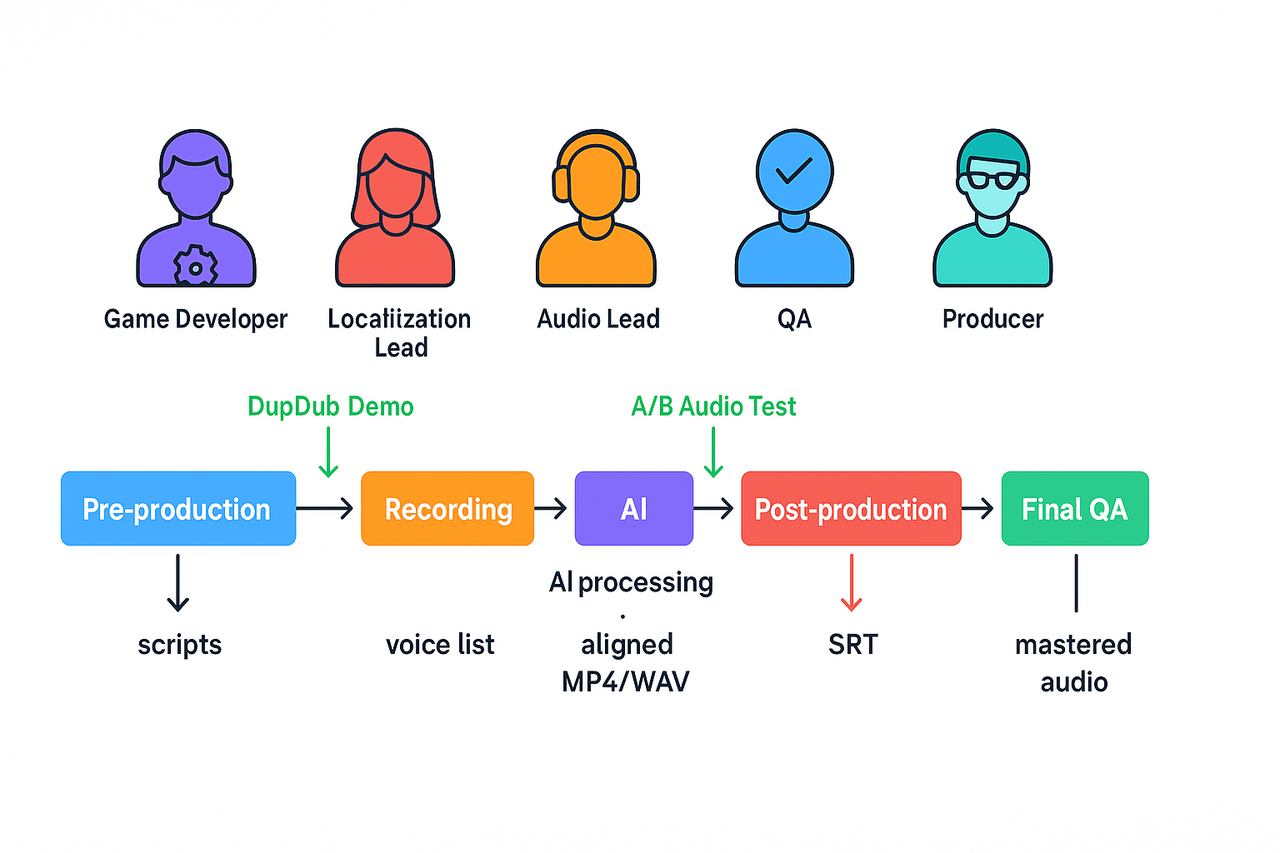

Implementation workflow: timelines, roles, and deliverables

This practical timeline maps pre-production to final QA so teams can ship dubbed cut-scenes on time. It covers milestones, review gates, and where to run DupDub demos and A/B audio samples. Use it to set clear deliverables for game dubbing and to avoid last-minute surprises.

Pre-production: plan, script, and assets

Set a firm script freeze date and lock shot lists. Confirm target languages, voice specs, and phoneme notes. Milestones:

- Script localization ready, day 0. Deliverable: localized scripts and line IDs.
- Voice sourcing complete, day 3. Deliverable: voice list and legal releases.
- Temp audio pass, day 7. Deliverable: guide track for timing.

Recording and capture: record clean takes

Schedule sessions by language and time zone. Capture reference footage with clapper metadata and timecode. Review gates:

- Approve first 10 minutes of recorded lines.
- Run a DupDub demo on a small scene to test style and timing.
- Embed A/B audio samples for stakeholder signoff.

AI-assisted processing: align, clone, and iterate

Upload raw audio and video to DupDub for alignment and voice cloning. Steps:

- Auto-transcribe and generate phoneme timing.
- Apply cloned or TTS voices and check lip-sync.
- Export alternate takes for A/B testing. Deliverable: aligned WAV/MP4 and SRT files.

Post-production and final QA: polish and certify

Mix, add SFX, and master to each platform's loudness target. Trigger CI-style QA checks: subtitle sync, keyword search, and automated lip-sync reports. Run test builds on target platforms and collect QA signoff before certification.

Roles and RACI snapshot

- Producer: Responsible for schedule and approvals, Accountable for delivery.
- Localization lead: Responsible for translation accuracy, Consulted on tone.
- Audio lead: Responsible for capture and mix, Accountable for final audio.
- Dev/Build engineer: Responsible for integration, Informed on releases.
- QA: Responsible for runbook checks and bug reporting, Consulted on cert readiness.

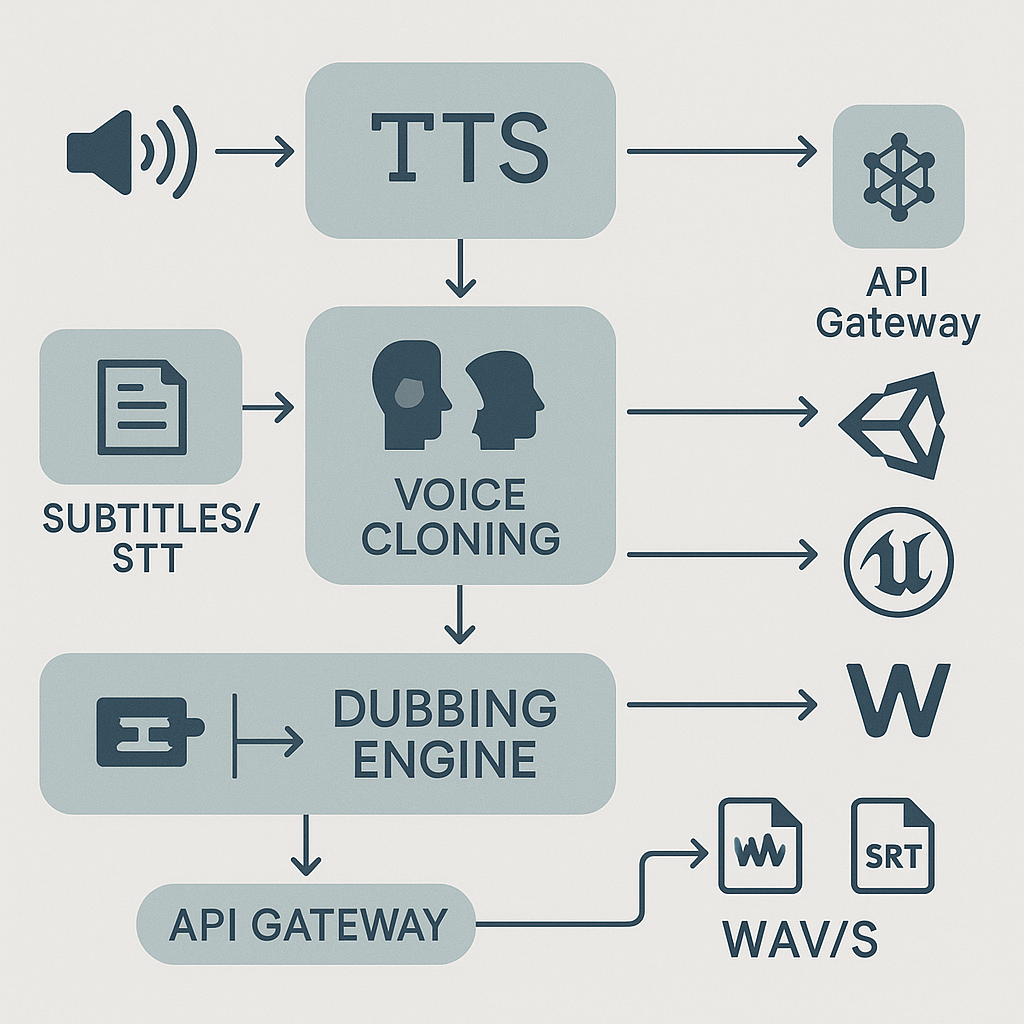

Technical setup: file formats, tools, and middleware for seamless integration

Start with sensible audio basics to avoid rework. For professional game dubbing, record and deliver 48 kHz files with 24-bit depth where possible. Use consistent naming, stems, and timecode so teams can swap localized audio quickly.

Audio specs and formats

Use 48 kHz sample rate and 24-bit depth for masters. Use WAV (PCM) for delivery and archival, and high-bitrate MP3 for temporary review. The 48 kHz rate follows AES5, the AES recommended practice for professional digital audio covering preferred sampling frequencies.

Subtitle and timing

Export subtitles as SRT for simple workflows, and VTT if you need browser compatibility. Provide a timecoded transcript (frame-accurate timestamps) and a phoneme-aligned CSV for tight lip-sync. Include both source language cues and localized timing adjustments.
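Converting SRT to WebVTT is mostly mechanical. WebVTT needs a `WEBVTT` header and uses a period instead of a comma before the milliseconds. This minimal sketch handles only that. It does not convert SRT `<font>` tags or add WebVTT cue settings.

```python
import re

# Matches an SRT timestamp such as 00:00:01,500 and captures both halves
_SRT_TIME = re.compile(r"(\d{2}:\d{2}:\d{2}),(\d{3})")


def srt_to_vtt(srt_text):
    """Convert SRT text to WebVTT: add the WEBVTT header and use '.' before milliseconds.

    Only timing lines are rewritten, so commas inside dialogue are left alone.
    SRT cue numbers are kept; WebVTT accepts them as cue identifiers.
    """
    out = ["WEBVTT", ""]
    for line in srt_text.replace("\r\n", "\n").split("\n"):
        if "-->" in line:
            line = _SRT_TIME.sub(r"\1.\2", line)
        out.append(line)
    return "\n".join(out)
```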

Middleware integration (Wwise, FMOD, Unity, Unreal)

Surface localized audio via middleware virtual banks or platform-specific audio tables. In Wwise, create language-specific SoundBanks and use events per scene. In FMOD, use multi-language banks and parameterize voice lines for runtime switching. For Unity, ship localized AudioClips plus SRT assets and use Addressables. In Unreal, store localized cues in the Localization Dashboard and map to dialogue assets.

DupDub module mapping and handoffs

- Voice cloning: deliver voice model ID and sample assets to audio lead.
- Dubbing (AI re-voicing): export WAV stems, SRT and timing CSV for post-sync.
- STT/transcription: provide timecoded transcripts for QA and subtitle generation.
- Subtitles: export translated SRT/VTT and frame-accurate timing files.
- API: use for batch exports, programmatic pulls, and CI automation.

AI vs. Traditional dubbing: when to choose which (comparison + cost/time tradeoffs)

Choose the model that fits creative needs, schedule, and scale. This short guide compares full traditional dubbing, pure AI dubbing, and hybrid approaches. It shows likely turnaround, creative control, and cost tradeoffs for cinematic scenes and longer cut-scene batches.

Quick comparison table

| Model | Creative control | Turnaround | Estimated cost per minute | Best for |
|---|---|---|---|---|
| Full traditional dubbing | Highest: human actors, director notes, nuance | Weeks to months | $300 to $2,000+ | Triple-A cinematic, major franchises |
| Pure AI dubbing | Lower: fast, limited custom nuance | Hours to days | $5 to $150 | Large volume, tight budgets, prototypes |
| Hybrid (AI + human polish) | High: AI draft, human tuning | Days to weeks | $50 to $600 | Mid-size titles, episodic content |
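To turn the per-minute ranges into a rough budget, multiply by the number of localized minutes: cut-scene minutes times languages. This sketch assumes cost scales linearly. It ignores fixed costs such as casting, studio setup, and QA passes, so treat the output as a planning range only.

```python
# Per-minute ranges (USD) taken from the comparison table above.
COST_PER_MINUTE = {
    "traditional": (300, 2000),  # the table lists "$2,000+", so the high end is a floor
    "ai": (5, 150),
    "hybrid": (50, 600),
}


def budget_range(model, minutes, languages):
    """Return (low, high) USD for dubbing `minutes` of cut-scenes into `languages` locales."""
    low, high = COST_PER_MINUTE[model]
    localized_minutes = minutes * languages
    return low * localized_minutes, high * localized_minutes
```

Take a 60-minute cinematic set in five languages: `budget_range("hybrid", 60, 5)` returns `(15000, 180000)`. That shows how wide the hybrid band is before you pin down how many lines need human polish.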

How to pick

Start with scope: how many minutes and languages. If you need exact lip match and actor emotion, pick full traditional. If you need speed across many languages, AI works well. If you want both scale and quality, use hybrid: AI drafts every line, then human actors or editors fix key scenes.

When to escalate to re-records

- If player tests flag poor lip sync or wrong emotion, plan a re-record.
- If translation changes timing by more than 15 percent, re-check performance.
- If a character voice becomes a brand asset, invest in full human casting.
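The 15 percent rule is easy to check automatically once you have durations for the source line and its localized take, for example from TTS drafts. In this sketch, the threshold and the `{line_id: (source_s, target_s)}` input shape are assumptions to adapt.

```python
def timing_change(source_s, target_s):
    """Relative change in spoken duration of a localized line vs. the source."""
    return (target_s - source_s) / source_s


def lines_to_recheck(durations, threshold=0.15):
    """durations: {line_id: (source_seconds, target_seconds)}.

    Returns line IDs whose localized take is more than `threshold` longer or shorter.
    """
    return sorted(
        line_id for line_id, (src, tgt) in durations.items()
        if abs(timing_change(src, tgt)) > threshold
    )
```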

Case studies and example workflows (real & hypothetical)

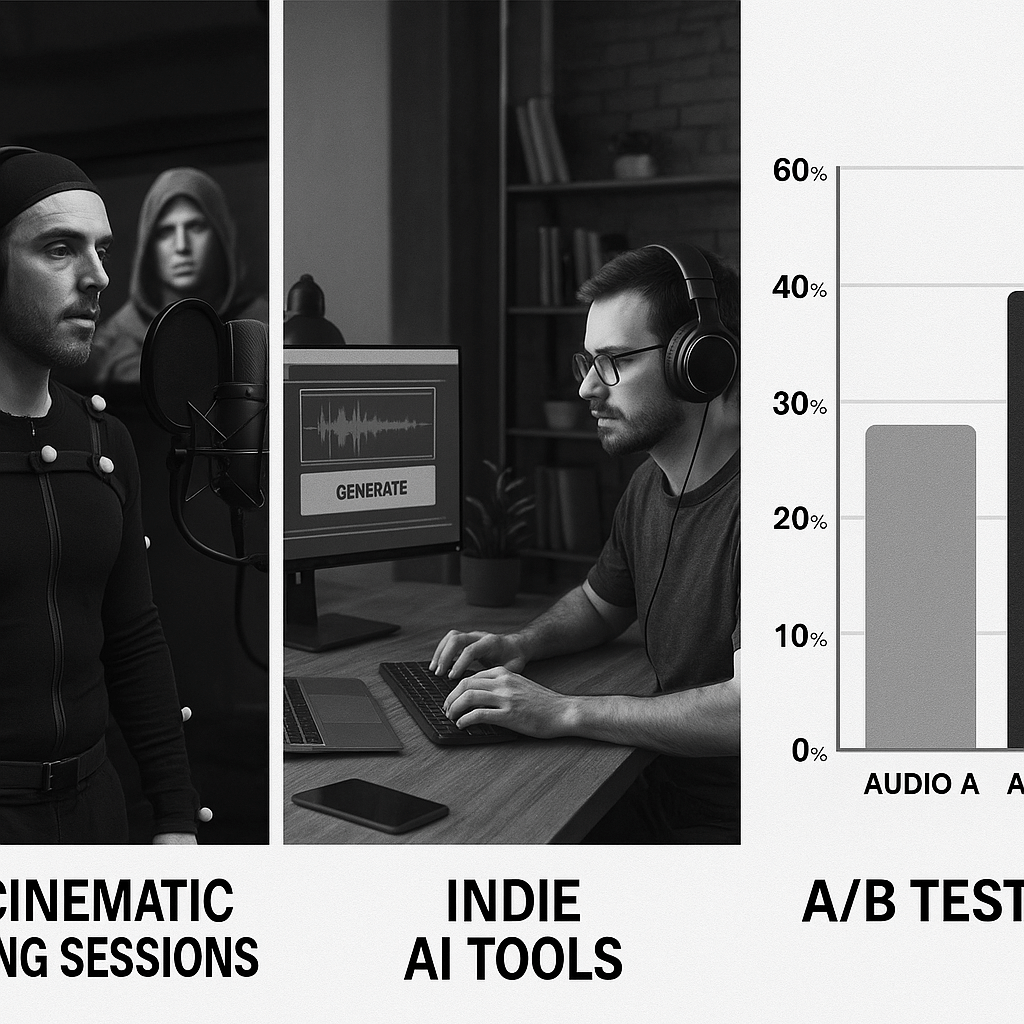

This section gives three compact, repeatable workflows teams can copy. It shows how game dubbing scales from AA/AAA pipelines to lean indie shops, and how A/B audio tests can measure the value of each approach. Read short objectives, step lists, and measurable outcomes for each example.

AA/AAA success story: global cinematic rollout

Objective: Localize a 60-minute cinematic set with consistent performance.

Steps taken:

- Lock script and temp subtitles.
- Cast and record principal actors in target markets.
- Use phoneme-aligned ADR (automated dialogue replacement) and a mix pass.
- Run a final QA pass with the localization lead, including in-engine sync checks.

Measurable outcomes:

Indie studio walkthrough using DupDub end-to-end

Objective: Localize a 12-minute narrative trailer on a tight budget.

Steps taken:

- Transcribe with DupDub and auto-translate the script.
- Generate voice clones or pick TTS voices in DupDub.
- Auto-align audio to subtitles, then export WAV/MP4 and SRT.
- Run a quick QA pass and ship localized builds.

Measurable outcomes:

A/B before/after audio demos

Objective: Measure lift from manual to AI-assisted dubbing.

Steps taken:

- Create a baseline dub, then produce an AI-assisted version.
- Run blind listener tests for lip-sync and emotional match.

Measurable outcomes:

FAQ — Practical answers to teams’ most common questions

- How accurate is AI lip-sync for game dubbing?

AI lip-sync works well for many cut-scenes when you supply clean audio and aligned transcripts. It maps phonemes to mouth shapes (visemes), but small timing tweaks are usually still needed. Test your own scenes with a short demo and a QA checklist to confirm quality.

- Is voice cloning for game dubbing allowed, and how do we handle consent?

Get written consent or a talent release before cloning any voice. Limit clone use, log approvals, and restrict access to authorized staff. Follow your studio's legal rules and run a sample clone in a controlled demo environment.

- What are localization QA best practices for cut-scene dubbing?

Run three passes: linguistic accuracy, timing and lip-sync, then in-engine playtests. Use native reviewers for performance notes and audio leads for mix checks. Keep a QA checklist for each language.

- When should we re-record versus fix lines with AI?

Re-record when performance, accent, or emotional nuance fails to meet the brief. Use AI fixes for timing adjustments and small pickup lines, or when budget and schedule are tight. Start with a quick audition or DupDub trial to compare cost and time tradeoffs.