TL;DR: Quick answers and action checklist

Want the short answer to "is voice cloning legal?" It depends on where you are and how you use it. Many places allow cloning for consensual, non-deceptive uses. Illegal uses include fraud, impersonation, and violations of publicity or privacy rules.

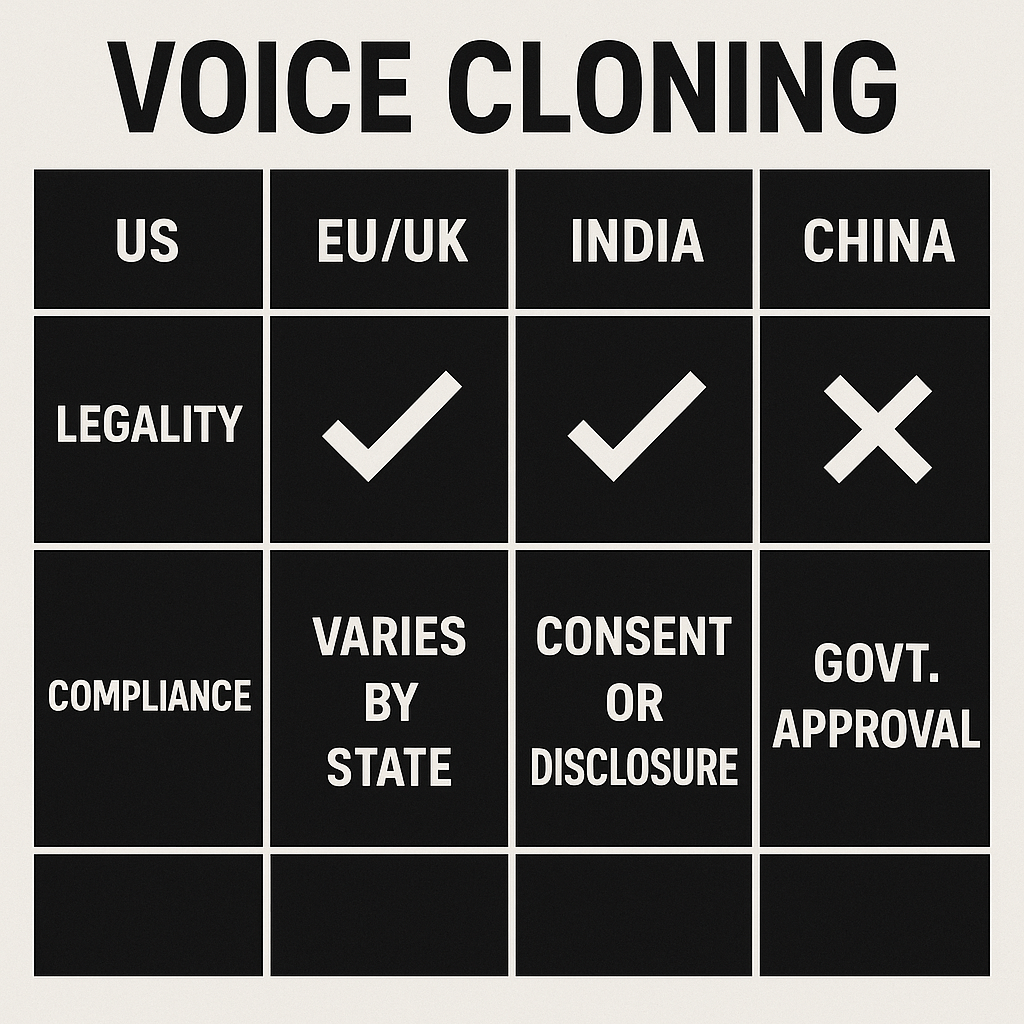

Regional snapshot: The US permits many uses but enforces publicity and fraud laws. EU and UK require clear consent and GDPR data safeguards. India has evolving, patchwork rules and variable enforcement. China enforces strict content controls and authentication requirements.

Top legal risks:

- Consent and data protection.
- Publicity and voice personality rights.
- Fraud, impersonation, and deepfake rules.
- Ownership of original recordings.
- Vendor and contract liability.

Five-step starter checklist:

- Get signed, recorded consent that lists permitted uses.
- Log provenance: keep originals, timestamps, and audit trails.
- Apply technical safeguards like watermarking and metadata tags.
- Limit distribution and add clear disclaimers on synthetic audio.
- Vet vendors for encryption, immutable logs, and deletion policies.

Next actions: prioritize consent for any public use, add technical watermarking, and run a vendor security check before launch.

What is voice cloning today? (short tech primer)

Voice cloning copies a real person’s voice so software can speak new words that sound like them. If you searched "is voice cloning legal", you probably want to know how the tech works and why it matters for creators. This short primer explains the data needs, core model types, and common workflows creators and teams use today.

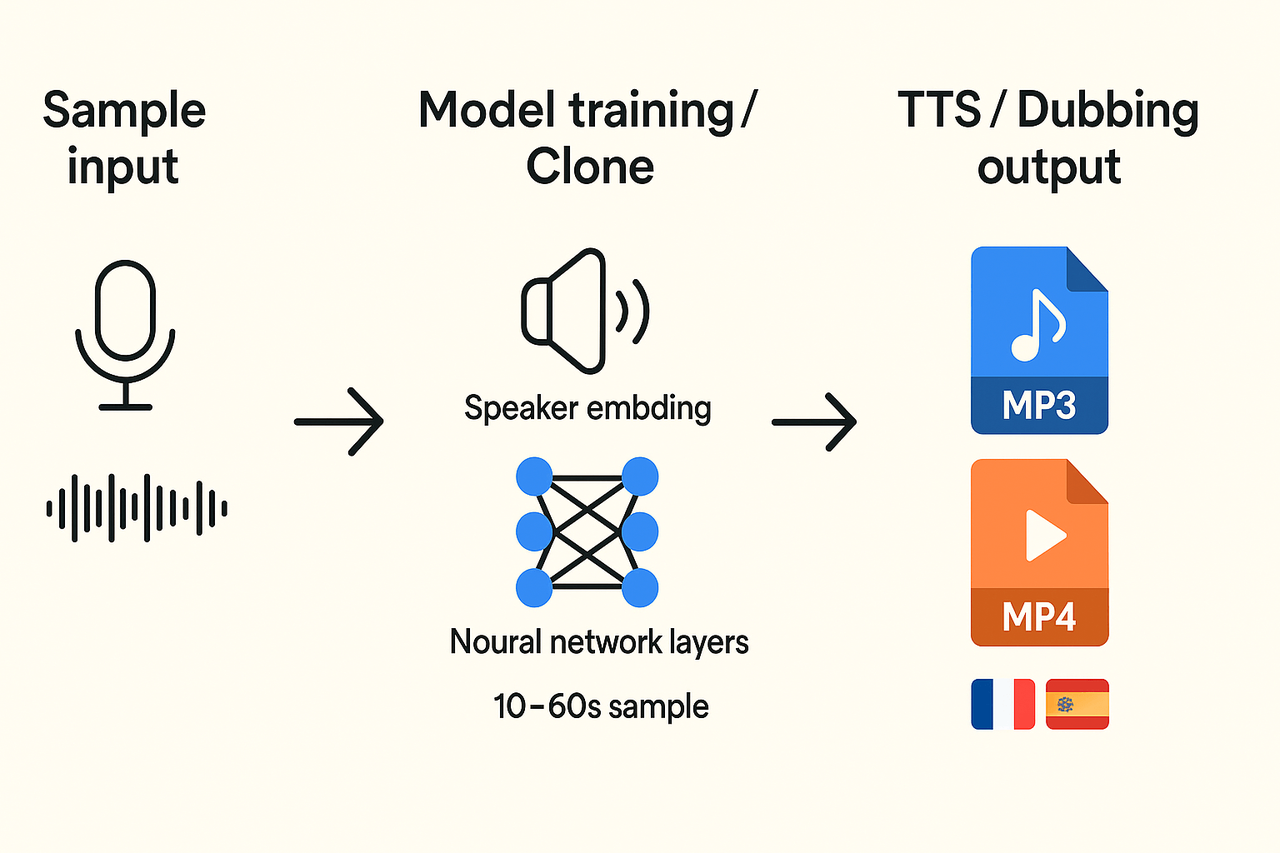

How it works: samples and training

Modern cloning starts by extracting voice features from an audio sample. Systems need a clean recording, often between 10 and 60 seconds, for a usable clone. More audio gives more natural results. The pipeline usually has two steps: a speaker encoder that captures voice traits, and a text-to-speech (TTS) engine that generates speech in that voice. A vocoder (software that turns spectrograms into sound) then produces the final audio.

Common model types

- Speaker-conditioned TTS: the model takes text plus a speaker embedding (the voice fingerprint). This is the most common approach.
- Voice conversion: it morphs one recording into another voice, useful for style transfer.
- Diffusion and sequence models: newer systems use iterative generation for finer detail and realism.

Each type trades off data needs, latency, and naturalness.

Why this matters for creators and teams

- Faster dubbing and localization: translate and re-voice videos at scale.
- E-learning and training: keep the same instructor voice across languages and modules.
- Accessibility: produce audio descriptions and screen-reader voices that match brand tone.
- Iteration and cost: record once and update scripts without rebooking talent.

Common outputs are MP3 or WAV files and synced MP4 video with subtitles. That makes voice cloning fit directly into editing, localization, and publishing workflows.

Legal landscape 2025 — core laws, standards, and trends

"Is voice cloning legal?" is a common question for creators and teams. The short answer: it depends on use, consent, and where you operate. This section maps the main legal risks and rules you must check before cloning a voice.

Consent and publicity rights: get clear, written permission

Consent is the single most important legal guardrail. Publicity rights (the right to control commercial use of a person’s voice or likeness) vary by place, but most disputes start with missing or vague consent. For practical projects, get a signed consent that names each use, language, territory, and duration. If you can, record a verbal consent on camera too. When the speaker is a public figure, assume stricter rules and higher damages.

Key consent clauses to include:

- Identity and contact for the speaker and operator.
- Exact uses allowed: ads, training, derivatives, and resale.
- Duration and territory, including sublicensing rights.
- Revocation terms and any fees for removal.
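The consent clauses above map naturally onto a structured record that tooling can check before each use. The following is a minimal sketch, not a legal instrument: the class name, field names, and example values are all hypothetical, and the logic only shows how scope, territory, duration, and revocation could be tested together.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class ConsentRecord:
    """One signed consent; field names are illustrative assumptions."""
    speaker_name: str
    operator_name: str
    allowed_uses: frozenset       # e.g. {"ads", "training"}
    territories: frozenset        # e.g. {"US", "EU"}
    valid_from: date
    valid_until: date
    sublicensing_allowed: bool = False
    revoked_on: Optional[date] = None

    def permits(self, use: str, territory: str, on: date) -> bool:
        """True only if use, territory, and date all fall inside the consent."""
        if self.revoked_on is not None and on >= self.revoked_on:
            return False
        in_window = self.valid_from <= on <= self.valid_until
        return in_window and use in self.allowed_uses and territory in self.territories


# Hypothetical example values.
consent = ConsentRecord(
    speaker_name="Example Speaker",
    operator_name="Example Studio",
    allowed_uses=frozenset({"training", "ads"}),
    territories=frozenset({"US", "EU"}),
    valid_from=date(2025, 1, 1),
    valid_until=date(2026, 12, 31),
)

print(consent.permits("ads", "US", date(2025, 6, 1)))     # inside scope
print(consent.permits("resale", "US", date(2025, 6, 1)))  # use not granted
```

Keeping the record immutable (`frozen=True`) means a change in scope requires a new record, which tends to make audits simpler.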

Privacy and data protection: treat voice data as personal data

Voice prints are biometric-like and often qualify as personal data. This triggers privacy law duties like data minimization and secure storage. For EU projects, include a lawful basis for processing and a retention limit. For user-facing apps, add a clear privacy notice and an opt-out process.

Practical controls to add:

- Limit recordings to the minimum clip length.
- Encrypt stored samples and keys.
- Maintain a deletion log and SLA for removal requests.
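A deletion log with an SLA can be as simple as a list of requests with a due date. The sketch below assumes a 30-day internal policy window (an illustrative value, not a statutory deadline) and hypothetical field names; it only shows how open requests past the window could be surfaced.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Assumed internal policy value; set this from your own retention policy.
REMOVAL_SLA = timedelta(days=30)


@dataclass
class DeletionEntry:
    sample_id: str
    requested_at: datetime
    deleted_at: Optional[datetime] = None  # None while the request is open

    @property
    def due_at(self) -> datetime:
        return self.requested_at + REMOVAL_SLA

    def is_overdue(self, now: datetime) -> bool:
        """A request is overdue if it is still open after the SLA window."""
        return self.deleted_at is None and now > self.due_at


def overdue(log: list, now: datetime) -> list:
    """Return the sample IDs of open requests that missed the SLA."""
    return [entry.sample_id for entry in log if entry.is_overdue(now)]


utc = timezone.utc
deletion_log = [
    DeletionEntry("sample-001", datetime(2025, 1, 1, tzinfo=utc),
                  deleted_at=datetime(2025, 1, 10, tzinfo=utc)),
    DeletionEntry("sample-002", datetime(2025, 1, 5, tzinfo=utc)),
]

print(overdue(deletion_log, datetime(2025, 3, 1, tzinfo=utc)))
```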

Fraud, deepfakes, and consumer protection: avoid harms

Regulators are focusing on synthetic media that cause fraud or harm. If outputs can mislead, you need labels and contextual disclosures. Platforms may face liability if they host harmful content without mitigations. So plan for misuse cases and mitigate them up front.

Quick steps to reduce risk:

- Watermark or tag synthetic audio.
- Build a detection and takedown process.
- Run a risk assessment for fraud vectors.

Copyright and training data: check rights for models and inputs

Using copyrighted recordings to train a model can trigger infringement claims. Also, generated speech that mimics copyrighted performances can raise new disputes. Keep provenance records for every training sample and use licensed or consented material only. If you license third-party voices, clarify derivative rights in writing.

Checklist items:

- Maintain a training-data ledger.
- Require vendors to warrant license rights.
- Avoid unlicensed commercial training samples.
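A training-data ledger can start as one row per audio sample, keyed by a content hash so the entry stays tied to the exact bytes used. This is a rough sketch under stated assumptions: the entry fields, the `LIC-` and `CONSENT-` reference formats, and the byte strings standing in for audio are all hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class LedgerEntry:
    sha256: str        # content hash of the training sample
    source: str        # where the recording came from
    license_ref: str   # license identifier, empty if none
    consent_id: str    # consent record identifier, empty if none


def make_entry(audio_bytes: bytes, source: str,
               license_ref: str = "", consent_id: str = "") -> LedgerEntry:
    digest = hashlib.sha256(audio_bytes).hexdigest()
    return LedgerEntry(digest, source, license_ref, consent_id)


def unlicensed(ledger: list) -> list:
    """Hashes of samples that have neither a license nor a consent reference."""
    return [e.sha256 for e in ledger if not e.license_ref and not e.consent_id]


# Placeholder bytes stand in for real audio files.
ledger = [
    make_entry(b"fake-audio-1", "studio session", consent_id="CONSENT-001"),
    make_entry(b"fake-audio-2", "vendor pack", license_ref="LIC-042"),
    make_entry(b"fake-audio-3", "unknown upload"),
]

print(json.dumps(asdict(ledger[0]), indent=2))
print(len(unlicensed(ledger)), "sample(s) need review before training")
```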

Emerging laws and voluntary standards: what changed in 2024–2026

According to European Commission Report (2025), "The AI Act entered into force on 1 August 2024 and will be fully applicable 2 years later on 2 August 2026, with some exceptions: prohibitions and AI literacy obligations entered into application from 2 February 2025, the governance rules and the obligations for general-purpose AI models become applicable on 2 August 2025, and the rules for high-risk AI systems - embedded into regulated products - have an extended transition period until 2 August 2027." In the US, agencies like the FTC push disclosure and anti-fraud rules. Several states keep passing voice-consent statutes. Voluntary standards are also emerging, covering watermarking, provenance metadata, and audit logging.

What this means for vendors and operators:

- Vendors must show governance, risk logs, and model cards.
- Operators should keep provenance, disclosures, and consent records.
- Non-compliance can trigger fines, takedowns, and civil claims.

Practical effect on projects and operator liability

In practice, treat voice cloning as a regulated media activity. Plan for contract controls, technical safeguards, and user notice. Bring your legal team in early. Document every consent, every training asset, and every output. Following these minimal steps cuts most legal risk and keeps your project audit-ready:

- Create a consent template and retention policy.
- Use vendor warranties and SOC-type reports.
- Build labeling, watermarking, and a takedown flow.

Voice cloning tools are changing fast, and the legal ground is shifting with them. This section maps key risks in 2025 and explains how they affect real projects. If you asked, "is voice cloning legal," the short answer depends on consent, who owns the voice, and where you publish.

Consent and publicity risks

You need clear permission to clone or use a real person’s voice. Without it, projects risk claims under right of publicity rules and state or country privacy laws. For creators, that means getting documented, narrow consent that names uses, languages, and distribution channels.

Practical consent checklist:

- Get written consent that lists specific uses, territories, and time limits.
- Record proof of identity for the consenting speaker.
- Include revocation rules and explain any retained backups.
- Map consent to each asset in your asset management system.

Privacy, fraud, and biometric protections

Voiceprints are biometric data in many laws, so handling them triggers extra duties. Regulators focus on fraud risk, like scams that impersonate officials or finance teams. For teams, this raises both legal and reputational exposure.

Mitigations to apply now:

- Treat voice samples as sensitive data, and apply encryption at rest and in transit.
- Use limited retention schedules and secure deletion after cloning tasks end.
- Require multi-factor verification for the distribution of cloned audio files.
- Add disclaimers and visible provenance markers in published media.

Copyright, licensing, and third-party content

Cloning a voice does not remove copyright or license obligations for the script or underlying performance. If a target recording contains copyrighted content, you may need a license. Also, derivative rights can be asserted by performers or talent unions.

Steps to reduce IP risk:

- Confirm you own or have licensed the script and source recording.
- Add contract clauses assigning or licensing synthetic voice outputs.
- Keep metadata showing which voice and dataset created each file.

Quick risk vs mitigation table

| Risk | Who it affects | Practical mitigation |
| --- | --- | --- |
| Unauthorized cloning | Creators, publishers | Written consent, identity proof |
| Biometric data exposure | Data controllers | Encryption, retention limits |
| Fraud/impersonation | End users, customers | Watermarks, distribution controls |
| Copyright claims | Publishers, clients | Clear licences, metadata trail |

New laws and industry standards to watch

Policy makers and industry bodies are adding specific rules. Expect tighter biometric rules, AI transparency mandates, and new disclosure requirements for synthetic media. Voluntary standards like watermarking and detection best practices are gaining traction across vendors. Vendors will be asked for processing records, model provenance, and audit logs.

Practical effect on projects

Plan for more paperwork, stronger data controls, and built-in provenance. Treat vendor contracts as compliance tools, not just commercial terms. If you design consent, storage, and publishing workflows now, you lower both legal risk and production delays.

Jurisdictional comparison: US, EU/UK, India, China (practical differences)

Creators often ask, "Is voice cloning legal across markets?" The short answer varies by place, and the differences change how you record, store, label, and publish synthetic audio. This section maps practical rules you must follow in the United States, the EU and UK, India, and China, focusing on what alters day-to-day work.

United States: right of publicity, fraud risk, and state mix

In the US, two legal threads matter most: right of publicity (control over your voice or likeness) and fraud or impersonation laws. Right of publicity is mostly state law, so permission rules change by state: some require written licenses, others are narrower. Federal fraud or wiretap laws can apply if cloning is used to mislead or commit financial fraud. Practically, get a clear written release and keep provenance records like source audio, consent logs, and usage notes.

EU and UK: strict data rules and new AI rules, focus on purpose and transparency

Privacy rules shape daily workflows in Europe and the UK. Article 5(1)(b) of Regulation (EU) 2016/679 (GDPR) stipulates that personal data must be collected for specified, explicit, and legitimate purposes and not further processed in a manner incompatible with those purposes. That means you must document why you needed the voice data, limit uses, and keep deletions and access processes ready. The EU AI Act adds risk-based controls for high-risk voice uses, like deepfake political messaging, so expect requirements for documentation, transparency, and human oversight.

India: emerging guidance and evidentiary gaps

India has no single national voice-cloning law yet, but courts and regulators are moving fast. Expect rules that borrow from privacy and telecom laws, and watch for sector rules in banking and education that ban impersonation. Evidence issues are real: courts are still learning to treat synthetic speech as admissible proof, so preserve metadata, transcripts, and chain-of-custody details when you produce cloned audio.

China: strict control, security reviews, and platform policing

China treats synthetic media as sensitive. Platforms often require real-name verification, explicit consent, and may demand security filings for services that produce synthetic voices at scale. Expect quick takedowns if content is flagged as fraudulent or politically sensitive. For cross-border projects, assume extra scrutiny and prepare localized consent and export controls.

Quick practical checklist (what changes your daily workflow)

| Task | US | EU/UK | India | China |
| --- | --- | --- | --- | --- |
| Written consent | Strongly recommended | Required when processing personal data | Recommended, becoming standard | Usually required by platforms |
| Purpose documentation | Best practice | Mandatory under GDPR | Helpful, supports admissibility | Often required for reviews |
| Store provenance metadata | Recommended | Required for audits | Critical for evidence | Critical for platform checks |
| Criminal fraud risk | Varies by state | Covers misuse under AI rules | Growing enforcement | High enforcement risk |

Keeping simple records solves most cross-border problems: a signed consent, a purpose statement, original audio, and a log of processing steps. These items turn legal uncertainty into a repeatable compliance process.
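The four records named above lend themselves to a simple pre-publication gate. The snippet below is only a sketch: the key names and the idea of storing file paths or IDs in a dictionary are assumptions made for illustration.

```python
# Hypothetical keys for the four records: consent, purpose, source audio, processing log.
REQUIRED_RECORDS = (
    "signed_consent",
    "purpose_statement",
    "original_audio",
    "processing_log",
)


def missing_records(project: dict) -> list:
    """Return the required records that are absent or empty for a project."""
    return [key for key in REQUIRED_RECORDS if not project.get(key)]


project = {
    "signed_consent": "consents/speaker-01.pdf",
    "purpose_statement": "Localized onboarding videos",
    "original_audio": "",  # not yet archived
}

gaps = missing_records(project)
print("Ready to publish" if not gaps else f"Blocked, missing: {gaps}")
```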

Consent, publicity rights, and IP — practical rules and templates

Creators and teams often ask, "Is voice cloning legal?" and "What consent do I need to clone a voice?" The short answer: you usually need explicit, recorded permission from the speaker for commercial or public uses, plus separate clearances for publicity and IP where relevant. Below are practical rules, short templates you can adapt, and fast checks teams can use before cloning a voice.

When do you need consent?

- Private use: low risk, but still get consent for clarity and audit trails.
- Commercial distribution: always obtain written consent that covers the specific uses, territories, and duration.
- Employee or contractor voices: use a signed work-for-hire or license clause so rights are clear.

What counts as valid consent?

Valid consent must be informed, specific, and recorded. That means the speaker gets a plain-language explanation of how the clone will be used, who can access it, how long it will exist, and whether it can be sublicensed. Best practice is an audio or video recording plus a short signed form. Keep metadata and the original source file as evidence.

Public figures and deceased voices

Public figures have reduced privacy protections in many places, but publicity rights and trademark claims still matter. You may need a publicity clearance for commercial use even if the person is famous. For deceased voices, check state or local laws: some places recognize post-mortem publicity rights, so you’ll often need estate approval.

Licensing versus ownership of synthetic voices

Cloned voices are usually licensed, not owned. Your vendor or creator may retain underlying model IP while you get a use license. Make sure the license covers: commercial use, redistribution, derivative works, and termination terms. Ask for exportable copies or escrow arrangements for enterprise continuity.

Quick consent clause: adaptable snippet

I grant [Company] a worldwide, royalty-free, transferable license to create, use, modify, and distribute synthetic voices based on my recorded voice for the purposes described here. This license includes the right to sublicense and to store copies for backup and compliance.

Use that clause as a starter in your editable template. For teams, keep a simple checklist: speaker ID, scope, duration, storage, deletion trigger, and estate/contact info for post-mortem cases.

Find a downloadable consent template and an editable clause in the Voice Cloning Compliance cluster to adapt for hires and external talent.

Technical safeguards: watermarking, detection, and forensics

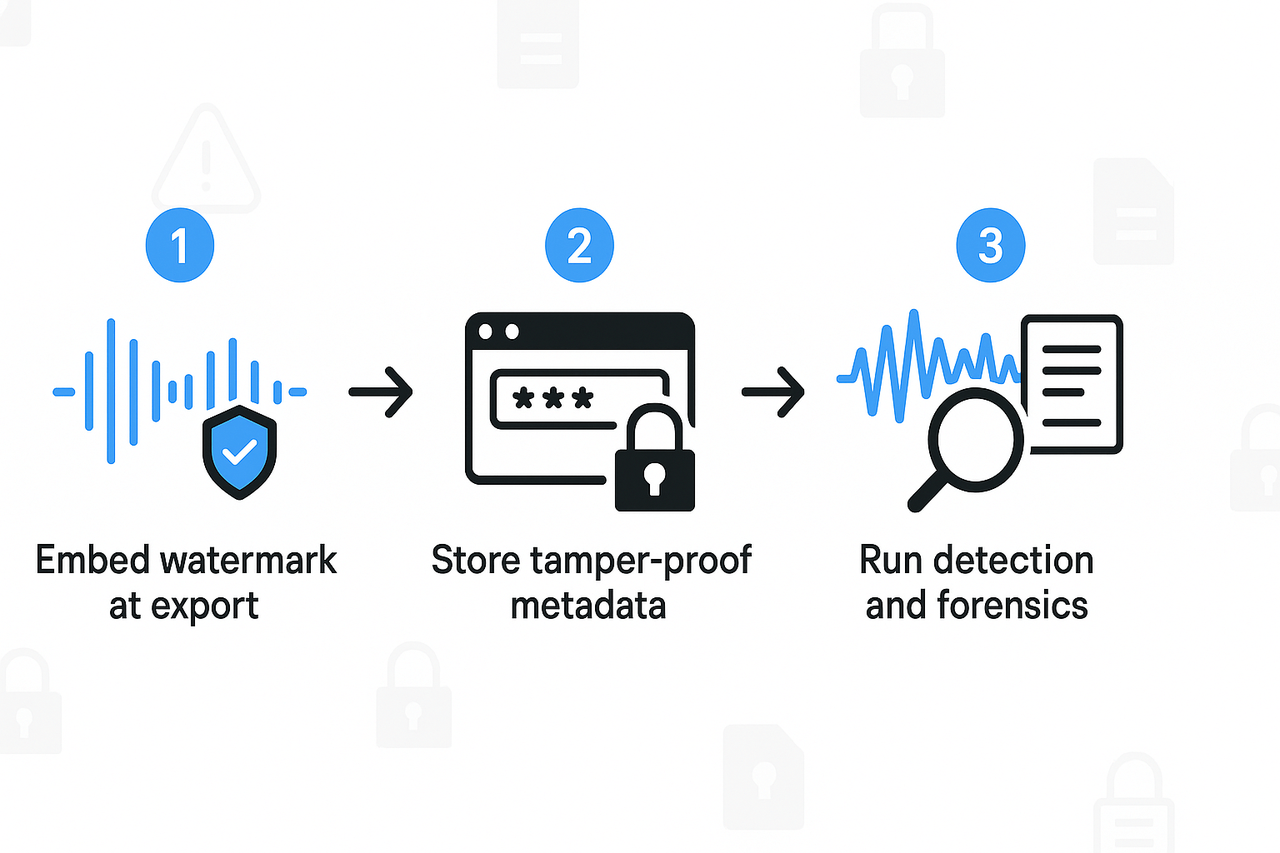

Start with controls that reduce misuse risk. If you’re asking is voice cloning legal, technical safeguards don’t replace consent and policy, but they cut risk and strengthen compliance evidence. This section explains watermarking approaches, detection tools, basic audio forensics steps, and operational controls you can test quickly.

Watermarking options and how to use them

Watermarks embed a hidden signal into audio so you can prove the origin. Use two kinds: robust (survives editing) and fragile (flags tampering). Robust watermarks survive compression and minor edits. Fragile watermarks help detect edits or splices.

Best practices

- Embed the watermark at export, not earlier. That locks the signed copy.
- Combine inaudible spectral marks with tamper-proof metadata. Metadata alone is easy to strip.
- Link each watermark to a unique consent record or speaker ID.

Detection tools and automated checks

Detection uses model fingerprints, spectral analysis, and metadata verification. Run automated scans on uploads and before distribution. Use thresholds to flag likely synthetic audio and queue higher-risk files for manual review.

Common checks

- Fingerprint matching against known synthetic profiles.
- Spectral anomaly detection for artifacts from synthesis.
- Metadata and provenance chain verification.
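Threshold-based triage can be expressed in a few lines. The sketch below assumes a detector that returns a score between 0 and 1; the function name, the labels, and both threshold values are hypothetical and would need tuning against your own detector and data.

```python
def triage(synthetic_score: float,
           flag_at: float = 0.5,
           review_at: float = 0.8) -> str:
    """Route a file by its detector score (0.0 = likely real, 1.0 = likely synthetic)."""
    if not 0.0 <= synthetic_score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if synthetic_score >= review_at:
        return "manual_review"   # higher-risk: queue for a human reviewer
    if synthetic_score >= flag_at:
        return "flagged"         # likely synthetic: label and log
    return "clear"


for score in (0.12, 0.63, 0.91):
    print(score, "->", triage(score))
```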

Quick audio-forensics steps to follow after a report

- Preserve the original file in a write-once location. Don’t re-encode it.
- Run your automated detector and save the report.
- Extract the watermark and compare it to the consent records.
- Document the chain of custody and timestamps.
- Escalate to legal if the watermark is missing or mismatched.

Operational controls that matter

Encrypt audio in transit and at rest. Use role-based access and strict key management. Keep immutable logs for processing and export events. Apply short retention windows and delete idle clones tied to expired consent.
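One common way to make processing and export logs tamper-evident is to chain each entry to the previous one by hash. The sketch below is a minimal illustration with an in-memory list and hypothetical event fields; it detects edits after the fact but does not prevent them, so a real deployment would likely pair it with write-once storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_event(log: list, event: dict) -> None:
    """Append an event whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev_hash": prev_hash,
                "hash": _digest(prev_hash, event)})


def verify(log: list) -> bool:
    """Recompute the chain; any edited or reordered entry breaks it."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_event(audit_log, {"action": "clone_created", "user": "reviewer-1"})
append_event(audit_log, {"action": "export", "user": "editor-2"})
print(verify(audit_log))
```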

Simple testing plan for watermark durability and detection

- Export a watermarked clip.
- Re-encode to MP3, lower bitrate, and back to WAV.
- Apply speed, pitch, and background noise edits.
- Trim and splice segments.
- Run detection after each transform and record the detection rate.
- Adjust the embedding strength until you meet a target detection rate.
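The testing loop above can be prototyped before you have a production watermarker. The sketch below swaps in a toy spread-spectrum mark (a keyed ±1 sequence added to the samples and detected by correlation) and a few simple transforms in place of real MP3 re-encoding or pitch edits. Every name, the key, the strength, and the threshold are illustrative assumptions, and the random noise stands in for real audio.

```python
import random


def keyed_sequence(key: int, n: int) -> list:
    """Pseudo-random +/-1 sequence derived from a secret key."""
    rng = random.Random(key)
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]


def embed(samples: list, key: int, strength: float) -> list:
    seq = keyed_sequence(key, len(samples))
    return [s + strength * c for s, c in zip(samples, seq)]


def detect(samples: list, key: int, threshold: float) -> bool:
    """Correlate with the keyed sequence; a marked clip scores near `strength`."""
    seq = keyed_sequence(key, len(samples))
    corr = sum(s * c for s, c in zip(samples, seq)) / len(samples)
    return corr > threshold


def make_clip(seed: int, n: int = 20000) -> list:
    rng = random.Random(seed)
    return [rng.gauss(0.0, 0.1) for _ in range(n)]  # noise as stand-in audio


def add_noise(samples: list) -> list:
    rng = random.Random(99)
    return [s + rng.gauss(0.0, 0.05) for s in samples]


TRANSFORMS = {
    "identity": lambda x: x,
    "added_noise": add_noise,
    "gain_0.8": lambda x: [0.8 * s for s in x],
    "trim_tail": lambda x: x[:15000],
    "trim_head": lambda x: x[100:],  # shifts the clip, so the key loses alignment
}


def detection_rates(key: int, strength: float, clips: list) -> dict:
    threshold = strength / 2
    rates = {}
    for name, transform in TRANSFORMS.items():
        hits = sum(detect(transform(embed(c, key, strength)), key, threshold)
                   for c in clips)
        rates[name] = hits / len(clips)
    return rates


clips = [make_clip(seed) for seed in range(5)]
rates = detection_rates(key=1234, strength=0.02, clips=clips)
for name, rate in rates.items():
    print(f"{name}: {rate:.0%}")
```

In this toy setup, trimming the start of the clip defeats detection because the detector assumes sample-exact alignment. That is the kind of gap the test plan is meant to expose, and production schemes typically add synchronization to handle it.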

These controls help you prove origin, detect misuse, and meet compliance needs. Test regularly and log results for audits.

How DupDub supports compliant voice cloning — features & step-by-step workflow

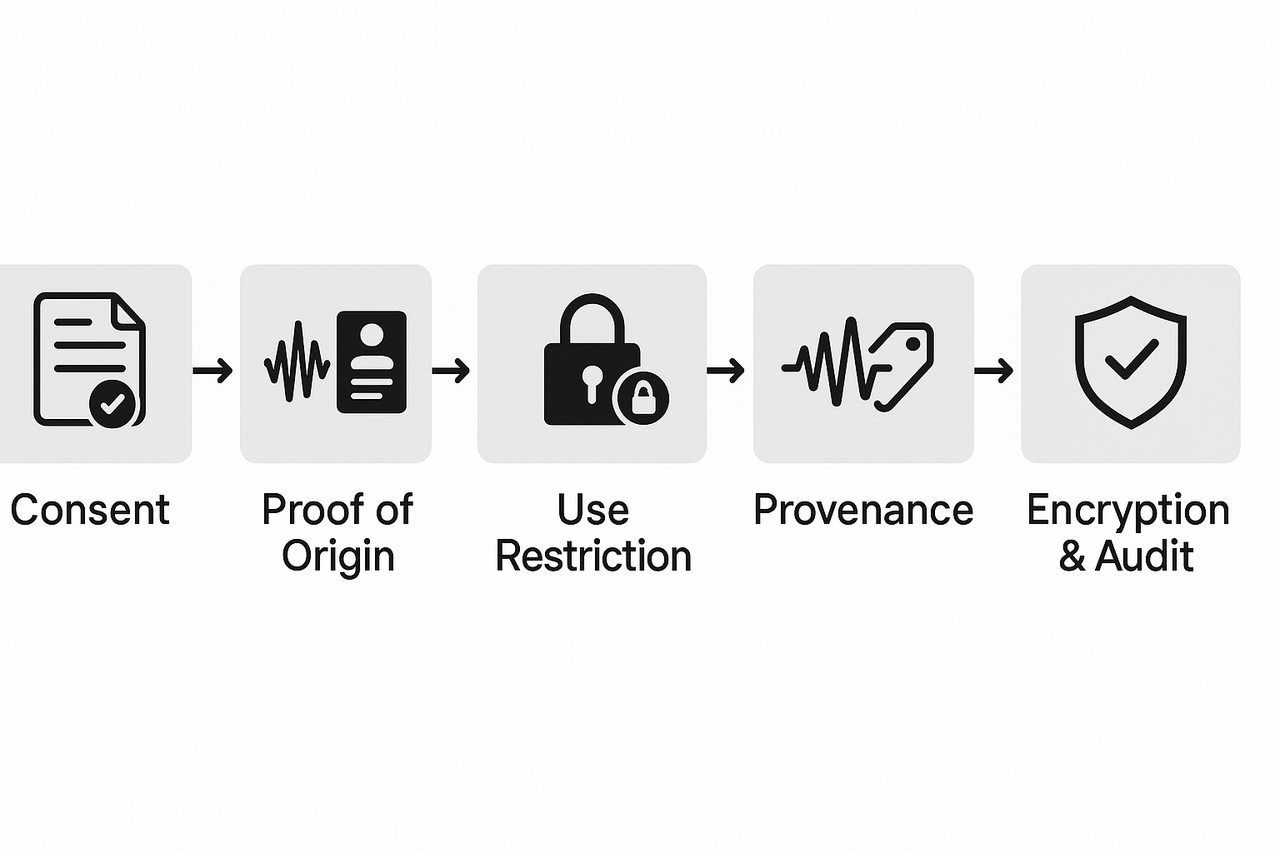

You need a clear, auditable process that ties legal rules to tool controls. This section shows a practical workflow you can run today, mapped to core compliance steps like consent, proof of origin, and secure export. If you’re asking, "Is voice cloning legal?", this checklist shows how to reduce legal risk while staying productive.

Map of compliance controls to DupDub features

Use these features to meet legal and policy needs:

- Consent collection: built-in consent capture and upload field, with user signature metadata. Keep signed forms linked to each clone.
- Voice locking: clones locked to the original speaker so they can’t be reused without permission.
- Watermarking and metadata: audible watermark plus file metadata for traceability.
- Encryption and secure export: end-to-end encryption for stored and exported assets.
- Audit logs and role controls: full activity log, permissioned access, and user roles for reviewers.

Step-by-step project workflow, linked to legal tasks

Follow these numbered steps for a single project. Each step lists the legal control it supports:

1. Prepare and get consent (legal control: informed consent, publicity rights)
   - Use a downloadable consent template and fill in the scope, languages, and use cases.
   - Upload the signed PDF into DupDub’s project and attach it to the speaker profile.
   - Tag consent with retention and expiry dates.
2. Collect voice sample and verify identity (legal control: proof of origin)
   - Record a 30-second sample in DupDub’s browser studio, or upload an audio file.
   - Add an ID check note and a timestamped entry to the audit log.
3. Create a locked clone (legal control: use restriction, chain of custody)
   - Choose cloning settings and enable voice locking so the clone is bound to the speaker.
   - Limit clone usage to specific projects or API keys.
4. Embed watermark and metadata (legal control: provenance and attribution)
   - Turn on audible watermarking for draft exports and embed machine-readable metadata.
   - Watermarks help prove synthetic origin in disputes and platform reviews.
5. Apply security controls and encryption (legal control: data protection)
   - Enable at-rest and in-transit encryption for all clone assets.
   - Set role-based access so only approved people can generate audio.
6. Export with audit trail (legal control: nonrepudiation, retention)
   - Export audio as encrypted WAV or MP3 and include a sidecar metadata file.
   - Store the export record in DupDub’s audit log with user ID and timestamp.
7. Retain, revoke, or delete (legal control: data minimization and revocation)
   - Follow your retention policy and use DupDub’s delete or revoke options when needed.
   - Log deletions for compliance reporting.
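If you also keep your own export records alongside the tool’s audit log, a sidecar metadata file can be generated with a short script. The sketch below is not DupDub’s export format: the field names, IDs, and placeholder audio bytes are assumptions chosen to show how a content hash ties the sidecar to one specific file.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_sidecar(audio_bytes: bytes, clone_id: str, consent_id: str,
                  exported_by: str, exported_at: datetime) -> dict:
    """Describe one exported file; the hash binds the record to the exact bytes."""
    return {
        "sha256": hashlib.sha256(audio_bytes).hexdigest(),
        "clone_id": clone_id,
        "consent_id": consent_id,
        "exported_by": exported_by,
        "exported_at": exported_at.isoformat(),
        "synthetic": True,
    }


def matches(audio_bytes: bytes, sidecar: dict) -> bool:
    """Check that a file is the one the sidecar was written for."""
    return hashlib.sha256(audio_bytes).hexdigest() == sidecar["sha256"]


audio = b"placeholder-audio-bytes"  # stands in for the exported WAV or MP3
sidecar = build_sidecar(
    audio,
    clone_id="clone-017",
    consent_id="CONSENT-001",
    exported_by="editor-2",
    exported_at=datetime(2025, 6, 1, 12, 0, tzinfo=timezone.utc),
)

print(json.dumps(sidecar, indent=2))
print(matches(audio, sidecar))
```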

Quick tips for legal teams and creators

- Always attach the signed consent to the clone record.
- Keep watermarked drafts for review; release unwatermarked final files only if the contract allows.
- Use short retention windows for voice samples you don’t need.

Vendor vetting checklist & red flags (what to ask vendors)

When assessing vendors, teams need a short, action-oriented checklist that covers legal, technical, and operational risk. Ask clear questions about consent handling, watermarking, data deletion, and liability. If you’re wondering "is voice cloning legal", the vendor should show how their features support compliance, not just make marketing claims.

Must-ask checklist

- Consent mechanics: How is consent captured, time-stamped, and stored? Ask for a demo of the consent UI and downloadable audit record.
- Watermarking and detection: Do you support inaudible audio watermarks and forensic markers tied to an account or clone ID?
- Clone revocation: Can a cloned voice be disabled or destroyed on request? What is the revocation SLA?
- Data deletion and retention: How do you delete voice samples and training artifacts? Request a written retention schedule.
- Encryption and access control: Is data encrypted at rest and in transit? Describe role-based access and key handling.
- Audit logs and exportability: Are logs immutable and exportable for audits or e-discovery?
- SLA and uptime: What SLAs cover availability, incident response, and breach notification?
- Indemnity and liability: Does the contract include indemnity for IP and privacy claims? Get limits in writing.
- Training data policy: Will my uploads be used to train third-party models? Ask for a signed prohibition if required.
- Forensics support: Will you assist with legal forensics and provide markers or samples on demand?

Red flags to watch for

- No watermarking or detection tools.
- No clone revocation or deletion guarantee.
- Vague or absent audit logs.
- Data shared with unnamed third parties.
- No written indemnity or overly narrow liability.
- No encryption or weak access controls.
- Refusal to show a consent workflow demo.
- Black box training claims with user uploads used for model training.

Run a short proof of concept and ask for sample logs and a signed data handling addendum before procurement.

FAQ — quick answers to People Also Ask and common audience questions

- Is voice cloning legal without consent?

  Often illegal for commercial use, and it can trigger civil or criminal claims. Laws vary by country and state, so risk is context-dependent. Obtain signed consent and use the downloadable consent template in the Consent, publicity rights, and IP section.

- How can I clone a celebrity voice legally?

  You need a license from the celebrity or their estate before cloning a public figure. Secure publicity releases or impersonation licenses; do not rely on fair use defenses. See the Jurisdictional comparison and Vendor vetting checklist for step-by-step actions.

- How do I verify synthetic audio and detect AI voice?

  Use audio watermarking, spectrogram checks, and automated AI detectors to spot synthetic speech. Keep originals, metadata, and chain of custody logs for forensic checks. See Technical safeguards for tools and an audio watermark demo.

- Is AI voice cloning legal for e-learning and enterprise training?

  Yes, with explicit written consent, limited scope, and clear retention rules. Lock clones to the speaker, encrypt files, and log use for audit trails. See the DupDub workflow and downloadable compliance checklist for a practical setup.