TL;DR — Key takeaways

Why multilingual TTS matters for eLearning accessibility and engagement

Practical use cases and measurable outcomes

-

Localization for global teams: Generate native-language audio and timed subtitles, cut translation turnaround from weeks to hours. Measurable outcome: reduce localization time by X days and increase completion rates in non-English cohorts.

-

Remediation for learners with reading challenges: Deliver narrated lessons, slow-speed versions, and adjustable voices to support dyslexia, low literacy, or visual impairment. Measurable outcome: boost comprehension quiz scores and reduce dropout for at-risk learners.

-

Bilingual cohort delivery: Provide side-by-side audio and captions so bilingual learners toggle language on demand. Measurable outcome: higher learner satisfaction scores and faster onboarding in mixed-language cohorts.

-

On-demand microlearning and review: Auto-generate short audio summaries for review, which increases repetition and retention. Measurable outcome: improved recall in spaced-repetition checks.

How DupDub enables accessible, multilingual eLearning

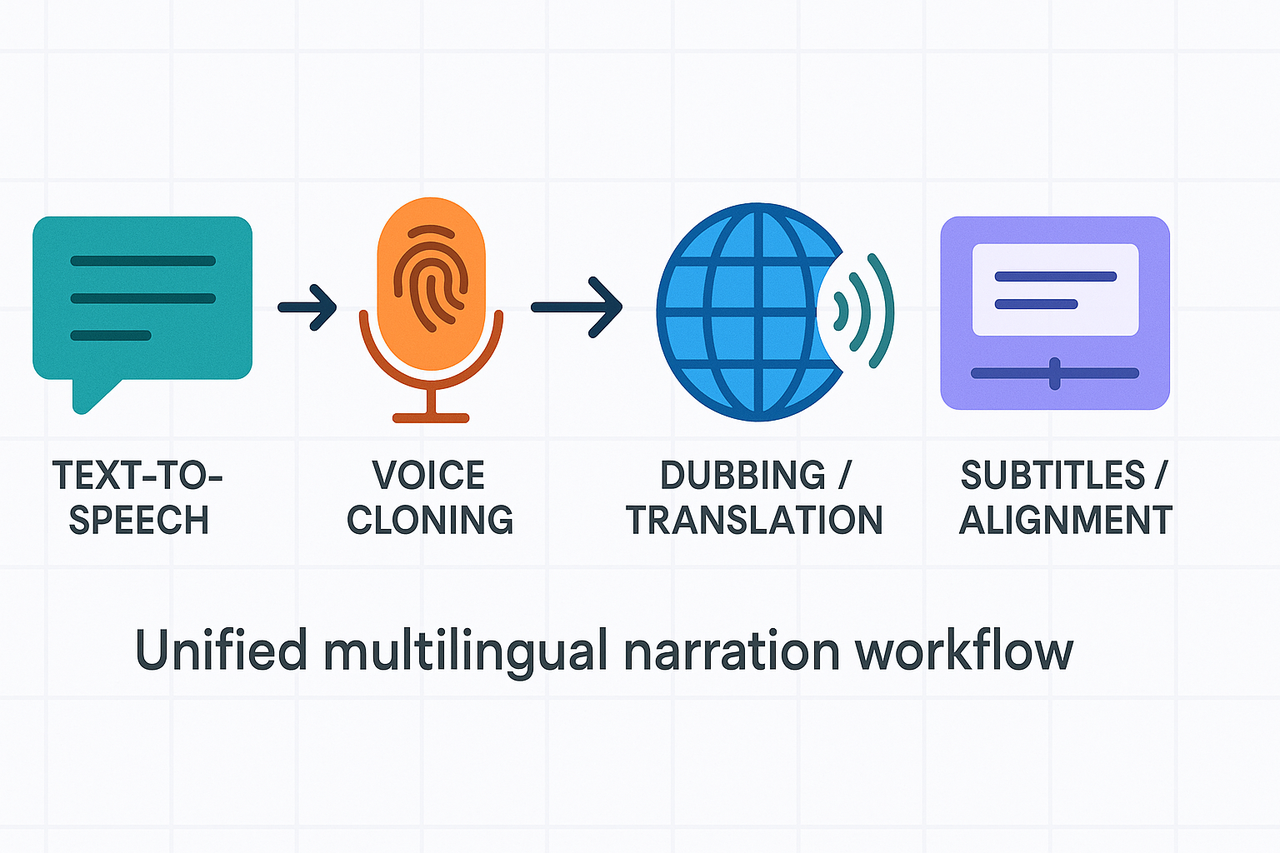

Core modules, in one unified workflow

-

Text to Speech: convert script text to natural narration with selectable voices and styles.

-

Voice cloning: create a brand or instructor voice from a short sample for consistent course narration.

-

Dubbing and translation: translate and synthesize audio per locale, while keeping timing and tone.

-

Subtitles and alignment: generate, edit, and time captions automatically for accessibility and localization.

-

Video editing and export: stitch audio, captions, and video, then export ready assets for LMS upload.

Supported languages and export formats

Security and privacy for voice data

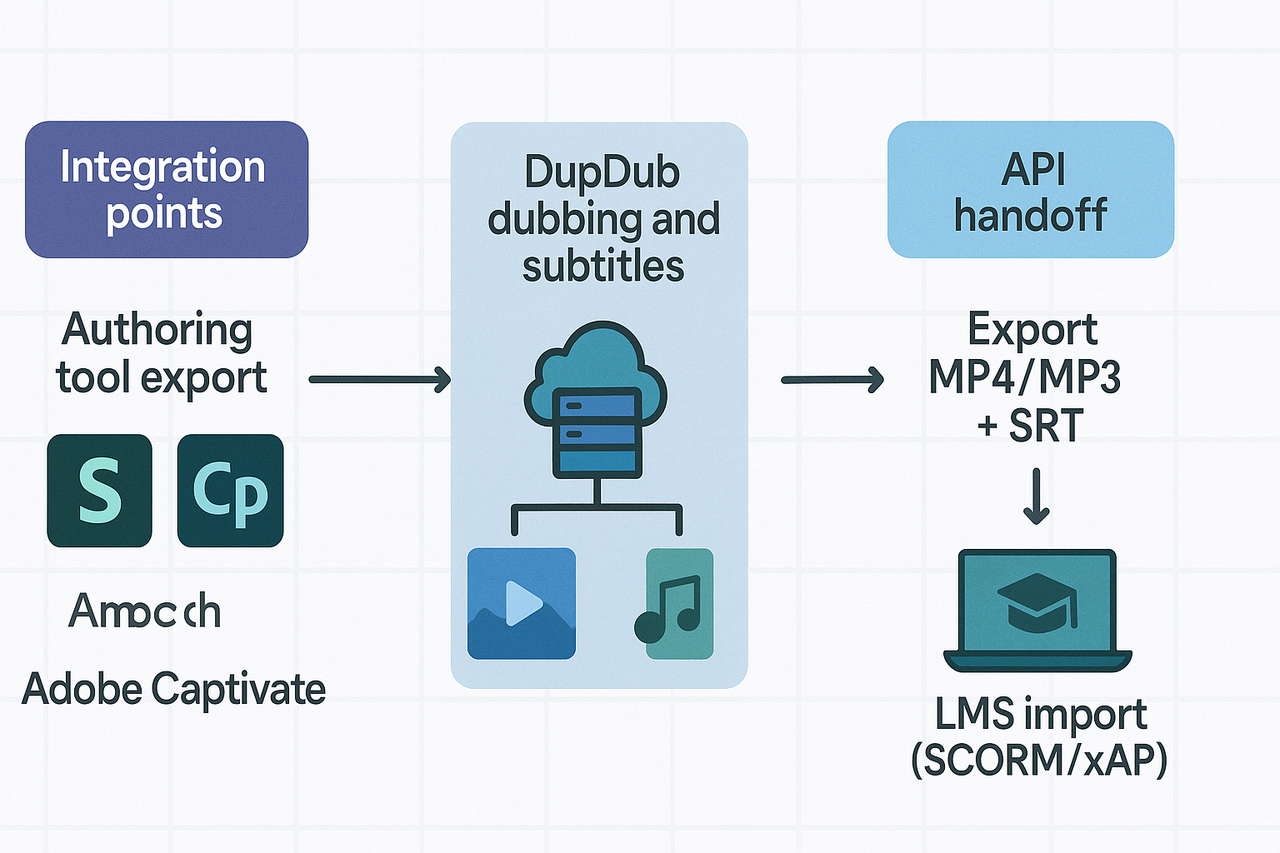

Where this fits in typical eLearning pipelines

-

Author creates or imports script in an authoring tool.

-

Export text to the platform for TTS or cloning, then pick a voice and language.

-

Generate audio and auto-align subtitles, then review and edit timing.

-

Export MP3/WAV, MP4, and SRT files and import them back into the authoring tool.

-

Publish the final SCORM/xAPI package or MP4 to the LMS.

How to evaluate TTS quality for eLearning: an accessibility checklist

Quick scoring method

Checklist (with test steps and pass/fail criteria)

-

Naturalness and listener comfort

-

Test: Play a 60-second script to three listeners.

-

Pass if mean naturalness ≥4 and no listener reports robotic tone.

-

-

Intelligibility at varied speeds

-

Test: Play script at normal, +15%, and -15% speed.

-

Pass if word recall ≥80% across speeds.

-

-

Pronunciation control and phonemes (SSML)

-

Test: Apply phoneme tags and custom lexicon to 10 known trouble words.

-

According to Speech Synthesis Markup Language, SSML standardizes speech controls for pronunciation and prosody. Pass if all 10 words sound correct.

-

-

Pacing and breath placement

-

Test: Check pauses after bullets and before new ideas.

-

Pass if pause lengths match script intent and improve comprehension.

-

-

Emotional range and styles

-

Test: Generate the same line in neutral and two emotional styles.

-

Pass if listeners correctly identify tone 70% of the time.

-

-

Caption and transcript accuracy

-

Test: Export SRT and compare to script.

-

Pass if subtitle error rate ≤5%.

-

-

Privacy, compliance, and data control

-

Test: Verify encryption, usage terms, and voice cloning policy.

-

Pass if the vendor allows commercial use and protects voice uploads.

-

Step-by-step tutorial: Create a multilingual eLearning voiceover with DupDub

Quick overview

-

Prepare the script and timing

-

Break the script into short, natural lines for each slide or screen. Keep lines under 12 words when possible.

-

Add timing notes in brackets, for example [pause 0.6s] or [slow].

-

Save a master script as CSV or SRT, matching slide IDs if you use an authoring tool.

-

-

Generate a voice or clone

-

Choose a voice from the library, or upload a single-speaker sample to clone a narrator.

-

Test a short paragraph first, check pronunciation, tone, and locale.

-

Use voices matched by accent and formality to improve learner trust.

-

-

Apply emotion, style, and pacing

-

Tweak style controls: energy, warmth, and pitch to match content type.

-

Use short SSML (speech synthesis markup) tags or the tool's style presets to add pauses and emphasis. Explain SSML (markup that controls speech) if reviewers are new.

-

Preview audio with the actual slide to confirm pacing.

-

-

Export audio and SRT for LMS import

-

Export single-track WAV or MP3 for each slide, or a single MP3 plus aligned SRT captions.

-

DupDub allows users to export voiceovers as MP3, MP4, or SRT files, ready for use in any platform or workflow.

-

Name files with slide IDs (slide_01.wav, slide_01.srt) for smooth LMS or authoring tool import.

-

-

Batch dubbing and localization workflow

-

Translate source SRTs, then run batch voice generation for each language.

-

Keep one timing master per language to avoid re-sync cycles.

-

Use short reviewer windows: review audio + captions together to cut rounds.

-

-

Package for SCORM or xAPI

-

Replace original audio assets in your authoring file (Articulate, Captivate).

-

Upload the updated package to the LMS. SCORM and xAPI are course packaging and tracking standards (SCORM sends module progress, xAPI tracks detailed learner actions).

-

-

Match filenames to slide IDs.

-

Confirm captions align with audio.

-

Run a final QA pass in the target LMS environment.

-

Keep a glossary of brand and technical terms for consistent pronunciation.

-

Use small A/B voice tests with learners to pick the best voice.

-

Automate repeats via API when you update scripts at scale.

Integrating DupDub with authoring tools and LMS (sample workflows)

Articulate Storyline workflow

-

Export slides and narration scripts as one CSV or per-slide text. Keep filenames simple.

-

In the platform, pick language, voice, and style, then batch-generate MP3/WAV plus SRT files.

-

Download audio and captions, named to match slide IDs.

-

In Storyline, import audio to each slide: Insert > Audio > Slide Audio.

-

Add captions using the built-in caption editor, or import SRT where supported.

-

Preview and adjust slide timing to follow the new audio. Simple, repeatable, and fast.

Adobe Captivate workflow

-

Export slide notes or caption files from Captivate. Use per-slide text files or a single CSV.

-

Upload text to the tool and request MP3/WAV + SRT. Choose a sample rate of 44.1 kHz for best compatibility.

-

In Captivate, use Audio > Replace or Import to attach voiceovers to slides.

-

Import SRT as closed captions or paste into the caption panel.

-

Sync timing in the Audio Management view and test with the slide playhead.

SCORM and xAPI packaging tips

-

Include both MP3 and SRT in the package. Name files identically except extensions (course01.mp3 and course01.srt).

-

For MP4 lecture videos, embed captions as SRT and provide a separate transcript text file.

-

Ensure manifest references match filenames so LMS picks up captions and audio.

API automation for batch dubbing

-

Export scripts and metadata (slide IDs, duration) as CSV.

-

Use the API to request voice generation and SRT per row.

-

Auto-attach returned assets to SCORM/xAPI manifests, or push them to LMS via LTI or the LMS API.

DupDub vs other Text-to-Speech tools for eLearning — side-by-side comparison

Evaluation criteria

-

Voice quality: naturalness, emotion control, and preset styles. High-quality models reduce rework in narration.

-

Language coverage: supported languages and accents, plus subtitle and STT support.

-

Pricing model: credits, subscription tiers, and commercial use limits.

-

Integrations: LMS, authoring tools, APIs, and plugin support.

-

Automation and scaling: batch dubbing, API access, and CI/CD-friendly workflows.

Side-by-side comparison table

|

Criteria

|

DupDub

|

WellSaid

|

Speechify

|

Google / Azure TTS

|

|

Voice quality

|

Large library, 1000+ styles, emotional control

|

Studio-grade, very natural

|

Clear, tuned for speed listening

|

Very high-quality neural voices

|

|

Language coverage

|

90+ TTS languages, 47 cloning languages

|

20+ languages, strong English variants

|

30+ languages, consumer focus

|

45+ languages, 110+ voices

|

|

Voice cloning

|

Fast, multi-language cloning

|

Limited cloning options

|

No cloning, focus on the reader app

|

Cloning via advanced SDKs

|

|

Integrations

|

API, Canva, YouTube plugin, SRT

|

LMS via export, some APIs

|

Browser/mobile apps, limited APIs

|

Robust cloud SDKs and plugins

|

|

Pricing model

|

Credit-based tiers, free trial

|

Subscription tiers, enterprise quotes

|

Freemium with premium plans

|

Pay-as-you-go cloud billing

|

|

Automation

|

Batch dubbing, API orchestration

|

Enterprise pipelines, limited dubbing

|

Not built for batch dubbing

|

Strong automation, cloud-native

|

When DupDub is decisive, and when to choose an alternate

Mini case studies & quotes from instructional designers

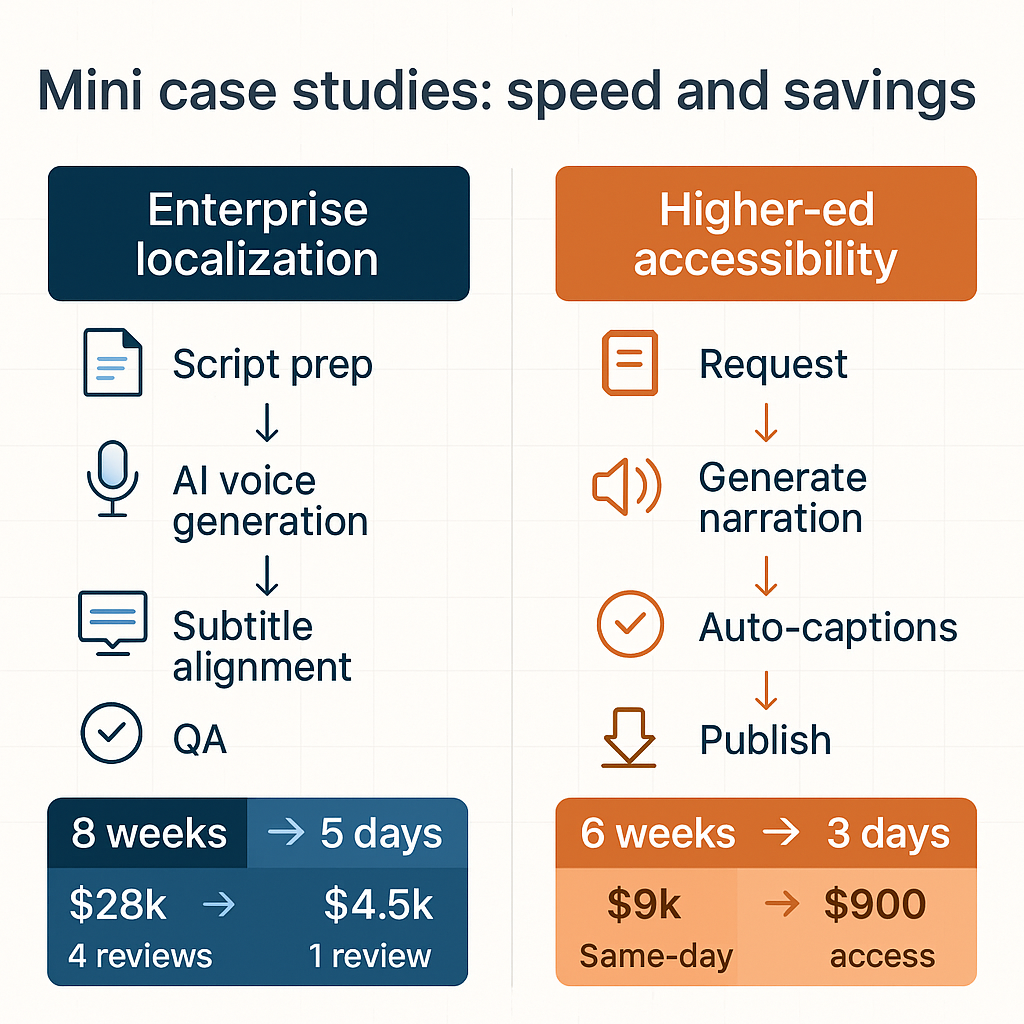

Enterprise training localization: shrink global rollout time

-

Before: 8 weeks per language, vendor cost $28,000, 4 reviewer cycles.

-

After: 5 days per language, internal cost $4,500, 1 reviewer cycle.

-

Outcome: 80% faster turnaround and 84% lower localization spend.

Higher ed accessibility: scale narration and captions affordably

-

Before: 6 weeks for a course update, $9,000 production cost, and students waited for accommodations.

-

After: 3 days for the same update, $900 production cost, same-day access for students.

-

Outcome: 90% cost reduction and faster accommodation delivery.

Designer voices: reviewer cycles, learner feedback, adoption

-

"Reviewer rounds fell from four to one. That freed weeks for new content." — Instructional designer, enterprise L&D.

-

"Students thanked us for same-day captions and audio. Accessibility complaints dropped." — Accessibility manager, higher ed.

-

"Adoption rose fast because our SMEs accepted synthetic voices quickly." — Senior ID, curriculum team.

FAQ — common questions about TTS for eLearning and DupDub

-

Is AI voice accuracy good enough for TTS e-learning?

Human-like accuracy varies by language, voice model, and how you edit prosody. Use SSML (speech markup) and short A/B tests with native speakers to tune pronunciation and pacing. Next steps: pilot a short module and measure comprehension and learner satisfaction.

-

Is voice cloning legal and safe with AI voice for eLearning?

Cloning needs clear consent from the speaker and a written use agreement. Keep source files and credentials private, and check that any vendor restricts uploads to the original speaker. Treat cloned voices like recorded talent, with the same rights and approvals.

-

Can Text-to-Speech Tools for E-Learning meet ADA and WCAG requirements?

Yes, when you pair clear speech with captions, keyboard controls, semantic structure, and adjustable playback speed. Test with screen readers and follow our downloadable accessibility checklist to verify compliance. Use transcripts and time-aligned subtitles for full accessibility.

-

What should I check about pricing and licensing for Text-To-Speech Software For eLearning?

Compare models, since vendors use subscription, credit, or pay-per-use pricing. Look for these items: - commercial use and redistribution rights - voice cloning and API access - export formats like MP3, WAV, and SRT

-

How do I try the tool and get support for multilingual TTS and DupDub?

DupDub offers a short free trial and tiered plans with credits for voiceover, dubbing, and cloning. For implementation, review the API docs, request a demo, or test voice cloning on a pilot course. Also download the accessibility checklist to guide evaluation.