-

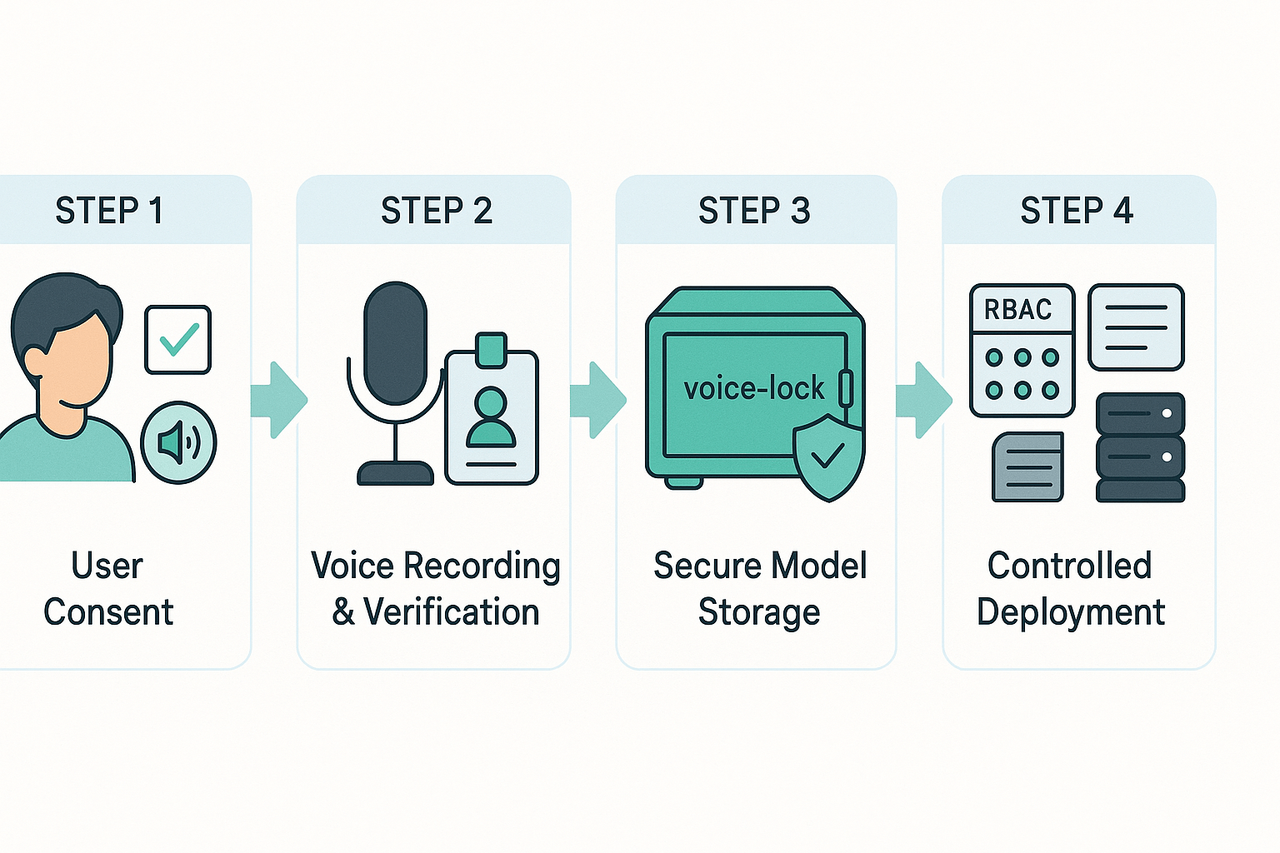

Get explicit, recorded consent from speakers before cloning voices.

-

Limit and label training data, keep retention logs, and deletion paths.

-

Apply speaker locking and anti-misuse controls to prevent impersonation.

-

Encrypt voice assets and keep auditable access logs for compliance reviews.

What are TTS regulations, and why do they matter for creators and businesses?

Why regulators focus on synthetic voices

Typical legal duties for builders and creators

- Clear consent from speakers before cloning their voice, with documented records.
- Transparent labeling of synthetic audio so listeners know it’s not real.
- Data minimization and secure storage, including encryption at rest and in transit.
- Robust retention and deletion policies, plus audit logs for provenance.
- Safety monitoring to detect misuse, plus takedown procedures.
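The retention and deletion duty above can be sketched as a periodic sweep. This is a minimal illustration, not a description of any vendor's API: the `VoiceAsset` fields, the 30-day period, and the log entry layout are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class VoiceAsset:
    asset_id: str        # hypothetical identifier for a stored voice sample
    stored_at: datetime  # when the sample was stored (UTC)
    retention_days: int  # retention period agreed for this sample


def due_for_deletion(assets, now):
    """Return the assets whose retention period has expired as of `now`."""
    return [
        a for a in assets
        if now >= a.stored_at + timedelta(days=a.retention_days)
    ]


def deletion_log_entry(asset, now):
    """Record that a deletion happened without keeping the audio itself."""
    return {
        "asset_id": asset.asset_id,
        "deleted_at": now.isoformat(),
        "reason": "retention period expired",
    }


if __name__ == "__main__":
    now = datetime(2025, 1, 31, tzinfo=timezone.utc)
    assets = [
        VoiceAsset("sample-a", datetime(2024, 12, 1, tzinfo=timezone.utc), 30),
        VoiceAsset("sample-b", datetime(2025, 1, 15, tzinfo=timezone.utc), 30),
    ]
    for asset in due_for_deletion(assets, now):
        print(deletion_log_entry(asset, now))
```

Keeping the deletion log separate from the audio means the evidence of deletion survives after the sample itself is gone.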

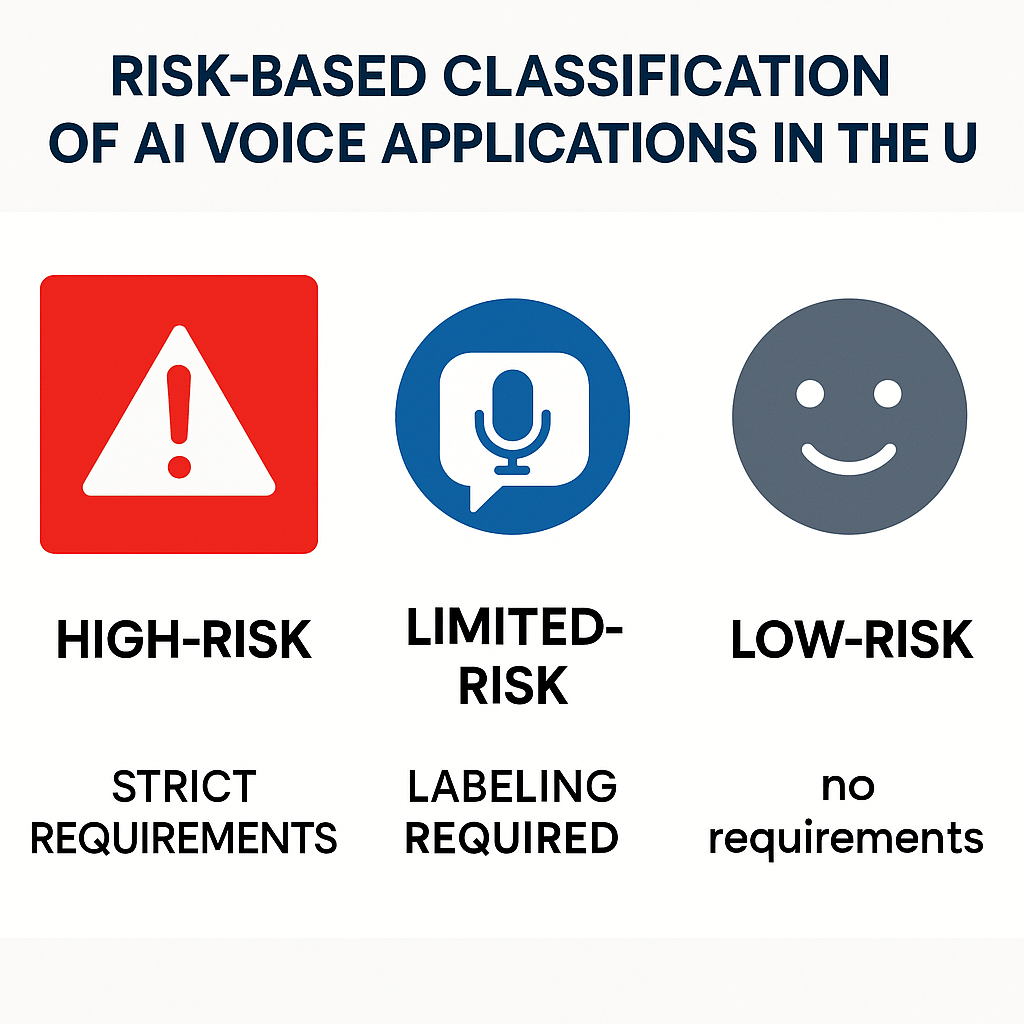

Risk-based classification

- High-risk: deepfake voices used in legal decision-making, identity verification, or safety-critical systems.
- Limited-risk: marketing or entertainment voices that do not mislead or affect legal rights.
- Low-risk: pure creative TTS for fiction or internal drafts.
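A team might encode the three tiers above as a first-pass triage rule. The sketch below is illustrative only and is not a legal determination: the use-case tags, the choice to escalate misleading content to high risk, and the choice to default unknown uses to limited risk are assumptions made for the example.

```python
from enum import Enum


class RiskTier(Enum):
    HIGH = "high"
    LIMITED = "limited"
    LOW = "low"


# Hypothetical tags that mirror the examples given for each tier.
HIGH_RISK_USES = {"legal_decision_making", "identity_verification", "safety_critical"}
LOW_RISK_USES = {"fiction", "internal_draft"}


def classify(use_case, misleads_listeners=False, affects_legal_rights=False):
    """Return a first-pass risk tier for a synthetic-voice use case."""
    # Assumption: anything that misleads or touches legal rights is escalated.
    if use_case in HIGH_RISK_USES or misleads_listeners or affects_legal_rights:
        return RiskTier.HIGH
    if use_case in LOW_RISK_USES:
        return RiskTier.LOW
    # Assumption: marketing, entertainment, and unknown uses land in the
    # middle tier so they still get a human review.
    return RiskTier.LIMITED


if __name__ == "__main__":
    print(classify("marketing"))
    print(classify("marketing", misleads_listeners=True))
    print(classify("fiction"))
```

A rule like this only routes work to reviewers; the final classification still belongs to legal counsel.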

Transparency and labelling duties

Obligations for providers and deployers

Penalties and enforcement trends

Global landscape: UK, US, Canada, Australia and key differences

UK: duty of care and online harms

US: federal guidance, state patchwork

Canada: privacy-first, consent emphasis

Australia: emerging rules, harms and scams

| Jurisdiction | Primary focus | Practical risk for creators |
| --- | --- | --- |
| UK | Platform duty of care, transparency | High: must prove consent and safety steps |
| US | Consumer protection, state deepfake laws | Medium-High: patchwork compliance burden |
| Canada | Privacy and biometric consent | Medium: strict consent and retention rules |
| Australia | Harm prevention and fraud control | Medium: focus on scams and misuse |

Compliance checklist & at-a-glance reference table

Quick pass/fail checklist

- Consent and clear disclosure obtained and recorded: Pass/Fail.
- Written speaker consent for cloning (sample use, languages, duration): Pass/Fail.
- Purpose-limited processing documented (why the voice is used): Pass/Fail.
- Data minimization: only required audio and metadata stored: Pass/Fail.
- Retention schedule and deletion procedures exist: Pass/Fail.
- Encryption for data at rest and in transit: Pass/Fail.
- Role-based access controls and logs: Pass/Fail.
- Voice-locking or fingerprinting to prevent misuse: Pass/Fail.
- Third-party sharing policy and DPIA (if needed): Pass/Fail.
- User-facing disclosure on generated content: Pass/Fail.
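The checklist above can be tracked as data so that a missing answer counts as a fail rather than being silently skipped. This is a minimal sketch; the item keys are hypothetical short names for the ten checklist lines, and the report shape is an assumption.

```python
# Hypothetical keys, one per checklist item above, in the same order.
CHECKLIST = (
    "consent_and_disclosure_recorded",
    "written_speaker_consent",
    "purpose_limitation_documented",
    "data_minimization",
    "retention_and_deletion_procedures",
    "encryption_at_rest_and_in_transit",
    "rbac_and_logs",
    "voice_locking_or_fingerprinting",
    "third_party_policy_and_dpia",
    "user_facing_disclosure",
)


def evaluate(results):
    """Return an overall Pass/Fail plus the items that did not pass.

    Any item that is missing from `results` or not exactly True is a fail.
    """
    failed = [item for item in CHECKLIST if results.get(item) is not True]
    return {"status": "Fail" if failed else "Pass", "failed_items": failed}


if __name__ == "__main__":
    results = {item: True for item in CHECKLIST}
    results["voice_locking_or_fingerprinting"] = False
    print(evaluate(results))
```

Treating unanswered items as failures keeps the overall result conservative, which suits a pre-audit check.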

At-a-glance reference table

| Checkpoint | Pass/Fail | Evidence to collect |
| --- | --- | --- |
| Consent capture (signed file) | Pass/Fail | Consent form, timestamped audio, ID check notes |
| Purpose & DPIA | Pass/Fail | DPIA, product spec, risk log |
| Data retention policy | Pass/Fail | Retention schedule, deletion logs |
| Encryption & keys | Pass/Fail | Key management policy, encryption certs |
| Access controls & audit logs | Pass/Fail | RBAC matrix, audit logs |
| Voice-locking / anti-abuse | Pass/Fail | Feature spec, test logs |

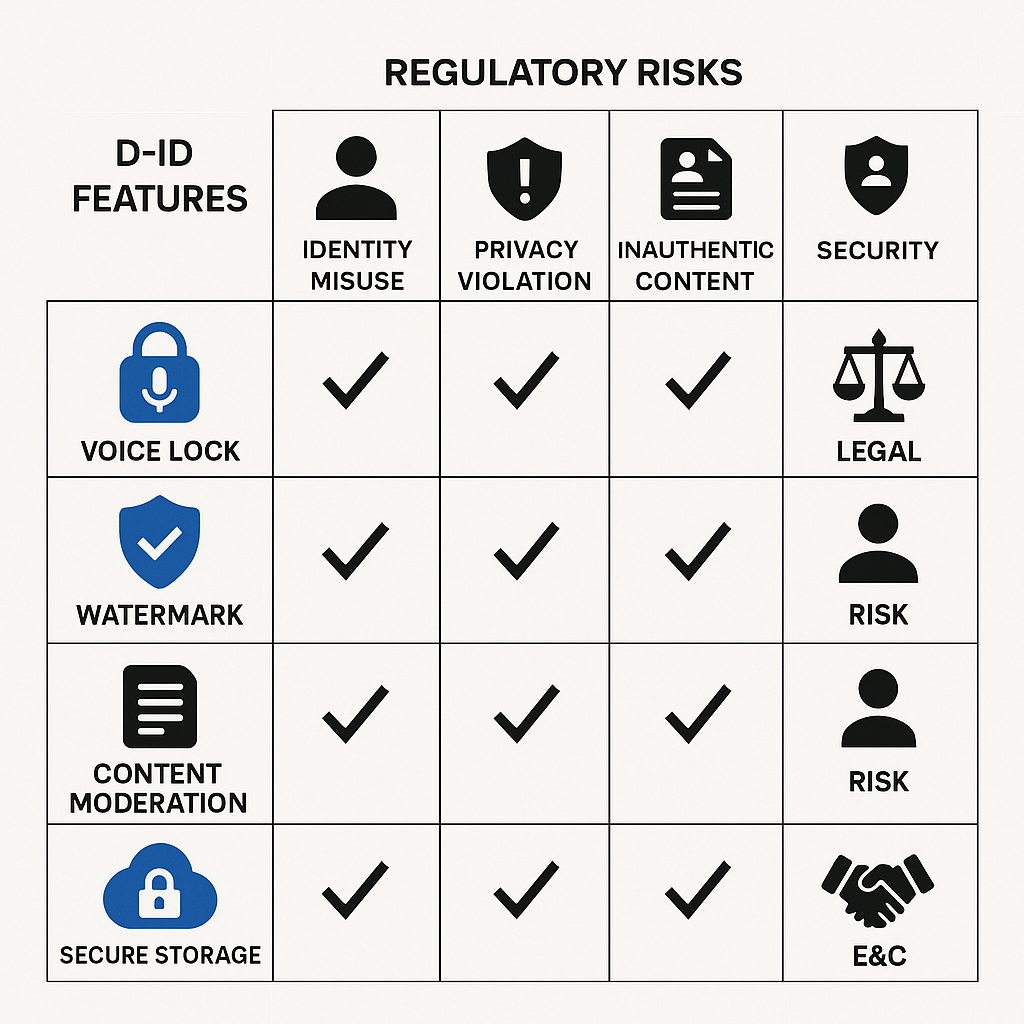

How DupDub Maps to Regulatory Requirements (Feature-to-Risk Matrix)

Feature to Risk Mapping Table

| DupDub Feature | Risk Addressed | Compliance Role |
| --- | --- | --- |
| Speaker-locked voice clones | Unauthorized voice cloning or misuse | Restricts clone generation to verified speakers, reducing impersonation risk |
| Consent capture & workflow | Absence of informed user consent | Stores enforceable consent tied to each voice project |
| Encryption at rest and in transit | Data interception or unauthorized access | Secures both voice inputs and outputs against breaches |
| Audit logs & version tracking | Lack of operational transparency | Provides immutable records for auditing and DPIAs |
| Retention & deletion API | Excessive data storage | Enables configurable retention and support for deletion requests |
| Enterprise & contractual controls | Data sharing with external processors | Embeds SLAs and addenda to govern third-party use |

Best Practices for Product & Legal Teams

- Use platform controls alongside internal compliance policies.
- Collect visible, affirmative consent before any voice recording begins.
- Require Data Protection Impact Assessments (DPIAs) for high-risk use cases.
- Minimize raw voice data retention while retaining necessary logs.
- Implement role-based access and encryption for all voice clone creation tools.
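One way to make affirmative consent checkable is to store it as a structured record and test every intended use against it. The sketch below assumes a hypothetical `ConsentRecord` whose fields follow the consent scope this article mentions (sample use, languages, duration); it does not describe DupDub's consent workflow.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ConsentRecord:
    speaker_id: str            # hypothetical speaker identifier
    granted_at: datetime       # when consent was recorded (UTC)
    expires_at: datetime       # end of the agreed duration (UTC)
    permitted_uses: frozenset  # e.g. {"audiobook", "marketing"}
    languages: frozenset       # e.g. {"en", "fr"}


def consent_covers(record, use, language, when):
    """Return True only if the use, language, and time all fall in scope."""
    in_window = record.granted_at <= when < record.expires_at
    return in_window and use in record.permitted_uses and language in record.languages


if __name__ == "__main__":
    record = ConsentRecord(
        speaker_id="speaker-001",
        granted_at=datetime(2025, 1, 1, tzinfo=timezone.utc),
        expires_at=datetime(2026, 1, 1, tzinfo=timezone.utc),
        permitted_uses=frozenset({"audiobook"}),
        languages=frozenset({"en", "fr"}),
    )
    when = datetime(2025, 6, 1, tzinfo=timezone.utc)
    print(consent_covers(record, "audiobook", "en", when))
    print(consent_covers(record, "marketing", "en", when))
```

Checking scope at render time, not only at sign-up, catches cases where a voice is reused for a purpose the speaker never agreed to.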

Real scenarios that trigger scrutiny

- Unauthorized brand voice reuse. A marketing team clones a spokesperson without a signed license. The clip is used in paid ads and a complaint follows. That sparks legal and public relations issues.
- Deepfake political messaging. A creator synthesizes a candidate's voice for satire, but it spreads as factual audio. Platforms and regulators often treat this as high risk.
- Hidden third-party sharing. A vendor reuses recorded training data across clients. No record of consent or data lineage exists, and regulators demand audits.

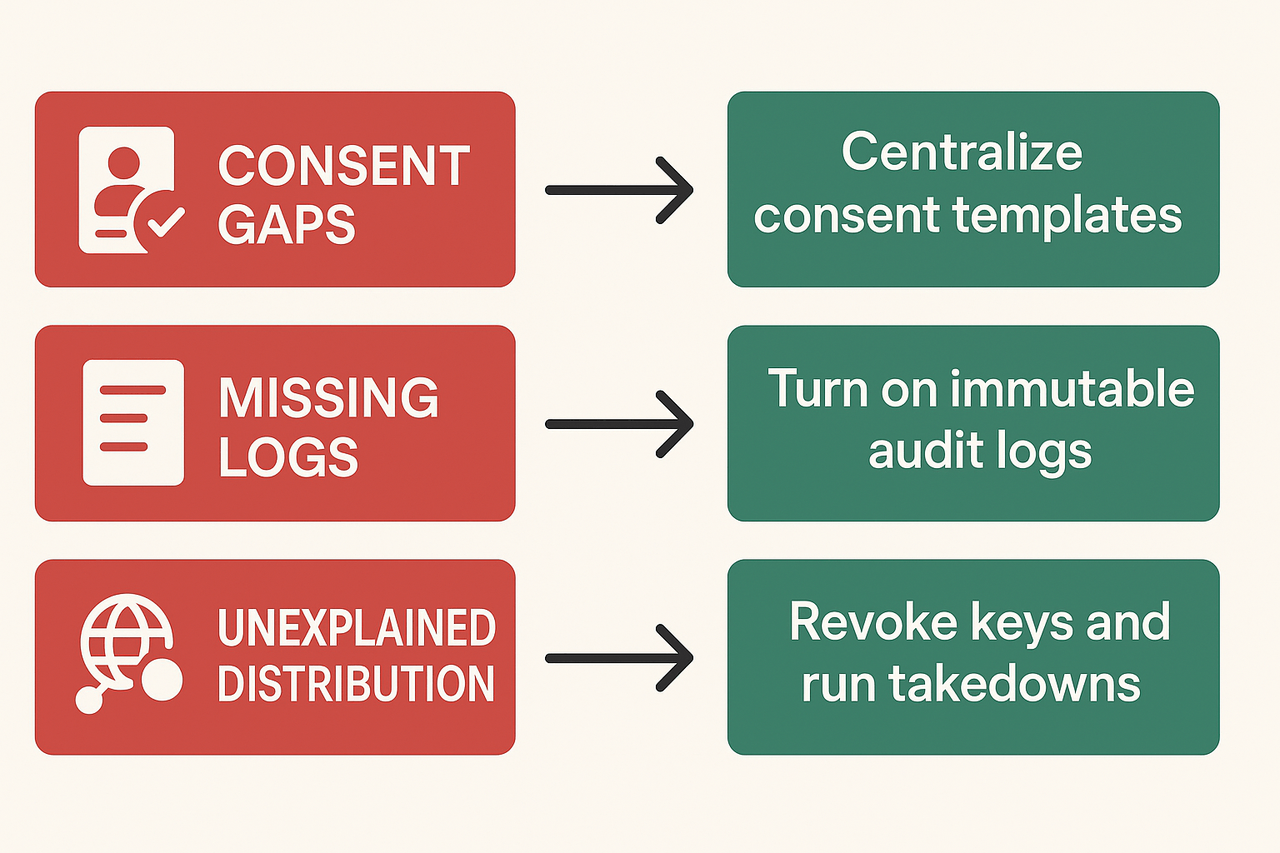

Audit red flags and quick fixes

- Consent gaps. Red flag: missing or vague consent forms. Quick fix: centralize consent templates and store signed records with timestamps. Include scope and rights for cloning.
- Missing logs. Red flag: no audit trail for who created or exported voices. Quick fix: enable immutable logs and exportable reports for every clone and TTS render.
- Unexplained distribution. Red flag: cloned audio found on public platforms with no release record. Quick fix: map distribution channels, revoke keys, and run takedown requests.
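For the missing-logs fix, one common way to approximate an immutable log in application code is a hash chain, where each entry commits to the one before it. The sketch below is an assumption-laden illustration, not how any particular platform implements its audit trail: it makes tampering detectable rather than impossible, and the event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash, event):
    """Hash the previous entry's hash together with this event."""
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(log, event):
    """Append an event, chaining it to the last entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _digest(prev_hash, event)})


def verify(log):
    """Return True if no entry has been altered, removed, or reordered."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    log = []
    append_event(log, {"actor": "alice", "action": "clone_created", "voice": "v1"})
    append_event(log, {"actor": "bob", "action": "export", "voice": "v1"})
    print(verify(log))
    log[0]["event"]["actor"] = "mallory"  # simulate tampering
    print(verify(log))
```

In practice the latest hash would also be stored somewhere the log writer cannot modify, since anyone who can rewrite the whole chain can recompute every hash.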

Implementing Compliant Voice-Cloning Workflows and Best Practices

1. Consent Capture and User Rights

2. Secure Data and Model Handling

3. CI/CD Integration and Access Control

4. Logging, Auditing, and Incident Response

Real-world examples & hypothetical case studies

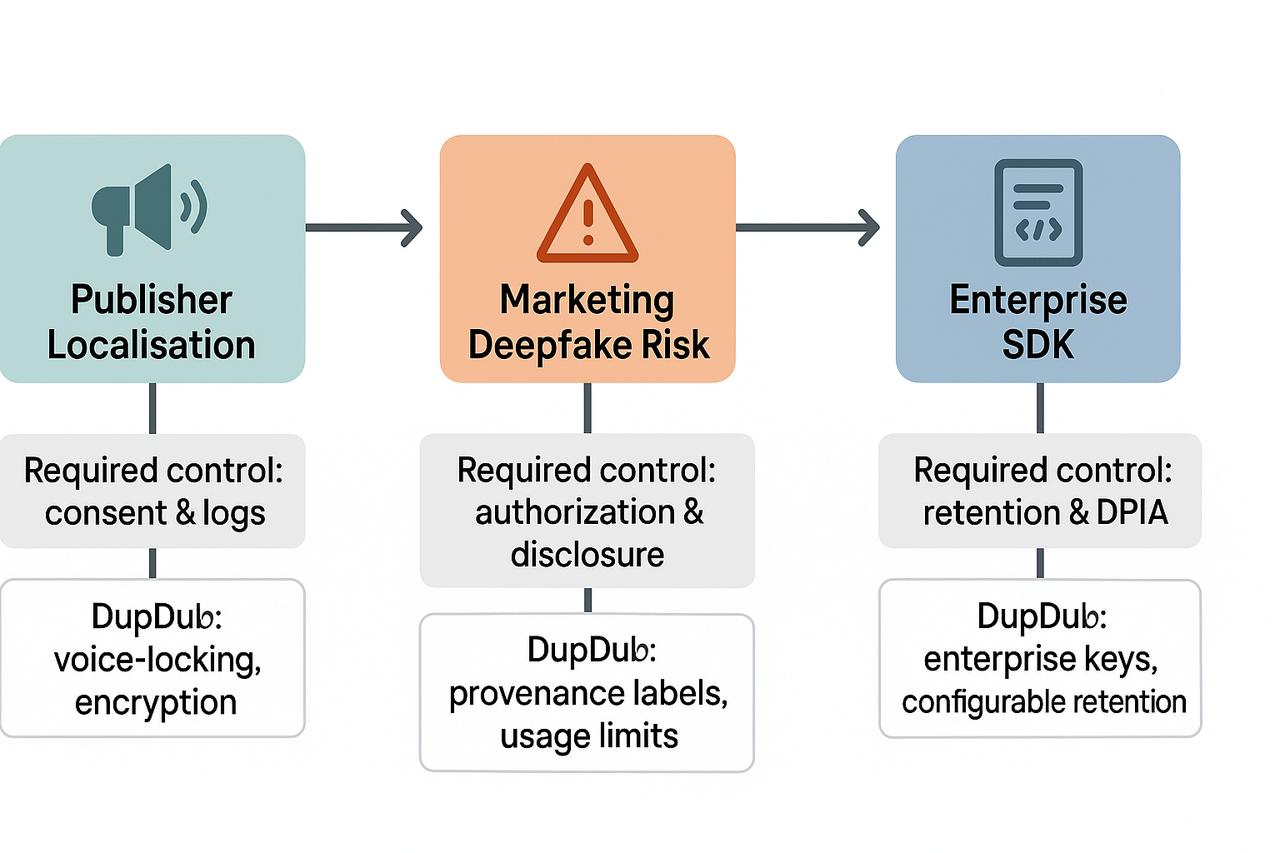

Publisher: localization pipeline

- Required control: explicit speaker consent and documented data flow logs.
- Recommended DupDub feature: voice-locking plus encrypted processing and audit logs.

Marketing deepfake risk

- Required control: written authorization and clear disclosure to viewers.
- Recommended DupDub feature: voice provenance labels and clone usage limits.

Enterprise SDK integration

- Required control: retention policies, access controls, and DPIAs (data protection impact assessments).
- Recommended DupDub feature: enterprise keys, configurable retention, and encryption.

Small creator: safety-first checklist

- Required control: simple consent form and public disclosure in show notes.
- Recommended DupDub feature: short-sample cloning with user confirmation and usage reports.

FAQ: People also ask about TTS regulations

- Is voice cloning legal? Legality of voice cloning for creators

  Laws vary by country and use. You generally need permission to clone an identifiable person for commercial or public use. See our Voice Cloning Compliance page for steps to document consent.

- When is consent required for TTS voice cloning and recordings?

  Consent is usually required if a voice is personal data or clearly identifiable. For marketing or paid projects, get explicit, written consent and a usage licence.

- How does the EU AI Act affect video content and synthetic voices?

  Under the EU AI Act, TTS regulations require risk assessments, transparency, and recordkeeping for certain systems. Check the EU AI Act section for when synthetic voices are treated as high risk.

- What records should compliance teams keep for synthetic voices and TTS?

  Keep consent forms, voice sample sources, processing logs, and retention schedules. Those records speed audits and prove lawful use.

- What are common audit red flags in voice-cloning projects?

  Red flags include missing consents, no encryption, short retention logs, and no voice-locking. Fix these before you scale.

- How can a vendor like DupDub help meet TTS compliance requirements?

  Look for vendor features such as consent workflows, voice-locking, encrypted processing, and GDPR alignment. Compare features in the How DupDub maps to regulatory requirements section.

- Can I use a cloned voice in ads and commercial content?

  Yes, but only with clear rights and disclosures. Platforms and ad rules may also require explicit model releases; always document licenses.