TL;DR - Key takeaways

Secure voice biometrics verify that a live speaker matches a registered voice print. Voice cloning uses synthetic audio to imitate real speakers and can defeat naive audio checks. The risk is real for contact centers, fraud teams, and any voice API.

Three immediate actions you can take today:

- Enable liveness and spoof detection at enrollment and during authentication. This blocks replay attacks and many synthetic samples.

- Combine voice biometrics with a second factor or a time-limited voice challenge. Don’t rely on voice alone for high-risk transactions.

- Vet vendors for encrypted processing, clear retention limits, consent capture, and forensic logging. Run internal red-team tests using cloned voices to validate controls.

Prioritize the contact center IVR, authentication APIs, and enrollment databases for immediate hardening. Map technical controls to GDPR, HIPAA, and CCPA requirements, and assign a compliance owner.

What is voice biometrics vs. voice cloning? Clear definitions

Voice biometrics is a way to confirm identity from a person’s voice. In verification, the system asks, "Are you who you claim to be?" In identification, the system asks, "Who spoke this?" Both use voice features, but they solve different problems and have different error profiles.

Verification versus identification

Verification (1:1) checks a live sample against a stored voiceprint. It answers yes or no. Identification (1:N) compares a sample to many profiles to find a match. Because each additional enrolled profile is another chance for a false match, identification tends to produce more false positives as the database grows, so its thresholds need separate tuning.
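A minimal sketch of the difference, assuming voiceprints are already available as plain numeric feature vectors. The function names, the cosine-similarity comparison, and the 0.8 threshold are illustrative assumptions, not a description of any particular product.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def verify(sample, claimed_voiceprint, threshold=0.8):
    """1:1 check: does the sample match the single claimed identity?"""
    return cosine_similarity(sample, claimed_voiceprint) >= threshold

def identify(sample, enrolled, threshold=0.8):
    """1:N search: return the best-matching speaker ID, or None if no
    enrolled voiceprint reaches the threshold."""
    best_id, best_score = None, threshold
    for speaker_id, voiceprint in enrolled.items():
        score = cosine_similarity(sample, voiceprint)
        if score >= best_score:
            best_id, best_score = speaker_id, score
    return best_id
```

Note that `identify` runs one comparison per enrolled profile, which is why its false-match exposure grows with the size of the database while `verify` always makes a single comparison.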

What is voice cloning and synthetic speech?

Voice cloning copies a speaker’s vocal characteristics from a sample. Synthetic speech, or text-to-speech, generates voice from text. Modern clones can sound very natural and are sometimes hard to distinguish from a real speaker.

Why this distinction matters for authentication

Treating a voiceprint as proof of a live person is risky. Cloned or replayed audio can trick verification systems. Common consequences include account takeovers, fraudulent transactions, and brand damage.

Key practical differences to remember:

- Verification is a claim check, so it requires liveness or challenge-response.

- Identification is useful for forensics, but avoid using it alone for access control.

- Synthetic voice makes single-factor voice auth unsafe unless you add controls.

Keep voice checks as one element in a layered authentication strategy. Use liveness and secondary factors to lower fraud risk.

Why secure voice verification matters now (risks & business impact)

Voice-based fraud is growing fast, and organizations need clear reasons to act now. Voice biometrics help stop impostors, but only when systems are designed to resist synthetic audio and replay attacks. Decision-makers should view voice verification as part of layered authentication, not a lone gatekeeper.

Rising attack surface and business costs

According to "Spotting the Deepfake" (IEEE Transmitter, 2024), more than 300 articles on deepfake detection tools were published in the IEEE Xplore digital library in the first six months of 2024 alone, which underscores how quickly adversaries and researchers are advancing. As voice cloning tools improve, fraud becomes cheaper and more scalable. That raises direct and indirect costs for enterprises.

Common enterprise impacts:

- Revenue loss from fraud and chargebacks, often in high-value accounts.

- Higher contact center costs, due to longer verification and more manual reviews.

- Customer churn after identity theft or poor authentication experiences.

- Legal and compliance fines when voice data handling is weak.

Investing in secure voice verification reduces fraud exposure and operational burden. Start by mapping risk, adding anti-spoofing controls, and tying voice checks to other factors like MFA and behavioral signals. That makes the business case clear: lower losses, faster handling, and better customer trust.

How secure voice verification works — technical overview (simple)

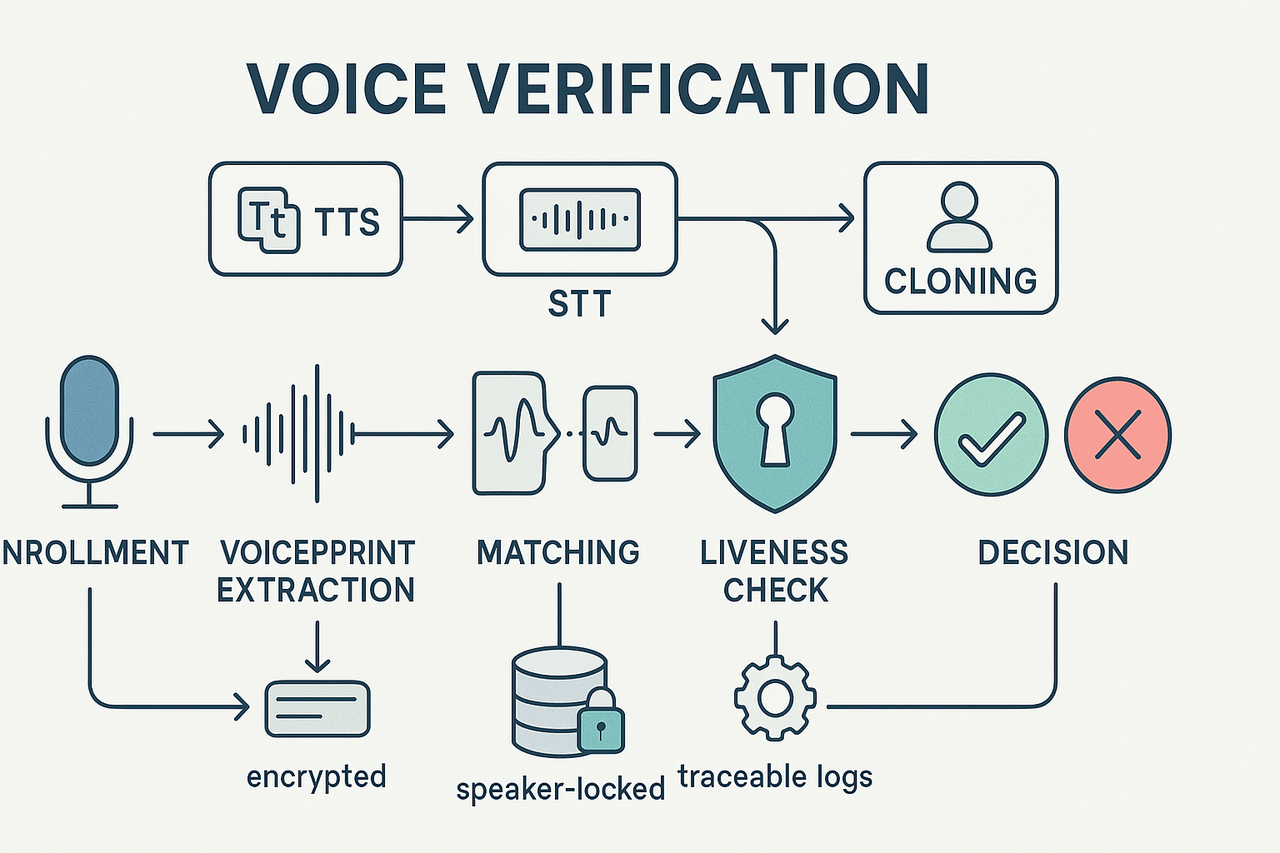

Secure voice verification begins with an enrollment phase where a speaker's voice samples are captured to generate a unique voiceprint. This voiceprint is a digital representation of distinctive vocal features and is securely stored for future comparisons.

1. Voice Enrollment and Voiceprint Generation

- Sample Capture: Users provide voice recordings, often by repeating predefined phrases.

- Pre-processing: Audio is cleaned and normalized to remove noise and ensure consistency.

- Feature Extraction: Acoustic features such as MFCCs (Mel-Frequency Cepstral Coefficients) are extracted.

- Template Creation: Features are aggregated into a secure voiceprint — a compact digital model of the user's voice.

- Storage: Templates are encrypted, locked to individual users (speaker-locking), and stored with logging metadata for traceability.
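The template-creation step above can be sketched as follows. This assumes feature extraction has already produced one fixed-length feature vector per enrollment utterance (the extractor itself is not shown), and it uses simple averaging plus L2 normalization as the aggregation. Production systems may aggregate differently; `create_voiceprint` is a hypothetical name.

```python
import math

def create_voiceprint(utterance_features):
    """Average per-utterance feature vectors and L2-normalize the result.

    utterance_features: list of equal-length lists of floats, one per
    enrollment utterance (assumed to come from an upstream extractor).
    """
    if not utterance_features:
        raise ValueError("at least one enrollment utterance is required")
    dim = len(utterance_features[0])
    if any(len(vec) != dim for vec in utterance_features):
        raise ValueError("all feature vectors must have the same length")

    count = len(utterance_features)
    # Element-wise mean across utterances smooths out per-recording noise.
    mean = [sum(vec[i] for vec in utterance_features) / count for i in range(dim)]

    # Normalizing to unit length keeps later similarity scores comparable.
    norm = math.sqrt(sum(x * x for x in mean))
    if norm == 0:
        raise ValueError("mean feature vector is all zeros")
    return [x / norm for x in mean]
```

Encryption and storage of the resulting template are deliberately left out of this sketch.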

2. Verification and Scoring Process

During verification, a live voice sample is processed identically and compared to the stored voiceprint:

- Live Sample Analysis: The system extracts features from the new input.

- Matching: These features are compared against the stored model.

- Scoring: A similarity score is generated — higher scores imply higher confidence.

- Threshold Decision: If the score exceeds a set threshold, verification succeeds.

Key error metrics:

- False Accept Rate (FAR): Incorrectly accepting impostors.

- False Reject Rate (FRR): Incorrectly rejecting legitimate users.

- Equal Error Rate (EER): The point where FAR equals FRR, often used as a benchmark for system tuning.

For standardized testing, frameworks such as ISO/IEC 19795-1:2021 are used.
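To make the error metrics concrete, here is a small sketch that computes FAR and FRR at a given threshold and approximates the EER by scanning the observed scores. It assumes you have two labeled lists of similarity scores, one from genuine attempts and one from impostor attempts. The function names are illustrative, and this is not the evaluation procedure defined by any standard.

```python
def far_frr(genuine_scores, impostor_scores, threshold):
    """Return (FAR, FRR) when scores >= threshold are accepted."""
    false_accepts = sum(1 for s in impostor_scores if s >= threshold)
    false_rejects = sum(1 for s in genuine_scores if s < threshold)
    return (false_accepts / len(impostor_scores),
            false_rejects / len(genuine_scores))

def approximate_eer(genuine_scores, impostor_scores):
    """Try each observed score as a threshold and return the
    (threshold, FAR, FRR) triple where FAR and FRR are closest."""
    candidates = sorted(set(genuine_scores) | set(impostor_scores))
    best = None
    for t in candidates:
        far, frr = far_frr(genuine_scores, impostor_scores, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, t, far, frr)
    return best[1], best[2], best[3]
```

Raising the threshold lowers FAR and raises FRR, so high-risk flows usually run at a stricter operating point than the EER threshold.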

3. Anti-Spoofing and Liveness Checks

To prevent fraud via recordings or synthetic voices:

- Challenge-Response: Users repeat random prompts.

- Spectral Analysis: Detect anomalies typical of synthesized speech.

- ML-based Detection: Identify deepfakes through AI pattern recognition.

- Multiple Modalities: Combining the above methods increases system resilience.
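As one illustration of challenge-response, the sketch below issues a random digit prompt that expires quickly and can be answered only once. It assumes a separate speech-to-text step supplies the spoken digits as a string. The 30-second window, the dictionary layout, and the function names are assumptions made for the example.

```python
import secrets
import time

CHALLENGE_TTL_SECONDS = 30  # assumed validity window, tune per flow

def issue_challenge(num_digits=6, now=None):
    """Create an unpredictable digit prompt bound to an expiry time."""
    now = time.time() if now is None else now
    digits = "".join(secrets.choice("0123456789") for _ in range(num_digits))
    return {"prompt": digits, "expires_at": now + CHALLENGE_TTL_SECONDS, "used": False}

def check_response(challenge, transcribed_text, now=None):
    """Accept only a fresh, unused challenge whose digits were spoken back."""
    now = time.time() if now is None else now
    if challenge["used"] or now > challenge["expires_at"]:
        return False
    # One attempt per challenge: a wrong answer also burns the prompt.
    challenge["used"] = True
    # Keep only ASCII digits so spaces or filler words from the transcript are ignored.
    spoken = "".join(ch for ch in transcribed_text if ch in "0123456789")
    return secrets.compare_digest(challenge["prompt"], spoken)
```

Because the prompt is random and single-use, a recording of an earlier session does not contain the right digits. A real-time cloning tool could still speak them, which is why this check is combined with spoof detection rather than used alone.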

4. Design Considerations and Platform Controls

- Performance vs. Accuracy: More samples improve accuracy but increase processing time.

- Latency Management: Optimize algorithms for real-time use.

- Security Controls: Encrypted templates, user-bound IDs, and trace logs ensure data integrity and auditability.

Common attack vectors and practical mitigation strategies

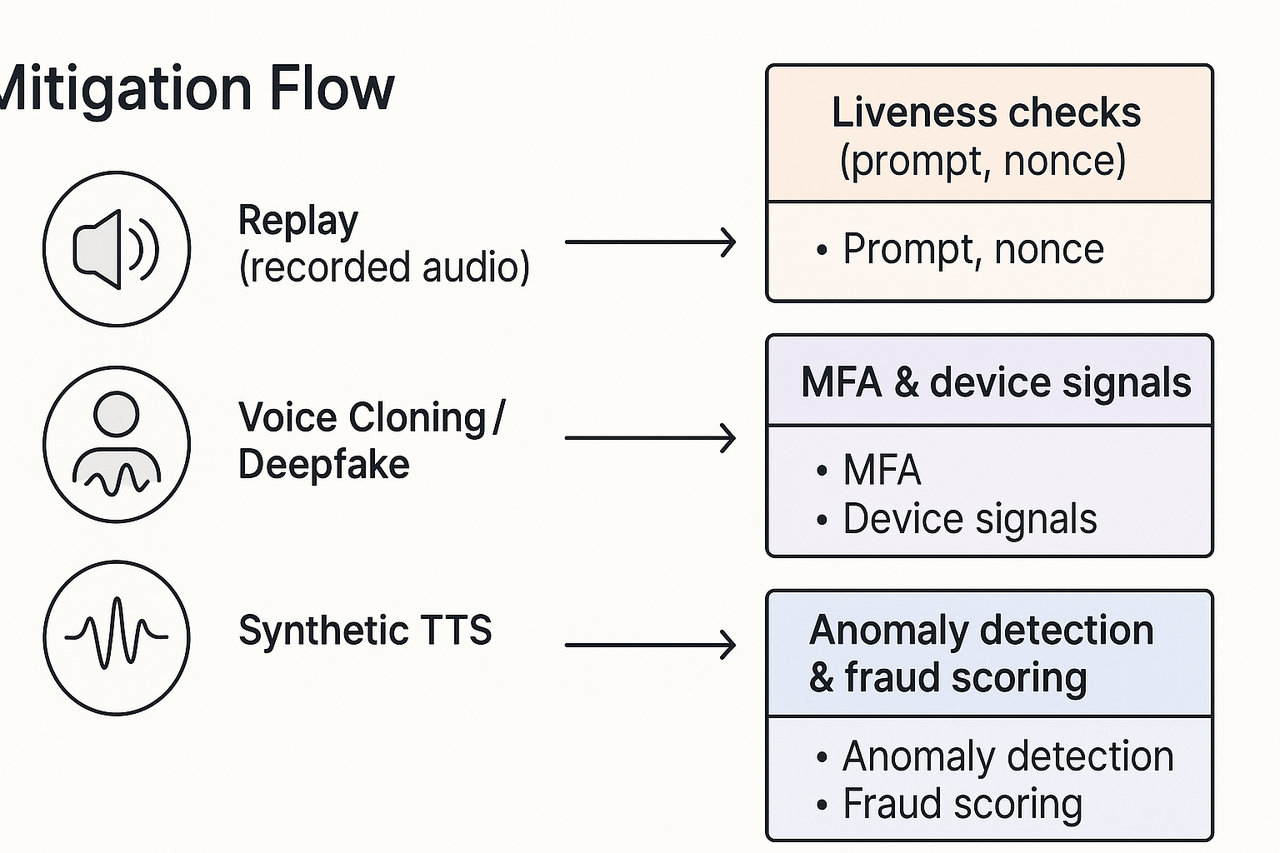

Voice biometrics can stop many fraud attempts, but attackers have several ways around a naive deployment. The main threats are replay attacks with recorded audio, voice cloning and deepfakes, and mass abuse using synthetic TTS. Below are clear, practical defenses you can add without wrecking customer experience.

Replay attacks: stop recorded audio

Replay attacks use simple recordings to fool a system. Defenses work best when you add active checks and signal fusion. Use liveness checks (ask for random phrases or short numeric prompts), session-bound nonces, and acoustic challenge-response. Combine device and channel signals, like codec fingerprints and TLS session IDs, to spot replayed streams.

Practical steps:

- Enforce randomized prompts for high-risk actions.

- Detect identical audio fingerprints and repeated timestamps.

- Reject short, low-entropy submissions or ones that fail codec checks.
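The "identical audio fingerprints" check can be sketched in its simplest form as an exact-match lookup on a hash of the submitted audio bytes. This is an assumption-laden illustration: `ReplayDetector` is a hypothetical class, the store is in memory, and an exact hash only catches byte-identical resubmissions. Re-recorded or re-encoded audio would need an acoustic fingerprint, which is not shown here.

```python
import hashlib

class ReplayDetector:
    """Flags audio payloads whose exact bytes were already submitted."""

    def __init__(self):
        # In production this would be a shared store with expiry;
        # an in-memory set is used here only for illustration.
        self._seen = set()

    def is_replay(self, audio_bytes):
        fingerprint = hashlib.sha256(audio_bytes).hexdigest()
        if fingerprint in self._seen:
            return True
        self._seen.add(fingerprint)
        return False
```

Two genuine live utterances will almost never be byte-identical, so an exact repeat is a strong signal on its own and a cheap first filter before heavier analysis.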

Voice cloning and deepfake speech: detect and deny

Cloned voices sound real, but they leave artifacts in the spectral and temporal domains. You should not rely on voice match alone. Fuse speaker models with behavioral signals, account history, and device context. Train detectors on synthetic samples and use model-agnostic anomaly scoring.

Practical steps:

- Require step-up authentication for new voice enrollments.

- Use behavioral biometrics (typing, mouse, call patterns) alongside voice.

- Maintain a fraud score that triggers additional verification.
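A fraud score that triggers additional verification might look like the sketch below. The input signals, the weights, and the two cut-offs are all illustrative assumptions. In practice they would be fitted to your own fraud data rather than chosen by hand.

```python
def fraud_score(voice_match, spoof_probability, new_device, recent_failed_attempts):
    """Combine signals into a 0..1 risk score (weights are illustrative).

    voice_match: similarity score in 0..1 from the speaker model
    spoof_probability: 0..1 output of a synthetic-speech detector
    new_device: True if the device or number has not been seen before
    recent_failed_attempts: count of recent failed verifications
    """
    score = 0.0
    score += 0.4 * (1.0 - voice_match)      # weak voice match raises risk
    score += 0.4 * spoof_probability        # detector suspects synthetic audio
    score += 0.1 if new_device else 0.0
    score += 0.1 * min(recent_failed_attempts, 3) / 3
    return min(score, 1.0)

def decide(score, step_up_at=0.3, deny_at=0.7):
    """Map a risk score to an action: allow, step_up, or deny."""
    if score >= deny_at:
        return "deny"
    if score >= step_up_at:
        return "step_up"
    return "allow"
```

For example, a strong voice match from a new device with a moderately suspicious spoof score lands in the step-up band, so the caller is asked for a second factor instead of being rejected outright.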

Synthetic TTS abuse: scale and channel controls

Attacks using TTS scale quickly. Rate-limit voice actions and validate content intent. Tag known TTS signatures, monitor burst patterns, and block unverified API keys or callers.

Practical steps:

- Apply per-account and per-number rate limits.

- Monitor for identical phrases across accounts.

- Validate call origin and require signed API tokens.
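Per-account and per-number rate limits can be sketched with a sliding-window counter keyed by account ID or caller number. `SlidingWindowLimiter` is a hypothetical helper that keeps state in memory. A real deployment would typically back it with a shared store so limits hold across servers.

```python
import time
from collections import defaultdict, deque

class SlidingWindowLimiter:
    """Allow at most max_attempts per key within window_seconds."""

    def __init__(self, max_attempts, window_seconds):
        self.max_attempts = max_attempts
        self.window_seconds = window_seconds
        self._attempts = defaultdict(deque)  # key -> timestamps of allowed attempts

    def allow(self, key, now=None):
        now = time.monotonic() if now is None else now
        attempts = self._attempts[key]
        # Drop timestamps that have aged out of the window.
        while attempts and now - attempts[0] >= self.window_seconds:
            attempts.popleft()
        if len(attempts) >= self.max_attempts:
            return False
        attempts.append(now)
        return True
```

Usage would be one limiter per dimension, for example one keyed by account ID and another keyed by calling number, with a verification attempt proceeding only if both return True.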

Layer and operate: reduce false accepts

A layered approach reduces false accepts and keeps UX smooth. Prioritize low-friction checks first, then step up only on risk. Log full audio metadata, run regular red-team tests, and create incident playbooks for suspected deepfakes.

Quick rollout checklist:

- Add randomized liveness prompts for high-risk flows.

- Fuse voice scores with device and behavioral signals.

- Implement rate limits and anomaly alerts.

- Log and review flagged calls weekly.

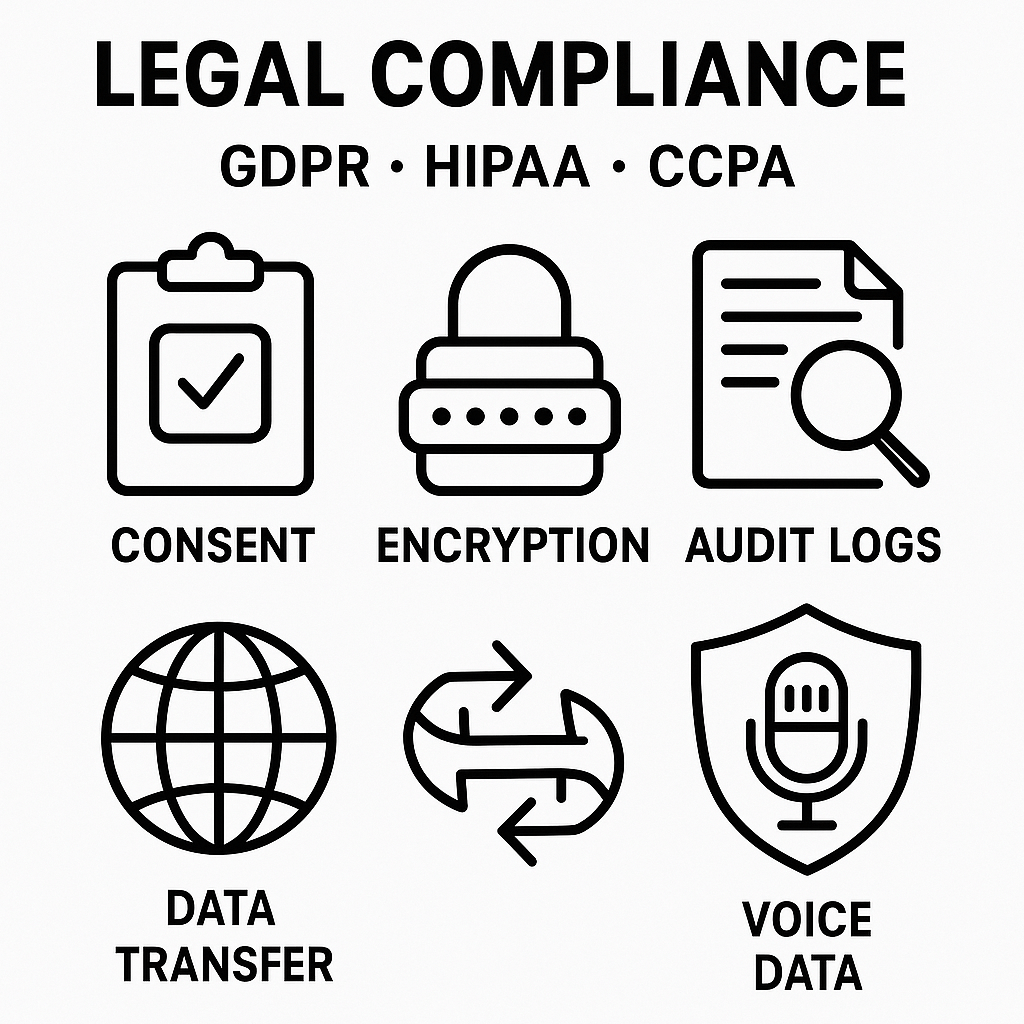

Compliance & privacy: what legal teams need to know (GDPR, HIPAA, CCPA)

Legal teams need a short, practical map from rules to technical controls for voice biometrics and voice data. Start by treating voice as personal data, then align consent, storage, retention, and access controls to the applicable law. This section shows concrete controls you can adopt today.

Key legal roles and cross-border concerns

Decide if you are a controller or processor, and record that role in contracts. If you transfer voice data across borders, use approved safeguards such as adequacy decisions or standard contractual clauses and document the legal basis.

Practical controls mapped to rules

- Minimize, then justify: collect only the voice data you need and keep a retention schedule.

- Consent and alternatives: obtain clear consent when required. Under Article 4(11) of the GDPR, "consent" of a data subject means any freely given, specific, informed and unambiguous indication of that data subject's wishes by which he or she, by a statement or by a clear affirmative action, signifies agreement to the processing of personal data relating to him or her (source: EUR-Lex - 52017AE0655).

- Technical measures: use encryption at rest and in transit, pseudonymization (replace identifiers), and strict key management.

- Operations: log access, use role-based access control, and keep immutable audit trails.

- HIPAA-specific: apply a Business Associate Agreement and limit PHI in voice samples.

- CCPA-specific: support consumer access and deletion, and document sale decisions.

Implement these controls in policy, contracts, and tech, and review them with legal and security teams regularly.
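One way to approximate an immutable audit trail is a hash-chained log, where each entry includes the hash of the previous one so that later edits become detectable. The sketch below is a simplified illustration with hypothetical field names. It makes tampering evident rather than impossible, and it omits timestamps, signing, and durable storage.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(body):
    """Stable SHA-256 over the entry's fields."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

def append_entry(log, actor, action, resource):
    """Append an access record linked to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    body = {"actor": actor, "action": action, "resource": resource, "prev_hash": prev_hash}
    log.append({**body, "hash": _digest(body)})

def verify_chain(log):
    """Return True only if no entry was altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True
```

Running `verify_chain` on a schedule, and anchoring the latest hash somewhere the log writers cannot modify, gives auditors evidence that access records were not rewritten after the fact.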

Vendor selection checklist & implementation roadmap (buyer’s guide)

Start with a short, practical checklist to compare vendors fast. Look for clear controls for enrollment, spoof detection, data encryption, and retention. Make sure the vendor supports enterprise connectors for contact centers and modern APIs. Ask for a pilot or trial to validate features in your environment.

Must-have security and privacy checks

Require voice biometrics enrollment protections, liveness or spoof detection, and cryptographic key management. Verify end-to-end encryption in transit and at rest, and get a written data retention policy. Confirm role-based access controls and audit logs for all voice assets. Ensure the vendor documents how they lock cloned voices to the original speaker.

Performance and integration metrics to request

Ask for false acceptance rate (FAR), false rejection rate (FRR), and average verification latency. Request benchmarks for varied channel quality, accents, and short utterances. Confirm supported integrations: SIP, cloud contact-center platforms, REST APIs, and file-based batch workflows. Check scalability limits: concurrent verifications per second and regional deployment options.

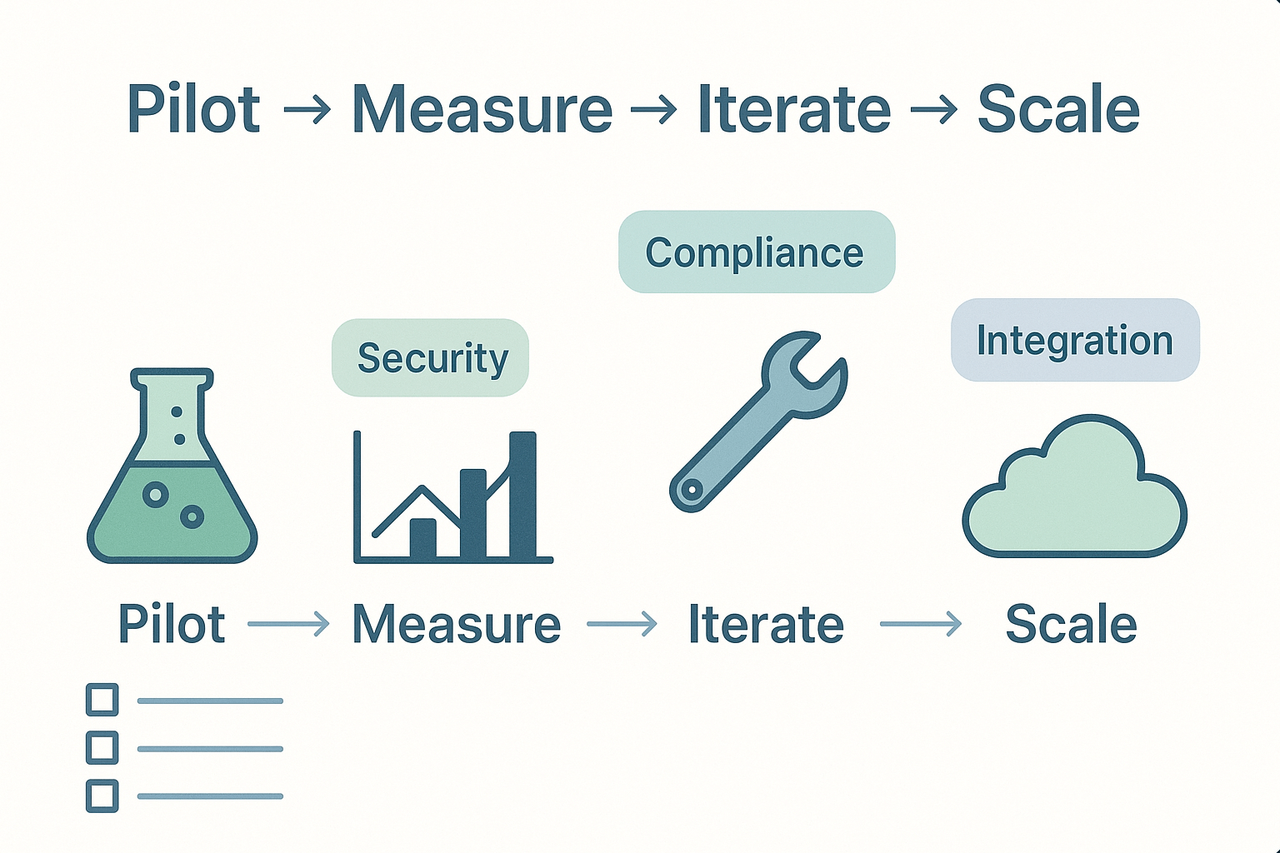

Phased rollout plan: pilot to scale

- Pilot: Define scope, sample size, and threat scenarios. Run the pilot with live traffic or a mirrored stream.

- Measure: Capture FAR, FRR, latency, and user friction. Include privacy and legal review.

- Iterate: Tweak thresholds, anti-spoof rules, and logging. Re-test with edge cases.

- Scale: Deploy to production channels, add redundancy, and automate monitoring.

Vendor questions to probe cloning controls and policy

- How do you prevent unauthorized voice cloning and enforce consent?

- What encryption standards do you use in transit and at rest?

- How long do you retain raw voice and derived models, and can we set retention rules?

- Do you provide connectors for contact-center platforms and REST APIs?

- Can we run a time-bound pilot or sandbox with production-like traffic?

Pilot access is one of the fastest ways to validate claims. Use a short proof of concept to verify security, accuracy, and integration. Keep testing focused on attack scenarios and compliance checks. This approach reduces procurement risk and speeds safe adoption.

Case vignettes: anonymized real-world examples and outcomes

Two short, anonymized vignettes show practical ROI from secure voice verification and the defensive mixes teams used. Each vignette gives the problem, the controls applied, and measurable outcomes so security and compliance leads can adapt the lessons quickly.

Stop fraud fast: financial contact center reduces identity attacks

Problem: A large bank saw a spike in social-engineered calls using cloned voices to bypass agents. Attackers used publicly available audio and targeted high-value accounts.

Defensive mix used:

- Enrolled layered voice biometrics for high-risk transactions (voiceprint plus challenge-response).

- Added device risk scoring and behavioral voice analytics (speech patterns, timing).

- Hardened agent workflows: mandatory secondary verification for transfers over threshold.

Outcome: Fraud attempts that previously succeeded dropped by about 78% within three months. Average loss per attempted fraud case fell significantly, and call handle time changed only marginally. Lesson: combine biometric verification with behavioral signals and process changes to cut fraud without harming CX.

Compliance-first rollout: healthcare tele-triage keeps audit trail and trust

Problem: A telehealth provider needed voice-based auth while meeting HIPAA and patient consent audit requirements.

Defensive mix used:

- Minimal voice cloning risk: voice models locked to the speaker and encrypted in transit.

- Consent capture recorded and tied to session metadata.

- Role-based access to voice templates and retention policies aligned with privacy law.

Outcome: The provider passed a third-party compliance audit with no major findings. Patient satisfaction scores stayed stable, and time to verify callers dropped by 40%.

These vignettes show that secure voice systems deliver measurable fraud reduction and audit readiness when paired with process and privacy controls.

Use three practical next steps to close the evaluation. Run a short pilot that includes voice biometrics and attack simulations. Keep the pilot timeline to four to six weeks.

Run a focused pilot with recorded attack tests

Pick key authentication flows and run a four- to six-week pilot with real agents. Include cloned samples, replayed audio, and synthetic voice tests. Measure false accept and false reject rates, and time to detect attacks.

Require vendor proof of liveness and strong encryption

Ask vendors to demo liveness detection and explain their encryption and key management. Verify that data is encrypted during processing and in storage, and that cloned voices are locked to the original speaker. Get written evidence before production rollout.

Build a compliance mapping to policy and operations

Map technical controls to GDPR, HIPAA, and CCPA requirements in plain terms. Define consent, retention, and access rules, and document incident response steps. Obtain legal and operations sign-off before wider deployment.

FAQ: People Also Ask + operational questions

Is voice biometrics secure against deepfakes?

Voice biometrics helps, but isn't fully secure against high-quality deepfakes. Add liveness detection, randomized challenge-response, and model fingerprinting to reduce risk.

How to store voice data safely for compliance?

Encrypt voice data at rest and in transit, minimize retention, and use role-based access control. Hash or tokenize identifiers and keep detailed access logs for audits.
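As a sketch of tokenizing identifiers, the example below replaces a caller identifier with a keyed HMAC-SHA-256 token. A keyed hash is used instead of a plain hash because identifiers such as phone numbers are easy to enumerate, so an unkeyed hash can be reversed by brute force. The function name and the key handling are assumptions. In practice the key would live in a key management system and be rotated.

```python
import hashlib
import hmac

def tokenize_identifier(identifier, secret_key):
    """Return a stable, non-reversible token for an identifier.

    identifier: str such as a phone number or account ID
    secret_key: bytes held outside the voice data store
    """
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()
```

The same identifier and key always yield the same token, so records can still be joined for fraud analysis without storing the raw identifier next to the voice data.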

When should teams not rely on voice biometrics alone for authentication?

Don't use it as the sole factor for high-value actions, account recovery, or legal ID checks. Pair with strong MFA and continuous behavioral signals.