TL;DR — What a 'Voice Conversion Ethics Code' Must Do

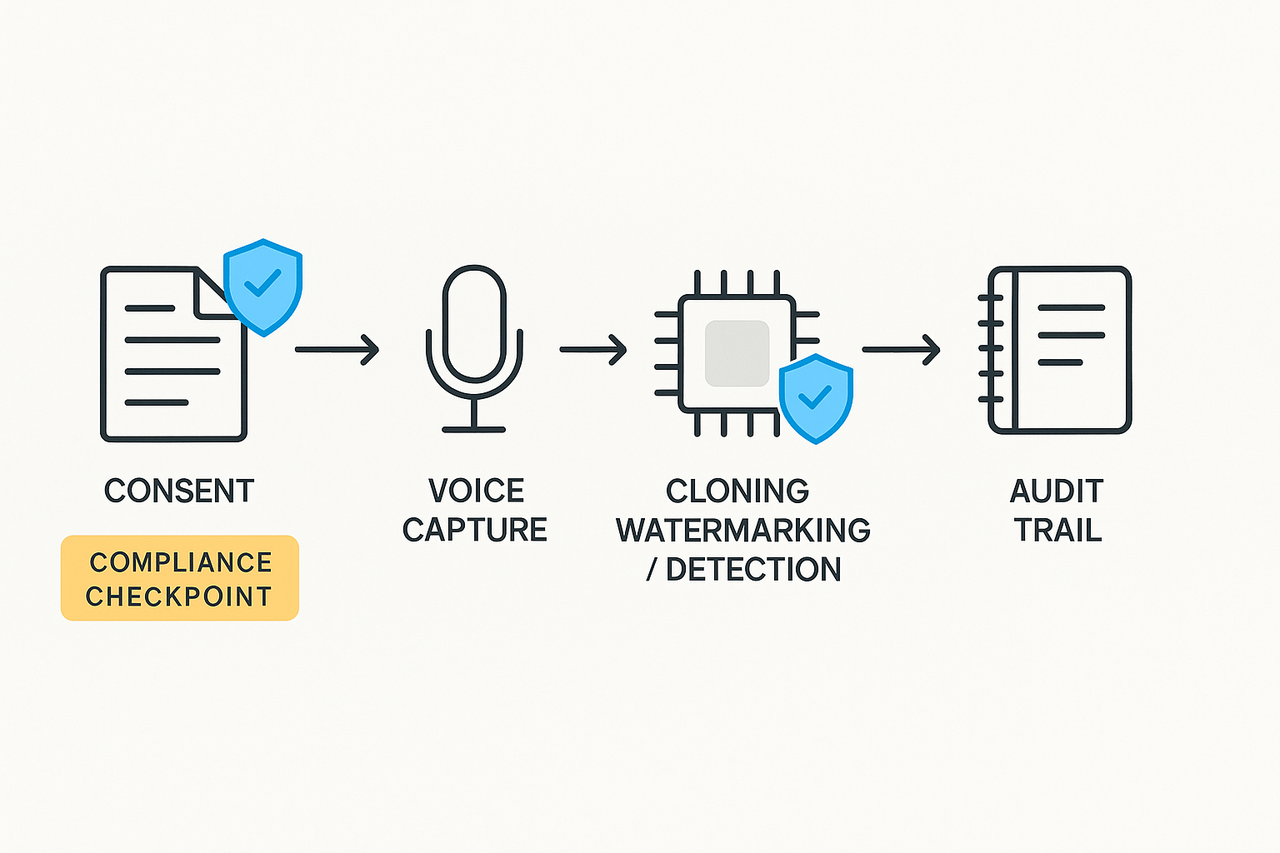

An effective voice conversion ethics code must set clear consent rules, technical safeguards like watermarking and detection, operational controls, and governance with immutable audit trails. It tells creators, product teams, and compliance officers who may clone voices, how consent is recorded, and what to log for audits.

Quick checklist:

- Obtain recorded, time-stamped consent that names uses and rights.
- Apply robust watermarking and detection to every synthetic output.
- Capture provenance data and a tamper-evident audit trail for each asset.
- Limit access, require approvals, and keep a remediation playbook for misuse.

Who acts and first steps: creators, platform teams, and legal must align. Start by updating consent forms, enabling technical markers, and turning on detailed logging. Review policies quarterly and run a one-month compliance test.

Why a Voice Conversion Ethics Code Matters Now

Voice cloning and automated dubbing are moving from labs into every creator toolkit. A clear voice conversion ethics code is now essential to stop misuse, protect talent, and keep public trust. Rapid adoption means harms show up fast: impersonation, unauthorized commercial reuse, and erosion of audience confidence.

Who’s most at risk and how policies protect them

- Voice actors: synthetic copies can undercut contracts and damage earning power. Clear consent rules protect income and reputation.
- Music producers: sampled or cloned vocals can trigger copyright and licensing disputes. Policies reduce legal exposure.
- Audiences and institutions: listeners may be deceived, and brands risk reputational harm. Transparency restores trust.
- Creators and platforms: failing to police misuse invites takedowns and regulatory scrutiny. Rules create a defensible stance.

Ethics codes are practical, not just idealistic. They define consent workflows, recording and storage rules, and removal procedures. They also reduce legal risk and speed enterprise adoption. Teams evaluating tools should prefer privacy-first platforms that provide audit trails, watermarking, and clones locked to the original speaker, so that compliance is operational and scalable.

The Global Regulatory Landscape: Laws & Emerging Rules to Watch

A voice conversion ethics code must map the laws and emerging rules that affect consent, biometric data, and liability. Start by treating synthetic voice as a privacy and biometric risk. This section summarizes current U.S. state and federal activity, EU rules like GDPR and the AI Act, and differing APAC approaches.

U.S. federal and state activity

Many U.S. states already restrict biometric uses. Notably, the Illinois Biometric Information Privacy Act (2008) defines "biometric identifier" as "a retina or iris scan, fingerprint, voiceprint, or scan of hand or face geometry." Expect more state bills on deepfakes and voice fraud. Operational check: assume voiceprint rules may apply to cloned voices.

EU and UK: privacy and AI rules

GDPR treats audio as personal data when it can identify someone. The EU AI Act (Regulation (EU) 2024/1689), in force since August 2024 with obligations phasing in over the following years, adds rules for high-risk AI systems and transparency duties for synthetic content, which may cover voice cloning used in public or commercial services. Teams must map lawful basis, data minimization, and DPIAs (data protection impact assessments).

APAC and other regions

APAC laws vary by country, from strict biometric rules to lighter AI guidance. Some jurisdictions focus on consent and consumer harm, others on national security. Track local consent, retention, and cross-border transfer rules.

Cross-jurisdiction monitoring checklist

- Maintain a regulatory map, updated monthly.
- Record explicit consent with time stamps and scope.
- Classify voice assets as biometric or personal data.
- Log processing, retention, and deletion actions.
- Conduct DPIAs and regular legal reviews.
- Train teams on local disclosure and opt-out rules.

Practical guidance: when the law is unclear, apply the strictest nearby standard as a default. That aligns legal obligations with operational controls, and lowers compliance risk.
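
The "strictest nearby standard" default can be sketched as a merge over per-jurisdiction rules. The `VoiceRule` fields, the region names, and every sample value below are illustrative assumptions, not statements of what any law requires.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VoiceRule:
    """Hypothetical per-jurisdiction settings; placeholder values, not legal advice."""
    explicit_consent: bool      # must consent be explicit and recorded?
    treat_as_biometric: bool    # is a voice sample classed as biometric data?
    max_retention_days: int     # longest allowed retention of raw samples


def strictest(rules: list[VoiceRule]) -> VoiceRule:
    """Merge rules so every field takes its most restrictive value."""
    if not rules:
        raise ValueError("at least one rule is required")
    return VoiceRule(
        explicit_consent=any(r.explicit_consent for r in rules),
        treat_as_biometric=any(r.treat_as_biometric for r in rules),
        max_retention_days=min(r.max_retention_days for r in rules),
    )


# Placeholder rule sets for three made-up regions.
REGION_RULES = {
    "region_a": VoiceRule(True, True, 365),
    "region_b": VoiceRule(True, False, 730),
    "region_c": VoiceRule(False, False, 1095),
}

default_rule = strictest(list(REGION_RULES.values()))
print(default_rule)
```

Merging field by field, rather than picking one "strictest region", matters because no single jurisdiction is necessarily the most restrictive on every dimension.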

Voice conversion brings big gains for creators, and big risks if unchecked. A clear voice conversion ethics code helps teams weigh both sides. In this section, we pair harms with proven benefits, share first-person accounts, and pull practical lessons into checklist items teams can adopt.

Real harms to watch

Misattribution and brand damage happen fast. Deepfakes can make an artist sound like they endorsed a product. That costs trust and revenue. Scams use cloned voices to trick finance teams or relatives. Emotional harm matters too: actors say their voice is part of their livelihood and identity, and misuse can feel like theft.

- Misattribution: fake endorsements and false statements.
- Financial risk: fraud, lost deals, or takedown costs.
- Emotional harm: distress, job loss, and reputational damage.

"I woke to message threads asking if I’d approved a commercial," says a voice actor. "It felt like someone stole my voice and my career security." This quote shows the human cost behind policy choices.

Clear benefits and use cases

When used ethically, voice cloning improves access and efficiency. Localization teams scale dubbed video to many languages. Accessibility teams generate audio for visually impaired learners. Studios speed up ADR (automated dialogue replacement) and preserve an actor’s style when the actor consents.

- Accessibility: narrated lessons and audio descriptions.
- Localization: fast, consistent multilingual dubbing.
- Creative workflows: non-destructive ADR and voice continuity.

"As a product manager, I’ve cut localization time by weeks, while keeping consent checks in place," says a product manager. "That balance drives adoption and trust."

Lessons that feed an ethics checklist

- Require verifiable consent, logged in an audit trail.
- Limit reuse and set expiry for synthetic voices.
- Tag outputs with a robust watermark or provenance data.
- Offer takedown and dispute routes with SLA targets.

These lessons point to clear operational controls. Add them to the creator and enterprise checklists to reduce risk while keeping benefits. The next sections show how to turn these lessons into policies, tech controls, and governance steps.

Technical Safeguards: Watermarking, Detection & Provenance

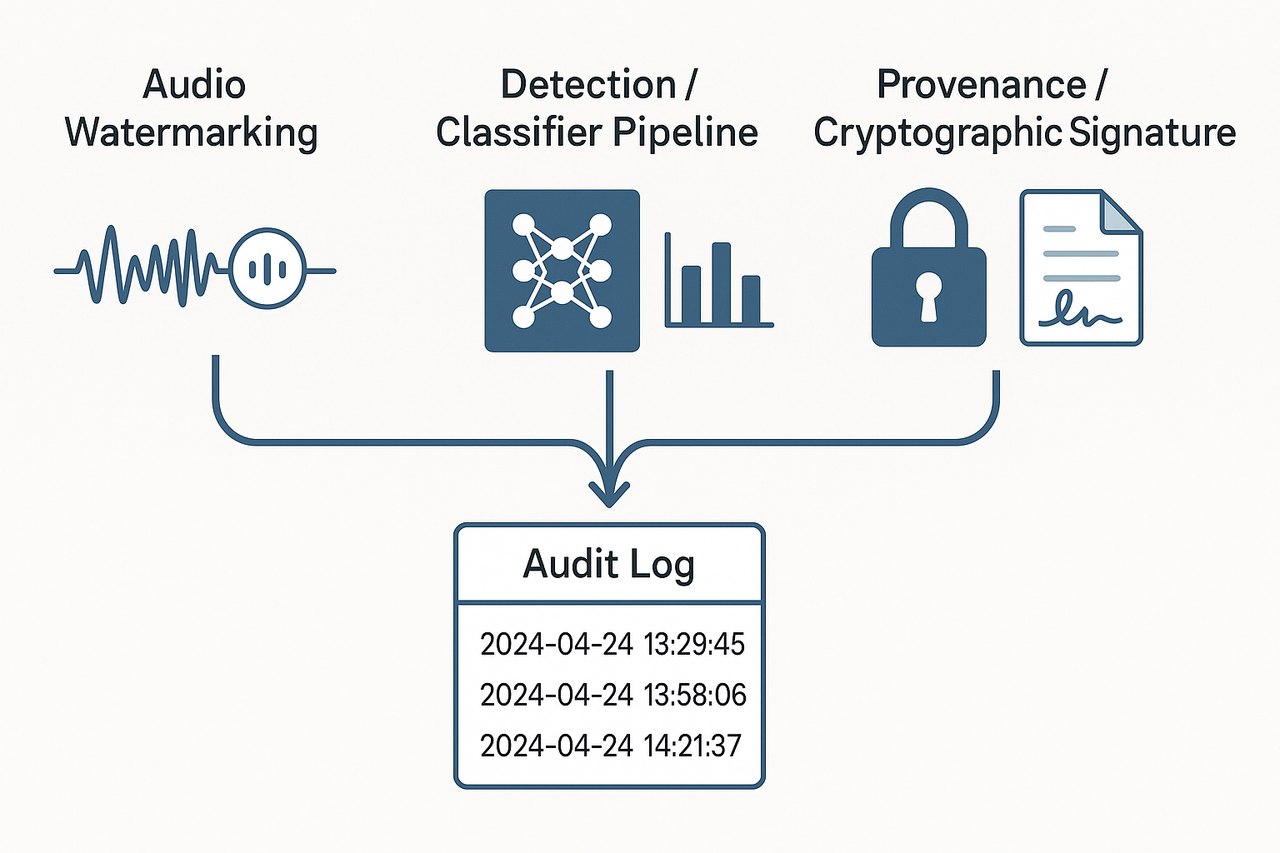

A practical voice conversion ethics code must tie policy to measurable tech controls. Start with three complementary defenses: audio watermarking, deepfake detection systems, and provenance through cryptographic signing and metadata. These tools make misuse traceable and give compliance teams audit trails.

Audio watermarking: embed signals you can check later

Watermarks hide a recoverable marker inside audio so you can prove the origin. Use robust, inaudible marks for published files, since they are designed to survive processing. Use fragile marks, which break when the audio is altered, for tamper checks during staged verification. Test against common transforms, like compression and re-recording, to measure real-world resilience.
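
A robustness test of this kind can be sketched with a toy additive spread-spectrum mark. This is a teaching example under stated assumptions, not a production watermark: the strength of 0.05 is chosen for a clear demo rather than inaudibility, coarse quantization and added noise only stand in for real codecs and re-recording, and a sine tone stands in for speech.

```python
import math
import random


def watermark_sequence(key: str, n: int) -> list[float]:
    """Key-seeded pseudo-random +/-1 sequence; the key acts as the shared secret."""
    rng = random.Random(key)
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]


def embed(samples: list[float], key: str, strength: float = 0.05) -> list[float]:
    """Add the scaled key sequence to the host audio."""
    mark = watermark_sequence(key, len(samples))
    return [s + strength * m for s, m in zip(samples, mark)]


def detect(samples: list[float], key: str, strength: float = 0.05) -> bool:
    """Correlate with the key sequence; a marked file scores near `strength`."""
    mark = watermark_sequence(key, len(samples))
    score = sum(s * m for s, m in zip(samples, mark)) / len(samples)
    return score > strength / 2


def quantize(samples: list[float], levels: int = 256) -> list[float]:
    """Crude lossy transform: round each sample to a coarse grid."""
    step = 2.0 / levels
    return [round(s / step) * step for s in samples]


def add_noise(samples: list[float], sigma: float = 0.05, seed: int = 7) -> list[float]:
    """Crude stand-in for re-recording: add Gaussian noise."""
    rng = random.Random(seed)
    return [s + rng.gauss(0.0, sigma) for s in samples]


# One second of a 440 Hz tone at 48 kHz stands in for real speech.
host = [0.5 * math.sin(2 * math.pi * 440 * t / 48_000) for t in range(48_000)]
marked = embed(host, key="demo-key")

results = {
    "clean": detect(marked, "demo-key"),
    "quantized": detect(quantize(marked), "demo-key"),
    "noisy": detect(add_noise(marked), "demo-key"),
    "unmarked": detect(host, "demo-key"),
    "wrong_key": detect(marked, "other-key"),
}
print(results)
```

The useful pattern is the harness rather than the mark itself: run the same detector over each transformed copy, plus unmarked and wrong-key controls, and record the outcomes as test evidence.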

Detection systems: signal analysis versus model classifiers

Detectors fall into two camps: signal analysis looks for artifacts in the waveform, while model classifiers learn patterns in features or embeddings. Use both, because signal tests catch simple tampering, while classifiers find learned synthetic artifacts. The ASVspoof 2021 challenge introduced a Deepfake (DF) task to evaluate the detection of manipulated, compressed speech data posted online, which informs detector benchmarks (see "ASVspoof 2021: Towards Spoofed and Deepfake Speech Detection in the Wild").

Provenance: cryptographic signatures and locked metadata

Sign files with a private key and embed publicly verifiable signatures in manifests. Pair signatures with immutable logs that record consent, sample source, and processing steps. Keep metadata minimal to protect privacy, but rich enough for audits.
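
A minimal sketch of a signed provenance manifest follows. To stay within the Python standard library it swaps in a keyed hash (HMAC-SHA256) for the private-key signature described above; HMAC is symmetric, so anyone who can verify can also sign, and a real deployment would use an asymmetric scheme such as Ed25519 instead. The manifest field names and sample values are assumptions made for illustration.

```python
import hashlib
import hmac
import json


def build_manifest(audio: bytes, consent_id: str, source_id: str, steps: list[str]) -> dict:
    """Minimal provenance record: content hash plus the fields an audit needs."""
    return {
        "audio_sha256": hashlib.sha256(audio).hexdigest(),
        "consent_id": consent_id,
        "source_id": source_id,
        "processing_steps": steps,
    }


def _canonical(manifest: dict) -> bytes:
    """Stable byte encoding so signer and verifier hash identical input."""
    return json.dumps(manifest, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_manifest(manifest: dict, key: bytes) -> str:
    """HMAC stand-in for a signature (assumption: symmetric demo key)."""
    return hmac.new(key, _canonical(manifest), hashlib.sha256).hexdigest()


def verify(audio: bytes, manifest: dict, tag: str, key: bytes) -> bool:
    """True only if the tag matches the manifest and the manifest matches the audio."""
    tag_ok = hmac.compare_digest(sign_manifest(manifest, key), tag)
    audio_ok = hashlib.sha256(audio).hexdigest() == manifest["audio_sha256"]
    return tag_ok and audio_ok


key = b"demo-key-not-for-production"
audio = b"\x00\x01placeholder-pcm-bytes"
manifest = build_manifest(audio, "consent-001", "session-42", ["denoise", "convert"])
tag = sign_manifest(manifest, key)

print(verify(audio, manifest, tag, key))         # True: audio and manifest intact
print(verify(audio + b"x", manifest, tag, key))  # False: audio was altered
```

The manifest carries only identifiers and a hash, which keeps personal data out of the signed record while still letting an auditor link the file to its consent entry.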

Choose layered defenses and make them auditable

- Core stack: watermark + dual-mode detector + cryptographic manifest.
- Acceptance tests: false positives and robustness thresholds.
- Regular re-validation against new synthetic samples.
- Tamper-evident audit logs and retention policy.

Expect tradeoffs: stricter detection raises false positives, while strong watermarks may affect audio quality or privacy. Prioritize based on risk: public releases need the fullest stack, internal prototypes can start lighter and scale up. Make every control testable and logged for compliance reviews.
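
The false-positive tradeoff can be made testable with a small acceptance check. The sketch below assumes a detector that outputs a score where higher means "more likely synthetic"; the scores, labels, and limits are made-up sample values, not benchmark results.

```python
def error_rates(scores: list[float], labels: list[bool], threshold: float) -> tuple[float, float]:
    """Return (false-positive rate, false-negative rate) at a threshold.

    labels[i] is True when sample i is synthetic; a score >= threshold flags it.
    """
    negatives = sum(1 for y in labels if not y)
    positives = sum(1 for y in labels if y)
    if negatives == 0 or positives == 0:
        raise ValueError("need both genuine and synthetic samples")
    false_pos = sum(1 for s, y in zip(scores, labels) if s >= threshold and not y)
    false_neg = sum(1 for s, y in zip(scores, labels) if s < threshold and y)
    return false_pos / negatives, false_neg / positives


def passes_acceptance(scores, labels, threshold, max_fpr, max_fnr) -> bool:
    """Acceptance test: both error rates must stay within the agreed limits."""
    fpr, fnr = error_rates(scores, labels, threshold)
    return fpr <= max_fpr and fnr <= max_fnr


# Four genuine clips followed by four synthetic clips (illustrative scores).
scores = [0.05, 0.20, 0.35, 0.60, 0.55, 0.80, 0.90, 0.97]
labels = [False, False, False, False, True, True, True, True]

print(error_rates(scores, labels, threshold=0.5))  # (0.25, 0.0)
print(error_rates(scores, labels, threshold=0.7))  # (0.0, 0.25)
print(passes_acceptance(scores, labels, 0.7, max_fpr=0.1, max_fnr=0.3))  # True
```

Lowering the threshold flags more synthetic audio but also more genuine recordings, so the acceptable limits should be set per release type and logged with each test run.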

Operationalizing Ethics: Checklists for Creators and Enterprises

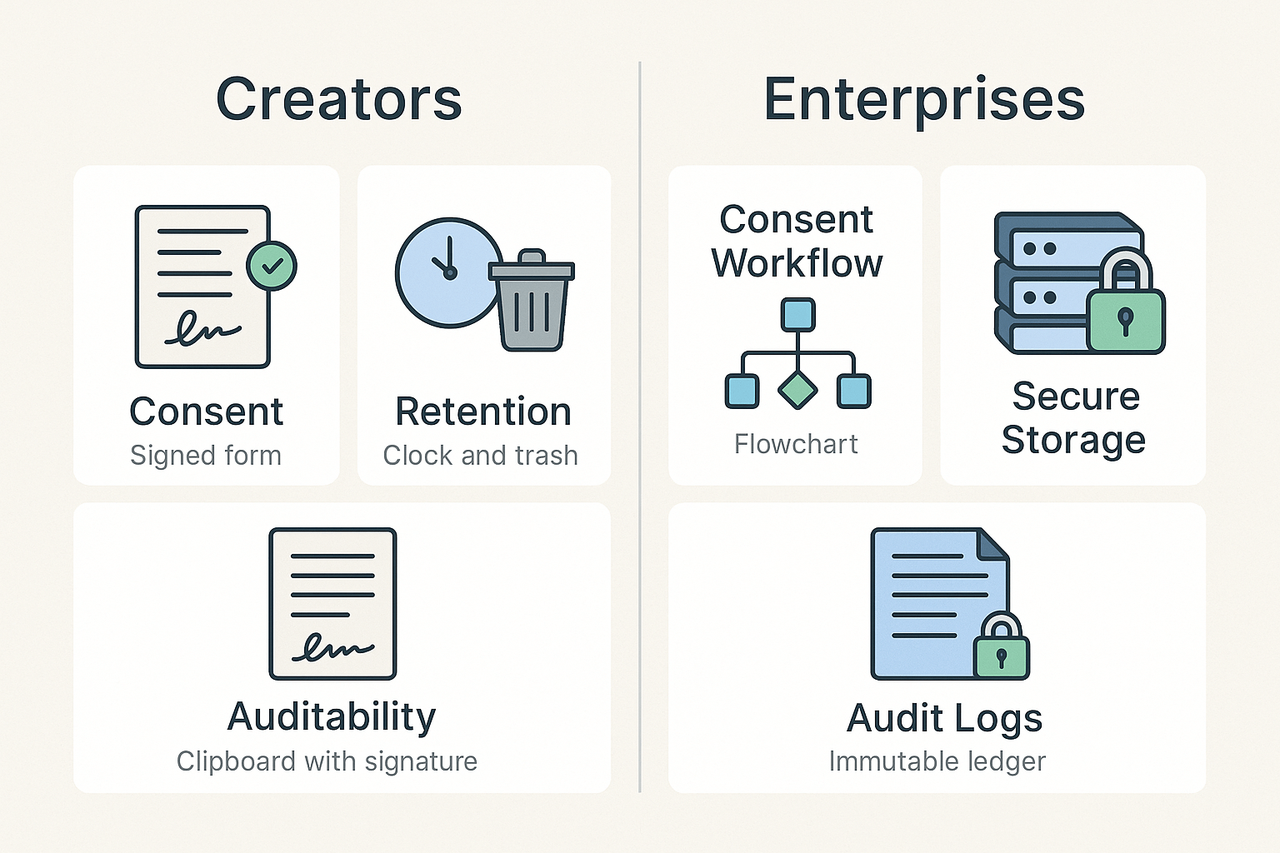

A practical ethics code must be usable, not just aspirational. This short guide shows role-based checklists and a simple 30-day rollout teams can follow. Use it to set consent, data handling, retention, and audit rules that protect talent and reduce risk for organizations using voice conversion technology.

Creators and Voice Actors: Quick Checklist

Creators need clear, short rules they can follow every time they record. Keep records, get informed consent, and limit what you share.

- Use plain-language consent: who will clone the voice, the purposes, the languages, and reuse limits. Include opt-out terms.
- Signed contract terms: scope, payment, rights, revocation process, and dispute handling. Keep a copy for audits.
- Data minimization: share only the audio needed for the agreed use. Avoid extra takes or personal data.
- Retention policy: specify how long samples and models are kept, and deletion steps after the term ends.
- Provenance and markers: require watermarking or metadata tags so cloned audio is traceable.
- Audit record: keep a log of consents and releases linked to each clone (timestamps, signer identity).
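
The consent items above map naturally onto a structured record that tools can check before each use. The sketch below is one possible shape, not a standard schema; the `ConsentRecord` class, its field names, and the sample values are all assumptions.

```python
from dataclasses import dataclass, replace
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class ConsentRecord:
    """Hypothetical consent record: who, for what, in which languages, until when."""
    speaker_id: str
    signer_identity: str
    granted_to: str
    purposes: frozenset
    languages: frozenset
    signed_at: datetime
    expires_at: datetime
    revoked_at: Optional[datetime] = None

    def permits(self, purpose: str, language: str, at: datetime) -> bool:
        """Allow a use only inside the signed scope, term, and before any revocation."""
        if self.revoked_at is not None and at >= self.revoked_at:
            return False
        in_term = self.signed_at <= at < self.expires_at
        return in_term and purpose in self.purposes and language in self.languages


record = ConsentRecord(
    speaker_id="spk-001",
    signer_identity="signer-placeholder",
    granted_to="example-studio",
    purposes=frozenset({"dubbing"}),
    languages=frozenset({"en", "es"}),
    signed_at=datetime(2025, 1, 1, tzinfo=timezone.utc),
    expires_at=datetime(2026, 1, 1, tzinfo=timezone.utc),
)
mid_term = datetime(2025, 6, 1, tzinfo=timezone.utc)

print(record.permits("dubbing", "es", mid_term))      # True: inside scope and term
print(record.permits("advertising", "es", mid_term))  # False: purpose not granted

# Revocation (the opt-out path) overrides an otherwise valid grant.
revoked = replace(record, revoked_at=datetime(2025, 3, 1, tzinfo=timezone.utc))
print(revoked.permits("dubbing", "es", mid_term))     # False
```

Making the record immutable and creating a new copy on revocation keeps each state available for the audit log.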

Enterprise and Product Teams: Operational Checklist

Enterprises must make technical and legal controls repeatable and auditable across teams.

- Consent workflow: implement recorded consent templates and automated capture before cloning.
- Contract standards: standard clauses for third parties, sublicensing, and rights reversion.
- Data handling: encrypt samples at rest and in transit, and limit access by role.
- Retention and purge: automated retention rules, certified deletion, and metrics for compliance reviews.
- Audit logs: immutable logs for who created a clone, when, why, and what model was used (include hash or ID).
- Detection and watermarking: deploy robust watermarking and detection tools for downstream use.
- Third-party review: schedule periodic external audits and red-team tests.
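
One common way to approximate an immutable audit log is a hash chain, where each entry commits to the one before it. The sketch below is a minimal in-memory version; the event fields are assumptions, and a real system would also need durable storage and access control.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, event: dict) -> str:
    """Hash the event together with the previous entry's hash."""
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log: list, event: dict) -> None:
    """Add an event, linking it to the current head of the chain."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _entry_hash(prev_hash, event)})


def verify_chain(log: list) -> bool:
    """Recompute every link; any edited, removed, or reordered entry breaks it."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


log = []
append(log, {"who": "user-17", "when": "2025-06-01T10:00:00Z",
             "why": "dubbing project", "model_id": "model-abc"})
append(log, {"who": "user-17", "when": "2025-06-01T10:05:00Z",
             "why": "export", "model_id": "model-abc"})
print(verify_chain(log))  # True

log[0]["event"]["why"] = "edited after the fact"
print(verify_chain(log))  # False: the first entry no longer matches its hash
```

A chain like this is tamper-evident rather than tamper-proof: someone able to rewrite the whole log could recompute every hash, so the latest hash should be stored or published somewhere the log's operators cannot alter.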

30-day rollout: Stand up baseline controls

Start small and iterate weekly.

- Days 1–7: Draft consent language, basic contracts, and role matrix. Assign owners.
- Days 8–14: Implement capture and storage rules, enable encryption, and limit access.
- Days 15–21: Add automated retention and deletion workflows, and start logging events.
- Days 22–30: Run an internal audit, test watermarking/detection, and schedule third-party review.

Platforms that lock clones to the original speaker and use encrypted processing reduce friction for both creators and enterprises by making consent, auditability, and secure handling easier to enforce.

Governance & Enforcement: Audits, Transparency and Third‑Party Oversight

A governance framework makes an ethics code enforceable, not just aspirational. Start with clear roles, measurable inputs, and public reporting so creators and audiences can trust processes. A strong voice conversion ethics code should tie policy to audits, red-team tests, and fast dispute resolution.

Set measurable governance inputs

- Audit frequency: internal reviews quarterly, independent audits annually.
- Red-team testing: deepfake resistance tests at least twice a year.
- KPIs: takedown time, false-positive rates for detectors, percentage of voice uses with verified consent.
- Record retention: immutable logs for 2–7 years, depending on region and contract.
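
Two of these KPIs can be computed directly from event records, as in the sketch below. The input shapes (a `consent_verified` flag per use, takedown durations in hours) and the sample numbers are assumptions chosen for illustration.

```python
from statistics import median
from typing import Optional


def governance_kpis(uses: list, takedown_hours: list) -> dict:
    """Summarize two KPIs; None means there was nothing to measure."""
    verified = sum(1 for use in uses if use["consent_verified"])
    verified_pct: Optional[float] = 100.0 * verified / len(uses) if uses else None
    median_hours: Optional[float] = median(takedown_hours) if takedown_hours else None
    return {
        "verified_consent_pct": verified_pct,
        "median_takedown_hours": median_hours,
    }


# Illustrative records for one reporting period.
uses = [
    {"clone_id": "c1", "consent_verified": True},
    {"clone_id": "c2", "consent_verified": True},
    {"clone_id": "c3", "consent_verified": False},
    {"clone_id": "c4", "consent_verified": True},
]
takedown_hours = [2.0, 30.0, 5.0]

kpis = governance_kpis(uses, takedown_hours)
print(kpis)  # {'verified_consent_pct': 75.0, 'median_takedown_hours': 5.0}
```

The median is used for takedown time so that one slow case does not hide typical performance; a team that cares about worst cases could report the maximum alongside it.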

Audits, transparency, and dispute steps

Create an internal ethics committee for intake and a separate compliance team for enforcement. Use independent third-party audits and prefer certifiers that meet ISO/IEC 27006-1:2024, which specifies additional requirements for bodies that audit and certify information security management systems (ISMS) in accordance with ISO/IEC 27001, ensuring certifications are issued competently, consistently, and impartially. Publish transparency reports quarterly with anonymized counts of clones created, takedowns, and audit outcomes.

For disputes, follow a three-step flow: 1) rapid suspension on credible complaint, 2) neutral review by an independent reviewer, 3) remediation or takedown with appeal rights. These steps reduce risk and show creators you respond quickly and fairly.
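
The three-step flow can be encoded as a small state machine so that tooling rejects skipped steps. The state names and transition table below are one interpretation of the steps above, not a prescribed process; for brevity the sketch leaves out a reinstatement outcome, which a real process would likely need when a complaint is not upheld.

```python
from enum import Enum


class State(Enum):
    ACTIVE = "active"
    SUSPENDED = "suspended"    # step 1: rapid suspension on credible complaint
    IN_REVIEW = "in_review"    # step 2: neutral, independent review
    REMEDIATED = "remediated"  # step 3: outcome
    TAKEN_DOWN = "taken_down"  # step 3: outcome
    APPEALED = "appealed"      # appeal rights reopen the review


# Hypothetical transition table derived from the three steps.
ALLOWED = {
    State.ACTIVE: {State.SUSPENDED},
    State.SUSPENDED: {State.IN_REVIEW},
    State.IN_REVIEW: {State.REMEDIATED, State.TAKEN_DOWN},
    State.REMEDIATED: {State.APPEALED},
    State.TAKEN_DOWN: {State.APPEALED},
    State.APPEALED: {State.IN_REVIEW},
}


def advance(current: State, target: State) -> State:
    """Move to the target state, or raise if the step would skip the process."""
    if target not in ALLOWED[current]:
        raise ValueError(f"cannot go from {current.value} to {target.value}")
    return target


state = State.ACTIVE
for step in (State.SUSPENDED, State.IN_REVIEW, State.TAKEN_DOWN, State.APPEALED):
    state = advance(state, step)
print(state)  # State.APPEALED

try:
    advance(State.ACTIVE, State.TAKEN_DOWN)  # takedown without suspension or review
except ValueError as err:
    print(err)  # cannot go from active to taken_down
```

Recording each accepted transition in the audit log gives the timestamps needed to report takedown time as a KPI.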

Future Trends & How Responsible Products (like DupDub) Should Evolve

Regulators and platforms will demand clearer proof about who made a voice, and when. Over the next five to ten years, we expect stronger provenance mandates, native watermarking standards, and widespread detection services. A practical voice conversion ethics code will help teams follow rules and keep creators safe.

Prioritize provenance, watermarking, and detection

Product roadmaps should bake in tamper-evident metadata and native watermarking. Detection-as-a-service will run checks before publishing, and exportable audit trails will be standard. Vendors must make verification fast and reliable for large-scale workflows.

Key product features to include:

- Granular consent flows, with signed capture records.
- Encrypted clone locking so a synthetic voice always ties to the original speaker.
- Exportable, searchable audit trails for every cloning and use event.
- Built-in watermarking and hosted detection APIs for platform checks.

Balance openness with safety

Keep SDKs and voice styles flexible for creators, while gating risky actions. Use tiered access, review queues, and enterprise approvals for high-risk exports. Offer developer tools and clear guardrails so creativity can continue without raising harm.

Plan compliance milestones, run third-party audits, and update your code often as laws and tech shift. Try responsible tools and request an enterprise compliance review to see how your workflows measure up.

FAQ — Common Questions about Voice Conversion Ethics Codes

- Can voices be cloned without consent?

  No. A good voice conversion ethics code forbids cloning without explicit, recorded consent. Keep signed consent forms, audio samples, and an audit trail for every clone.

- How do I verify a voice's provenance or authenticity?

  Check embedded metadata, cryptographic signatures, watermarking, and retention logs. Combine detection tools with manual forensic review when needed.

- What are enterprise compliance obligations for voice cloning?

  Enterprises must document consent, run Data Protection Impact Assessments, vet vendors, enforce retention rules, and train staff on safe use.

- How reliable is watermarking and detection?

  Watermarking helps, but it is not foolproof. Use layered controls: robust watermarking, active detectors, provenance records, and periodic red-team tests.

- Where do I report misuse or deepfake audio?

  Report to the hosting platform or publisher, follow your incident response, and notify law enforcement for fraud or harm.

- Quick next step: how do I pilot a safe workflow?

  Start with consent templates, locked clones, automatic watermarking, and audit logs. Run the 30-day rollout checklist in Operationalizing Ethics.